Abstract

Spectral imaging modalities, including reflectance and X-ray fluorescence, play an important role in conservation science. In reflectance hyperspectral imaging, the data are classified into areas having similar spectra and turned into labeled pigment maps using spectral features and fusing with other information. Direct classification and labeling remain challenging because many paints are intimate pigment mixtures that require a non-linear unmixing model for a robust solution. Neural networks have been successful in modeling non-linear mixtures in remote sensing with large training datasets. For paintings, however, existing spectral databases are small and do not encompass the diversity encountered. Given that painting practices are relatively consistent within schools of artistic practices, we tested the suitability of using reflectance spectra from a subgroup of well-characterized paintings to build a large database to train a one-dimensional (spectral) convolutional neural network. The labeled pigment maps produced were found to be robust within similar styles of paintings.

Introduction

The development of spectral macroscale mapping modalities has provided conservators, scientists and art historians with the ability to examine the distribution of pigments across works of art with unprecedented detail. This allows for a more robust understanding of an artist’s creative process, and helps answer certain art historical research questions. Importantly, it also informs conservators and museums on how to better preserve these works based on their materiality, propensity for degradation, or even by identifying degradation products of processes already occurring. The availability of pigment maps for a work of art, where each class is labeled as a specific pigment or pigment mixture, greatly enhances the ability for conservators to analyze paintings.

Currently the most widely used macroscale imaging modalities for art examination are imaging X-ray fluorescence (XRF) spectroscopy [1], and reflectance hyperspectral imaging (typically 400 to \(\sim 1000\) nanometer (nm) and sometimes out to 2500 nm) [2], otherwise known as reflectance imaging spectroscopy (RIS). These two modalities provide complementary information that can be used to identify and map many of the pigments over a painting’s surface [3]. Both modalities consist of numerous narrow spectral band images, thus creating a 3-D image cube, where the first two dimensions are spatial, and the third dimension is spectral. This produces a spectrum at each spatial pixel in the image cube. The processing of these data cubes has focused on grouping spatial pixels having similar spectral information, allowing visualization of locations on a painted surface that may share a chemical makeup. While XRF data can be processed readily to make elemental maps [4], the direct translation of these into labeled pigment maps is, in general, not possible, as the same element can often be found in more than one pigment (though exceptions occur, such as the element mercury which can usually be assigned to the pigment vermilion in a painted object). Analysis of RIS data cubes of paintings is more challenging, and has typically utilized workflows and algorithms developed for remote sensing of minerals and vegetation.

Generally in remote sensing, the exploitation of reflectance image cubes to make classification and/or material maps has been an active area of research for decades, utilizing both physics-based and data-driven algorithms [5,6,7]. Classification maps help segment large reflectance image cubes into a discrete set of representative spectra (known as endmembers or classes). A classification map groups related spectra that comprise a given class, but does not identify the specific materials present. A material map goes further and identifies the specific materials (e.g. minerals) that make up each class.

A typical workflow in remote sensing for automatically labeling classes as materials requires a priori knowledge of the area imaged: enough must be known about the expected materials that an appropriate spectral library of pure materials can be selected. One of a variety of algorithms can then be used to find the best library match to the spectral endmember of each class. This approach requires libraries that consist of a handful of spectra for each known pure material in the area imaged [8,9,10]. Its success is limited when the reflectance spectra of a material in the area imaged show variations, such as those caused by differences in particle size, that are not represented in the spectral library [11].
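As an illustration of library matching, the sketch below uses the spectral angle, one commonly used similarity measure. The text does not single out a matching algorithm, so this choice is an assumption, and the library entries are hypothetical three-band spectra, not measured data.

```python
import math

def spectral_angle(a, b):
    """Angle (radians) between two spectra treated as vectors; 0 means identical shape."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cos)

def best_match(endmember, library):
    """Return the name of the library spectrum with the smallest spectral angle."""
    return min(library, key=lambda name: spectral_angle(endmember, library[name]))

# Hypothetical library of pure-material spectra (illustrative values only).
library = {
    "azurite": [0.10, 0.30, 0.10],
    "vermilion": [0.05, 0.05, 0.60],
}
# An endmember with the same shape as the azurite entry but twice as bright.
endmember = [0.20, 0.60, 0.20]
match = best_match(endmember, library)
```

Because the spectral angle ignores overall brightness, the brighter endmember still matches the azurite entry. A change in spectral shape that the library lacks, such as one caused by particle size, would not be absorbed in the same way.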

If the spectra are linearly mixed, that is, if the endmember spectrum comes from a pixel covering a portion of the imaged area in which more than one material is present but the materials are spatially separated, then the endmember spectrum can be fit by an area-weighted linear combination of the pure-material spectra found in the library. If, however, the materials are mixed intimately, so that light does not simply reflect off one material and into the hyperspectral camera but is instead scattered and/or absorbed by adjacent materials before entering the camera, then the measured spectrum is not, in general, a weighted linear sum.
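The linear case can be sketched for two spatially separated materials as follows. The spectra and function names are hypothetical, and the closed-form least-squares fit assumes exactly two endmembers whose area fractions sum to one.

```python
def linear_mix(endmembers, fractions):
    """Area-weighted linear combination of pure-material spectra."""
    n_bands = len(endmembers[0])
    return [
        sum(f * e[b] for f, e in zip(fractions, endmembers))
        for b in range(n_bands)
    ]

def unmix_two(measured, e1, e2):
    """Least-squares area fraction a of e1, assuming measured = a*e1 + (1 - a)*e2."""
    # Rearranged: measured - e2 = a * (e1 - e2), a one-parameter linear fit.
    num = sum((m - y) * (x - y) for m, x, y in zip(measured, e1, e2))
    den = sum((x - y) ** 2 for x, y in zip(e1, e2))
    return min(1.0, max(0.0, num / den))  # keep the fraction physically meaningful

# Hypothetical 4-band reflectance spectra (illustrative values only).
blue = [0.30, 0.20, 0.05, 0.05]
white = [0.90, 0.90, 0.90, 0.90]

# A pixel covering 25% blue paint and 75% white paint, side by side.
pixel = linear_mix([blue, white], [0.25, 0.75])
fraction_blue = unmix_two(pixel, blue, white)
```

For a pixel that really is an area-weighted sum, the fit recovers the blue fraction. For an intimate mixture the same fit would generally return a misleading fraction, which is the motivation for the non-linear models discussed next.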

For intimate mixtures a non-linear unmixing model is required to correctly assign the materials present in each class and thus make an accurate material map [12]. Two types of models have evolved over time. The first are the physics-based models that require knowledge of physical and optical properties of the materials in the mixture, as well as information about the pigment stratigraphy [13, 14]. Approximations are often made in such models to reduce the amount of detailed information required.

Alternative data-driven models have evolved in part as a solution to these challenges of intimate mixing. Because neural networks and deep learning models can model non-linear functions, these models have recently been applied to the remote sensing RIS classification challenge with growing success [15, 16]. However, to create accurate material maps with convolutional neural networks (CNN), large labeled reflectance databases (training datasets) are required. Spectral signature libraries of pure materials typically do not contain sufficient sample diversity to create robust material maps when a class is composed of an intimate mixture. Currently only a handful of open-source, labeled, remote sensing RIS datasets are available for developing classification models, and they are limited to one area imaged with fewer than 20 unique classes each (e.g., Salinas, Indian Pines, Pavia datasets [17]). Several studies have indicated that neural networks can outperform traditional unmixing methods when applied to RIS remote sensing data [18,19,20,21].

To date the majority of RIS data sets of paintings have been analyzed with linear mixing algorithms in order to create classification maps. The most commonly used workflow is the Spectral Hourglass Wizard (SHW) in the Environment for Visualizing Images (ENVI) software [22,23,24]. The application of this workflow has been most successful when identification of the clusters which define potential classes is done manually, by an experienced user, in a reduced dimensional space with a subset of the spectra from the RIS data cube. Such processing, while successful, is also time-consuming [2, 25]. Other automatic and more rapid algorithms to generate the class maps have shown promise but tend to find only about 70 to 80% of the classes in real paintings [25]. All of the algorithms used in these workflows assume linear mixing, and mixtures of pigments are treated as a single paint (a relatively consistent mixture of colored pigments) and hence a unique material. Labeling of the paint classes into their component pigments (i.e., labeled pigment maps) is done either by identifying characteristic reflectance spectral features or by spatially fusing the class maps with results from other analytical methods, e.g., XRF, extended-range reflectance (near-ultraviolet, near-infrared, and mid-infrared), and Raman spectroscopies, which provide more detailed chemical information. One promising non-linear unmixing model uses a convolutional neural network architecture to separate X-ray images of artwork painted on both sides of their support [26].

Other approaches that have been explored in the analysis of RIS image cubes from paintings skip the classification step in order to directly assign the pigments present. Among these is the Kubelka-Munk model [27], which can predict the reflectance spectra for intimate mixtures of pigments in optically thick paint layers from a weighted sum of the ratios of the absorption (K(\(\lambda\))) and scattering (S(\(\lambda\))) coefficients of each pigment. The pigments in the mixtures are thus determined by a least squares fitting of the unknown reflectance spectra using a library of K(\(\lambda\)) and S(\(\lambda\)) coefficients for the pigments expected to be present. These approaches have yielded good success for paint-outs and model paintings prepared from the pigments in the reference library, but have had limited success on real paintings [28, 29]. This is likely due to a variety of factors, including the fact that in the visible spectral region more than one mixture of pigments can provide a good fit. An interesting proposed work-around to this problem is the use of a neural network to pre-select the pigments for the least squares fitting of the K and S parameters from a library [14]. The wide array of artists’ methods for achieving a particular visual appearance (such as using a lower paint layer to create specific optical effects), however, relies on a variety of materials and mixtures, pigment particle sizes, and paint layer thicknesses, which presents a major difficulty when implementing the Kubelka-Munk approach.
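A minimal sketch of the Kubelka-Munk relations for an optically thick layer is given below. It assumes the common two-constant form, in which the mixture's K and S are concentration-weighted sums of the pigment coefficients; the cited works may use a different variant. The single-band coefficient values are hypothetical.

```python
import math

def km_ratio(reflectance):
    """K/S of an optically thick layer from its reflectance R: (1 - R)^2 / (2R)."""
    return (1.0 - reflectance) ** 2 / (2.0 * reflectance)

def km_reflectance(k_over_s):
    """Inverse relation: R = 1 + K/S - sqrt((K/S)^2 + 2 K/S)."""
    return 1.0 + k_over_s - math.sqrt(k_over_s ** 2 + 2.0 * k_over_s)

def mixture_reflectance(concs, K, S):
    """Reflectance of an intimate mixture, band by band (two-constant assumption).

    concs: concentration of each pigment; K[i][b], S[i][b]: absorption and
    scattering coefficients of pigment i in band b.
    """
    n_bands = len(K[0])
    out = []
    for b in range(n_bands):
        k_mix = sum(c * Ki[b] for c, Ki in zip(concs, K))
        s_mix = sum(c * Si[b] for c, Si in zip(concs, S))
        out.append(km_reflectance(k_mix / s_mix))
    return out

# Hypothetical single-band coefficients: a dark pigment (R = 0.4) and a light one (R = 0.8).
K = [[0.45], [0.025]]
S = [[1.0], [1.0]]
mixed = mixture_reflectance([0.5, 0.5], K, S)
linear_average = 0.5 * 0.4 + 0.5 * 0.8
```

In this toy case the equal-parts intimate mixture comes out darker than the linear average of the two pure reflectances, which illustrates why a linear unmixing model misestimates such paints.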

Analysis of paintings by conservators and conservation scientists over the years has documented the diversity of paintings by individual artists and artistic schools throughout history. There is a widespread use of pigment mixtures and layered paint structures (stratigraphies) in paintings from the late medieval period through to the present. Artists used materials from different sources and often combined pure pigments to expand the range of colors, or hues, available to them. In any given area of a painting, there may be anywhere from a single layer of paint to a highly complex stratigraphy of a preparatory or ground layer (often chalk or gypsum), one or more paint layer(s), colored transparent glazes, and varnish layers. In RIS the paint stratigraphy cannot be ignored since deeper layers can become visible in the deep red to near-infrared spectral region (\(\lambda > 600 \,\hbox {nm}\)) owing to the decreased electronic absorption and light scattering of the pigment particles. Since the layered structure of a painting and the pigments that comprise each layer are not known in advance, a priori physics-based modeling is challenging for these complex datasets, especially since a robust open source two-parameter (absorption and scattering) spectral library of pigments is not available (although reflectance spectra of paints made using historical recipes and containing a limited number of pigments have been measured) [30].

To overcome the limitations of applying physics-based models for intimate mixtures found in paintings (lack of sufficient information on the optical properties of the pigments likely present and their stratigraphy), in this study we have chosen to explore a single-step data-driven solution to pigment labeling of reflectance spectra from RIS data. However, like the data-driven solutions for non-linear mixing in remote sensing, a large training dataset is needed to train the network models. Furthermore, the spectral training database for a neural network model must include the cases of intimate pigment mixtures, making these databases even larger than those for physics-based models.

While fine art painting is a highly creative human endeavor, examination of real objects suggests that artists did follow some patterns in working with materials to achieve desired colors and visual effects. For example, the fast-drying paints used in tempera painting (painting using a water-soluble paint binder such as egg yolk or gum arabic) could not be blended and reworked in the way that the slower drying oil paints allowed. With the adoption of drying oils, the number of paint layers increased from a few to tens of layers. Materials also changed: minerals, plants and insects provided many pigments before the chemical manufacture of pigments in the 18\({\mathrm{th}}\) century dramatically changed what was available to and used by artists. Thus, in general, the pigments and pigment mixtures, paint thicknesses, and number of layers encountered in a painting are expected to vary with the materials available and artistic practice at any point in time, and in a somewhat predictable manner.

The adherence to a set of practices, and use of particular sets of artist materials, often overlaps with defined artistic schools (defined historically or geographically). This fact offers a possible solution to making a robust training library for a data-driven model for directly labeling pigment maps from RIS data cubes of paintings. In this paper we explore the suitability of building a training dataset from regions of well-characterized paintings for an end-to-end supervised one-dimensional convolutional neural network (1D-CNN). The 1D-CNN architecture was chosen because of its state-of-the-art performance on 1D signals [31]. Spectra from paints containing single or multiple pigments are collected for the training library to incorporate the inherent variability in the data. This leverages the inference that for a collection of related paintings, artists follow a similar, but not identical, working process. Such training sets can therefore be expected to contain most of the diversity in hue and intensity required for robust classification, which is not found in pigment libraries. When new RIS image cubes are processed using the 1D-CNN model, they will be labeled as containing particular pigments, creating a material map in a single step.

To test the pigment maps created by the 1D-CNN model, test cases based on paintings in 14\({\mathrm{th}}\) century illuminated manuscripts were used. The resulting 1D-CNN was assessed in two ways. First, the model’s mean-per-class-accuracy was computed to evaluate the performance of the model. Secondly, the model’s results were compared to those obtained via the more common, two-step approach (spectral classification followed by labeling of pigments present based on additional information) to verify the accuracy of the model. Paintings from two illuminated manuscripts were used to test the robustness of the model.

Results

Data and experimental setup

The workflow to create a neural network with an appropriate training dataset and to produce labeled pigment maps of paintings is outlined in Fig. 1 and consists of four steps: 1. collect a sufficiently large spectral training dataset in which the pigments for each spectrum are labeled; 2. create a neural network to predict pigments present in the input RIS spectra; 3. validate the accuracy of the network (predictions of pigments present) with a hold-out sample (10% of the training data); and 4. test the network prediction of pigments present on two well-characterized paintings that were not part of the training dataset.

In order to build a reasonable pigment labeled reflectance spectral training dataset for a given artistic school, paintings from which training data are selected must meet several constraints. They must be painted using a similar suite of materials, and generally with similar painting methods with respect to ground application (or absence thereof), degree of layering, degree of pigment mixing, etc. as described above. They need not, necessarily, be painted by the same artist, so long as these general criteria are met. Having reflectance data from the work of several artists who paint using similar methods may make the training data more robust. Manuscript illuminations (the painted images found within early books) have been widely analyzed by RIS [32,33,34] and provide an ideal test case for the approach used here. We have therefore selected paintings from a single book likely executed by a small number of artists, all with access to similar pigments, and following similar painting techniques with respect to pigment mixtures and glazes (that is, operating in the same general school of artistic practice).

Additionally, the set of pigments used in manuscript illumination is relatively limited, and well-studied, making it possible to confidently identify examples of the most commonly encountered pigments, pigment mixtures, and painting techniques [35,36,37,38,39]. For example, purple pigments can be derived from natural materials such as mollusks, lichens or dye plants, or by using mixtures of blue pigments (e.g. azurite, ultramarine, indigo) with red lake pigments (such as carminic acid or brazilwood) to create purple hues. Similarly, blue pigments were often mixed with yellow pigments (lead tin yellow or yellow dyes precipitated onto substrates) to expand the range of copper-based green materials available to an illuminator. The possible combinations of materials could create variation even within a single object in the painting. To model the three-dimensional form of a blue azurite robe, for example, lead white could be mixed in larger amounts to achieve highlights on the robe, or a transparent red lake could be layered on top of the blue to define purplish shadows. Both mixing and layering can contribute to the non-linear mixing effects evident in reflectance spectra from such areas.

The reflectance training dataset created for the 1D-CNN consisted of spectra collected from four well-characterized paintings from an illuminated manuscript containing many of these commonly encountered materials and mixtures. The manuscript chosen for this work was the Laudario of Sant’Agnese (c. 1340), one of only three surviving illuminated books of this type (a laudario is a collection of hymns of praise), and which has individual illuminations (described as paintings throughout this paper for clarity) by at least two artists, which are now dispersed in several collections around the world [40,41,42]. The paintings used to build the training set (Additional file 1: Figure S1) include:

1. The Martyrdom of Saint Lawrence, Pacino di Bonaguida, about 1340, Tempera and gold leaf on parchment. Getty Museum, Los Angeles, Ms. 80b (2006.13), verso

2. The Ascension of Christ, Pacino di Bonaguida, about 1340, Tempera and gold leaf on parchment. Getty Museum, Los Angeles, Ms. 80a (2005.26), verso

3. The Nativity with the Annunciation to the Shepherds, Master of the Dominican Effigies, c. 1340, miniature on vellum, National Gallery of Art, Washington, D.C., Rosenwald Collection, 1949.5.87

4. Christ and the Virgin Enthroned with Forty Saints, Master of the Dominican Effigies, c. 1340, miniature on vellum, National Gallery of Art, Washington, D.C., Rosenwald Collection, 1959.16.2

These paintings from the Laudario have been studied in great detail to determine the pigments and paint mixtures used as well as the artists’ working methods. The illuminations in the collection of the J. Paul Getty Museum were extensively studied for the 2012–2013 exhibition Florence at the Dawn of the Renaissance: Painting and Illumination 1300–1350 using point-based analysis techniques (XRF, Raman spectroscopy and microscopic examination), broadband infrared imaging (900–1700 nm) and ultraviolet light induced visible fluorescence photography [34]. More recently these folios have been re-examined by RIS, XRF mapping (also referred to as scanning macro-XRF spectroscopy or MA-XRF), as well as point-based fiber optic reflectance spectroscopy (350–2500 nm) for this work. The point analysis data were combined with the RIS data and XRF maps to define regions of the data cubes where similar pigments are present. The results of all of these studies are summarized in Additional file 1: Table S2. The two works in the collection of the National Gallery of Art have also been previously studied for the Colour Manuscripts in the Making: Art and Science conference (2016, University of Cambridge), and the RIS image cubes have been classified and labeled with the pigments determined to be present from the RIS spectra and/or from the results of site-specific XRF and fiber optic reflectance spectroscopy (350–2500 nm) [25, 33].

In constructing the training spectral dataset, regions in the RIS cubes having the same spectral shape and known pigment composition were selected both within a given painting as well as among all four paintings. The labels of the training dataset represent the pigment(s) whose spectral signature(s) dominate(s) the spectra (i.e., with the effects of the substrate and presence of ad-mixed white pigments included). Thus, an area containing mostly azurite will be described as belonging to the pigment category “azurite” (even if there is a small quantity of, for example, a white, black, or other-colored pigment), while an area containing a fairly equal mixture of azurite and lead white might be described as “azurite/white” when the amount of white present begins to noticeably alter the spectrum. As a result, the training dataset incorporates the effects of variations in paint layer thicknesses and mixtures that incorporate white pigments (lead white, chalk, etc). The only paint mixture excluded in the training dataset is that of the flesh. The omission of the flesh tones was done purposely as they represent a small area of the paintings and their composition is known to differ among the artists who painted each painting used for the training [34].

Fig. 2 Example of building the training datasets. a Regions of interest selected in The Nativity with the Annunciation to the Shepherds, Master of the Dominican Effigies, c. 1340, National Gallery of Art Miniatures 1975, no. 7, Rosenwald Collection. b The spectra of the brown ochre class collected from all four paintings showing the spectral variability. The black dotted line is the average spectrum for brown ochre

Figure 2a displays a representative image indicating the locations from which reflectance spectra were extracted from one of the paintings, The Nativity with the Annunciation to the Shepherds. Selected areas were not averaged; each spectrum was treated as an individual feature. In total, 25 classes (paints) were identified. These classes consisted of both “pure” pigments (where “pure” describes paints whose spectra are dominated by one pigment) and “mixed” pigments (where two pigments contribute to the spectral signature). The mean spectra of all classes can be seen in Additional file 1: Figure S2, and represent the diversity of pigments and pigment mixtures observed in these paintings. A total of more than 300,000 individual spectra were collected across all four paintings.

Since not all pigments or mixtures are used equally abundantly, several classes yielded only a limited number of samples (e.g. 40 green earth vs. 61,092 azurite samples). For the model to formulate general rules and not over-train on the larger classes, the training data were reduced to 16,683 spectra with the number of samples per class more evenly distributed. This was accomplished by iteratively removing similar spectra (based on Euclidean distance as a measure of similarity) in order to conserve the variability in the training spectra. Even though other distance measures (e.g. Mahalanobis, Hausdorff, spectral angle, etc.) could have been used, Euclidean distance is a common metric for assessing spectral similarity in hyperspectral imagery and is used here. Visualization of the reduced number of spectra vs. all the spectra for a given class showed sufficient variability to justify this approach. However, for other paintings or sets of pigments these other distance measures could be considered to improve separability and will be addressed in future work. Specifically, for each class with more than 1000 spectra, a spectrum was selected at random, and the 100 spectra most similar to the chosen spectrum were removed from the class. This was repeated until each large class was reduced significantly. Class sizes, model accuracy and labels can be seen in Additional file 1: Table S1. Note the lower accuracy of “Ochre yellow”. This is probably due to the similarity in spectra between yellow and orange ochre (see the average spectra of both in the top left plot in Additional file 1: Figure S2). Figure 2b displays the reduced number of spectra of brown ochre; the dotted line shows the average of all plotted spectra. The spectral variability within this pigment can clearly be seen in the plot. The one distinct outlier visible, with higher reflectance from 400 to 550 nm, was probably mislabeled in the originally collected training spectra. Cases such as this one, where one or more spectra in the training data may be incorrectly identified as belonging to a given pigment category, are due to the method used to extract spectra for the training data, wherein spectra from related areas were assigned the same pigment category label. The mean spectrum of each pigment category is plotted in Additional file 1: Figure S2 and corresponds well to the expected reflectance curve of the pigment(s) named in the category label.
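The class-thinning procedure described above can be sketched as follows. The stopping rule is not specified in the text, so the `max_size` target here is an assumption, as are the function name and the choice to keep the randomly selected spectrum while removing its nearest neighbors.

```python
import math
import random

def thin_class(spectra, max_size=1000, n_remove=100, seed=0):
    """Iteratively drop the spectra closest (Euclidean) to a randomly chosen one.

    Assumption: thinning stops once the class holds at most `max_size` spectra.
    """
    rng = random.Random(seed)
    remaining = list(spectra)
    while len(remaining) > max_size:
        anchor = rng.randrange(len(remaining))
        # Rank every other spectrum by its distance to the chosen spectrum.
        others = [i for i in range(len(remaining)) if i != anchor]
        others.sort(key=lambda i: math.dist(remaining[i], remaining[anchor]))
        # Never remove more than needed to reach the target size.
        n = min(n_remove, len(remaining) - max_size)
        drop = set(others[:n])
        remaining = [s for i, s in enumerate(remaining) if i not in drop]
    return remaining

# Toy example: 50 two-band "spectra" thinned to 20, removing 10 neighbors per pass.
toy_spectra = [[float(i), 0.0] for i in range(50)]
thinned = thin_class(toy_spectra, max_size=20, n_remove=10)
```

Removing near-duplicates around a random spectrum, rather than sampling uniformly, tends to preserve rare spectral shapes within a class. This is consistent with the stated aim of conserving variability.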

Performance evaluation of the 1D-CNN model

The degree of success of the 1D-CNN model was evaluated in two ways. The first method was a quantitative model performance evaluation that examines the robustness of the neural network itself. The second provided insight into how well the 1D-CNN model produces accurate labeled pigment maps. This was done by comparing the resulting maps with those generated using the more traditional method (i.e., classification of the same RIS cube using ENVI-SHW followed by labeling the classes in terms of pigments either from RIS spectral features or by fusing the class maps with other data), described in this paper as truth maps.

Quantitative model performance evaluation

The first method validated the performance of the neural network on the training set created from the four paintings using 10-fold cross-validation, with results averaged. k-fold cross-validation is a method for evaluating machine learning models in which the training data are split into k groups. The 1D-CNN is trained on k-1 groups and tested on the hold-out group. This is repeated for all k groups and the results are averaged to produce a less biased estimate of the model’s performance [43]. To score each of the k models, mean-per-class accuracy was used. This metric, used when training data are unbalanced (classes with different amounts of training data), reports the average of the accuracies obtained for each class, thus giving equal weight to each class and preventing larger classes from dominating the results. The mean-per-class accuracy of each of the 10 models created during cross-validation was then averaged to give the final model performance.
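The evaluation scheme can be sketched in a few lines. The fold-splitting helper and the toy labels below are illustrative assumptions and do not reproduce the authors' code; the classifier itself is left out.

```python
import random

def mean_per_class_accuracy(y_true, y_pred):
    """Average of the per-class accuracies, so every class counts equally."""
    correct, total = {}, {}
    for t, p in zip(y_true, y_pred):
        total[t] = total.get(t, 0) + 1
        correct[t] = correct.get(t, 0) + (t == p)
    return sum(correct[c] / total[c] for c in total) / len(total)

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train, test) index lists; each sample is held out exactly once."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, folds[i]

# Toy unbalanced example: 8 azurite spectra and 2 green earth spectra.
y_true = ["azurite"] * 8 + ["green earth"] * 2
y_pred = ["azurite"] * 8 + ["azurite", "green earth"]
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
per_class = mean_per_class_accuracy(y_true, y_pred)
```

Here one of the two green earth spectra is misclassified. The overall accuracy is barely affected, whereas the mean-per-class accuracy drops markedly, which is why the latter is the more informative metric for unbalanced classes.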

The overall mean per-class accuracy (averaged across the 10-fold cross validation results) for the 1D-CNN was 98.7%. Results for each pigment or mixture class can be seen in the Additional file 1: Table S1. Model performance based on this metric shows very good results for all classes.

Comparison of 1D-CNN pigment labeled maps versus truth maps

After training, the 1D-CNN model was applied first to the Pentecost, Fig. 3a, another painting from the Laudario of Sant’Agnese, the same illuminated book from which the paintings used to create the training dataset were obtained. The output of the 1D-CNN consists of 25 maps, one for each of the pigment classes in the training dataset. The intensity at each pixel in a given map is the probability of a match between the RIS spectrum at that spatial pixel and the pigment class as determined by the 1D-CNN model. Each of the labeled pigment maps was thresholded to 0.99 or greater probability to construct the composite pigment labeled map in Fig. 3d. This reduced the number of pigment-labeled classes from the possible 25 to 13. A high threshold of 0.99 was chosen to reduce the number of false-positive assignments. In the final composite pigment labeled map, the classes are color coded and labeled as shown in Fig. 3b. The black background represents spatial pixels where none of the 25 labeled pigment classes had a probability at or above 0.99. Inspection of the composite map and color image reveals that not all of the pixels were assigned to a pigment class. Decreasing the threshold from 0.99 to 0.85, as shown in Additional file 1: Figure S3, did assign unclassified areas to the correct pigments, but at the expense of increased false positive identifications (e.g. parchment classified as lead tin yellow). As noted, the areas of flesh were not included in the training datasets, thus no labels were assigned to the flesh. Nevertheless the majority of the painted areas have been assigned to a labeled pigment class.
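The construction of a composite map from per-class probability maps can be sketched as below. The data layout (`prob_maps[class][row][col]`), the `-1` value for unassigned pixels, and the use of the single most probable class per pixel are assumptions made for illustration.

```python
def composite_map(prob_maps, threshold=0.99, unassigned=-1):
    """Label each pixel with its most probable class if that probability
    reaches the threshold; otherwise leave it unassigned (black background)."""
    n_classes = len(prob_maps)
    n_rows, n_cols = len(prob_maps[0]), len(prob_maps[0][0])
    labels = []
    for r in range(n_rows):
        row = []
        for c in range(n_cols):
            probs = [prob_maps[k][r][c] for k in range(n_classes)]
            best = max(range(n_classes), key=probs.__getitem__)
            row.append(best if probs[best] >= threshold else unassigned)
        labels.append(row)
    return labels

# Toy 1 x 3 image with two classes (illustrative probabilities only).
prob_maps = [
    [[0.995, 0.90, 0.004]],  # class 0
    [[0.005, 0.10, 0.996]],  # class 1
]
strict = composite_map(prob_maps, threshold=0.99)
relaxed = composite_map(prob_maps, threshold=0.85)
```

In the toy image the middle pixel is left unassigned at the strict threshold and is labeled once the threshold is lowered. This mirrors the trade-off described above between unclassified pixels and false-positive assignments.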

Fig. 3 Comparison of pigment labeled maps. a Color image of Master of the Dominican Effigies, Pentecost, about 1340, The J. Paul Getty Museum, Los Angeles, Ms. 80, verso. Digital image courtesy of the Getty’s Open Content Program. b Table of pigment labels for the truth map (refer to Additional file 1: Table S2) and the 1D-CNN map. c Truth pigment map. d 1D-CNN map

The composite color coded pigment labeled map of the Pentecost obtained using the traditional methods, the truth map, is shown in Fig. 3c, and the labeled pigments found in these classes are given in the first column of Fig. 3b. A detailed table summarizing the information used to identify the pigments in the spectral classes found using the ENVI-SHW is given in Additional file 1: Table S2. The colors of the labeled classes were chosen to roughly represent the color of the actual paint. The 1D-CNN model’s color composite map, displayed in Fig. 3d, uses the same color as the truth map wherever the pigments are the same, as can also be seen in the second column of Fig. 3b. Comparing Fig. 3c, d (or the two columns of Fig. 3b) shows that the 1D-CNN model correctly labeled the pigments in most of the paints. For example, the paints dominated by a single pigment (azurite, lead tin yellow, gold, ochres, red lead, vermilion, green earth and red lake) were all correctly labeled.

For mixed pigments the 1D-CNN model provided both correct and some incorrect assignments. The 1D-CNN model correctly labeled pixels when the degree of saturation of a color varied over a fairly large range, for example the high and medium saturated blue robes. In both colors, the same primary pigment, azurite, was used but mixed with varying amounts of lead white. For the two areas where ultramarine and azurite were used together, the lighter portion of the dome directly above Mary and the lighter blue robe of the apostle in the bottom right, the 1D-CNN model correctly labeled only the lighter portion above Mary, not the very pale (unsaturated) robe. Interestingly, in the light blue robe of the apostle at the bottom right of Fig. 3d, the model assigned a small feature represented by only a handful of spatial pixels to the “Red lake” pigment category (shown in pink in Fig. 3d), which at first glance appears as though it might be a misclassification. However, after further visual investigation, this allocation was confirmed: in the areas classified as “Red lake,” reflectance spectra do indeed indicate that an organic red colorant may be present as a layer over the blue and lead white mixture to render the shadow folds in the robe.

The green paints of the robes proved the most challenging for the 1D-CNN model. The truth map as well as magnified examination of the painting shows a yellow-green base layer onto which a deeper green paint was layered, which helps define the three-dimensional shape of the green-robed figure at bottom center. The yellow-green base paint was found to be a mixture of lead tin yellow (type II), ultramarine, and likely a copper-containing green pigment (see Additional file 1: Table S2), and the deeper green a mixture of lead tin yellow with an unknown copper green. Neither of these mixtures is present in the training dataset; however, visual inspection of the mean spectra of the yellow-green paints in the dataset indicates that the best spectral match would be with lead tin yellow mixed with azurite, due to the weak reflectance maximum at \(\sim 730\,\hbox {nm}\).

There are two other small details where the 1D-CNN provided pigment labels which prompted further investigation. These are illustrated in Fig. 4. The first concerns the left vertical portion of the red border. The top, right, and bottom part of the red outer border show a sharp inflection point at 564 nm, indicative of red lead. The RIS spectrum of the left vertical border (as pointed out by the green bifurcated arrow in Fig. 4a) shows a sharp inflection at 558 nm consistent with red lead, although blue shifted, but it also shows a weak reflectance peak at approximately 740 nm and rising reflectance starting at 850 nm.
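The inflection positions quoted above can be estimated numerically as the wavelength at which the first derivative of the reflectance spectrum is largest. The following is a minimal sketch under that assumption, not the procedure used in this study; the function name `inflection_wavelength`, the search window, and the synthetic S-shaped test spectrum are all hypothetical.

```python
import numpy as np

def inflection_wavelength(wavelengths_nm, reflectance, window=(500.0, 650.0)):
    """Return the wavelength (nm) of the steepest rise in reflectance
    inside `window`, used here as a proxy for the inflection point."""
    wl = np.asarray(wavelengths_nm, dtype=float)
    refl = np.asarray(reflectance, dtype=float)
    slope = np.gradient(refl, wl)                 # first derivative dR/d(lambda)
    inside = (wl >= window[0]) & (wl <= window[1])
    idx = np.argmax(np.where(inside, slope, -np.inf))
    return wl[idx]

# Synthetic S-shaped spectrum (an assumption, not measured data):
# 209 bands from 400 to 950 nm with a transition edge centred at 564 nm.
wl = np.linspace(400.0, 950.0, 209)
spectrum = 0.05 + 0.6 / (1.0 + np.exp(-(wl - 564.0) / 8.0))
edge = inflection_wavelength(wl, spectrum)
```

The estimate is only as fine as the band spacing (about 2.6 nm for 209 bands over this range), so a shift of a few nanometers, such as 564 nm versus 558 nm, sits close to the resolution limit of such a simple estimator.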

These results suggest the presence of a second pigment along the red outer border, although assignment by RIS alone is not possible. The 1D-CNN model recognized a difference between the left edge and the other sides of the red outer border, although it labeled the left edge as ochre rather than as red lead with azurite. Inspection of the copper (Cu) elemental distribution map obtained from XRF mapping shows that copper is associated with the blue azurite inner border, as shown in Fig. 4c. On the border’s left edge, copper is present in a wider line than what is currently visible in the color image, which indicates that azurite is present below the left portion of the red outer border. Visual inspection of the color image shows some blue paint is just visible at the top edge of the border (green arrow) (Fig. 4b). Thus, while not correctly assigning the pigments (since this combination of red lead and azurite was not in the training dataset), the 1D-CNN model did assign the most logical pigment based on the RIS features, and correctly noted the distinction between this area and the remainder of the red lead border.

The second detail of interest is the shadowed side of the white square spire (Fig. 4d–f), which appears as a light gray blue in the color image and was labeled as “indigo” by the 1D-CNN model, shown in teal in the detail in Fig. 4d. This area appears to actually contain a small amount of a copper-containing pigment (likely azurite, since the area has a blue-gray cast), as suggested by the copper distribution obtained from XRF mapping (Fig. 4f). This shadowed area was missed in the classification step for the truth map. Spectra from this area have an overall lower reflectance (by a factor of 2) and weak absorption features that suggest a small amount of earth pigment was additionally added to the white. Taken together, the RIS and XRF data suggest that the area may actually be a complex mixture of lead white, ochre, and trace amounts of azurite. This three-part mixture is not in the training set, so although the shadowed side of the spire was incorrectly ascribed to the indigo class, the 1D-CNN model distinguished a difference between this area and the rest of the white spire.

To further test the robustness of the 1D-CNN model, a second painting was analyzed: Saint Peter Enthroned, c. 1345/1350, which comes from a Choir Book (Gradual) series painted by Lippo Vanni. Vanni, while from Siena rather than Florence, is likely to have been familiar with the painting techniques and pigments used by the Florentine artists who did the paintings for the Laudario of Sant’Agnese.

Comparison of pigment labeled maps. a Color image of Lippo Vanni, Saint Peter Enthroned, 1345/1350, National Gallery of Art, Rosenwald Collection, Washington. b Table of pigment labels for the truth map (refer to Additional file 1: Table S3) and the 1D-CNN map. c Truth pigment map. d 1D-CNN map

As in the case of the Pentecost, a pigment-labeled truth map was constructed by first creating classification maps based on RIS spectra (400 to 950 nm) using the ENVI-SHW algorithm and then fusing results from point analysis methods in order to turn the classification maps into labeled pigment maps (see Additional file 1: Table S3 for details). The 1D-CNN model was applied to Saint Peter Enthroned to determine the model’s generalizability to a painting not in the Laudario, but which is expected to contain similar materials. The reference color image, truth and 1D-CNN composite maps along with the color-coded pigment labels are given in Fig. 5. The data demonstrate that the paints dominated by a single pigment were correctly identified even when lead white was present. Specifically, the areas containing azurite, lead white, vermilion, and red lake were all correctly labeled. The areas of gold leaf, and the areas of exposed bole where the gold leaf is gone, were also correctly identified as gold and ochre (the primary coloring material of the clay bole underneath the gold), respectively. The 1D-CNN model incorrectly labeled the yellow as lead tin yellow, whereas the truth pigment map indicates that a yellow lake is present; yellow lakes, however, are not present in the training dataset. The truth map shows the dark modeling of the richly decorated red cloth over St. Peter’s throne was painted with vermilion while the lighter parts were painted with a mixture of vermilion and red lead. The 1D-CNN model correctly labeled the vermilion. However, the mixture was labeled as containing only red lead: the mixture was not in the training set, and these areas had sufficient red lead character to differentiate them from pure vermilion.

There are three sets of mixed pigments in Saint Peter Enthroned, two greens and an orange-red. As shown in the truth map (Fig. 5c), the green paints are made from a yellow lake with azurite, denoted with a lighter green, and from a yellow lake, azurite, and indigo for the cooler, darker green. The 1D-CNN model (Fig. 5d) returned two greens composed of a yellow mixed with a blue pigment, and the model returned the correct blue pigment in both cases. However, since no mixture of a yellow lake with these two blue pigments existed in the training data, the model gave as the best match lead tin yellow mixed with the specific blue pigment. This is not surprising as the spectral shape is dominated by the blue pigment present. The labeled composite truth map shows that the red border contains a mixture of red lead and vermilion, just like the lighter red portion of the cloth over the throne. The 1D-CNN model correctly identified these two pigments individually in the border, identifying primarily red lead on the right side of the image, and vermilion on the far left. The model did not classify them as a mixture since there was no mixed red lead and vermilion class in the model. This result reinforces the notion that identification from the model can only be as exact as the training data. As such, these results will always need to be presented with some indication as to the limits of interpretability. However, as more paintings are studied, the training set can be augmented to develop a more robust solution.

Discussion

The objective of this research was to determine if a data-driven, rather than a physics-based, solution to pigment labeling of reflectance spectra would be sufficient. The motivation for testing this was not that physics-based solutions to intimate mixing are not robust enough, but rather that obtaining the information needed to implement the physics models is challenging due to the complexity of paintings. Specifically, obtaining the optical properties of the pigments used, determining the optical thickness of the paint layers present, and accounting for the possibility of glazes over the paint layers all pose difficulties. While destructive micro sampling can provide such information, even well-studied paintings are sparsely sampled and no robust non-invasive methods currently exist to obtain these parameters across the surface of a painting. The data-driven solution explored here gets around this problem but requires a large pigment-labeled dataset, as all learning frameworks do.

The approach taken here is to constrain the size of the training datasets by developing them for specific artistic schools, which are often defined by a rough set of pigments, a subset of mixtures, and more specific painting processes. That is, only subsets of mixtures and layering are expected within these schools instead of all possible combinations. Finally, rather than attempting to build a robust training dataset by making contemporary paint-outs, the central idea is to utilize well-characterized historic paintings to define a number of classes and encompass the needed diversity, while simultaneously ensuring the use of historic pigments, supports (such as parchment), and painting grounds. This approach is inherently attractive because it leverages the large amount of existing scientific data on particular paintings, suggesting that enough truth information is available to allow the creation of appropriate training datasets for a number of artistic schools. Where the predictions of labeled spectra break down using this approach, the dataset can be refined, but the “failures” are likely new areas worthy of further study.

The limitations of this approach are twofold. First, it cannot be expected to work well on all paintings, given that many exist at the boundaries between schools of artistic practice. However, the understanding of a given artist or artistic school does not require a rigorous understanding of each set of work in the school, but more often the common elements between them and where they differ. The approach proposed here, even with its limitations, is consistent with these goals. The second limitation is that while a physics-based model can give concentrations of the pigments, the data-driven approach to directly label pigments proposed here will not. Nor will the proposed data-driven approach provide quantitative information about the mixtures present nor find all the pigments present. Physics-based models are better suited for these goals. However, in the conservation and art historical fields there has been limited need for such detailed information, except for a small class of paintings where the degradation over time has resulted in large imbalances in the color appearance of specific pigments. In these cases, the focus is typically on getting quantitative information for one or two specific pigments based on highly detailed studies, not of the whole painting. For the vast majority of studies, then, the lack of quantitative data is unlikely to be a hindrance to uptake of the method.

In this paper, four well-studied paintings from the same book, which could be well described by 25 pigment-labeled classes, were sufficient to build the training dataset for the proposed data-driven analysis of RIS image cubes of \(14{\mathrm{th}}\) century paintings found in illuminated books from the early Italian Renaissance by artists in or near Florence. The trained 1D-CNN model was then applied to a painting from the same book that contained the four paintings used for training, as well as to a painting from a different book by an artist who worked outside of Florence, in Siena. Results were encouraging when compared with the truth maps, which were obtained by labor-intensive analysis by expert users. This makes the approach likely to become a valuable addition to the workflow of museum-based scientists and conservators.

For both of the tested paintings, the 1D-CNN model correctly labeled all but one of the classes in which a single pigment dominated the reflectance spectra. Of the 28 truth pigment allocations across both paintings (17 in the Pentecost and 11 in the Saint Peter Enthroned), the 1D-CNN model correctly identified 19. However, of the 9 incorrectly classified pigments/mixtures, 7 were not part of the training dataset. When these incorrect (but not surprising) classifications are discounted, the model misclassified only 2 classes. These results point to a limitation of the 1D-CNN model, as with most artificial intelligence models: when it encounters a pigment on which it was not trained, for example the yellow lake that was labeled as lead tin yellow, the model fails. The model handles well cases where pure pigments were mixed with varying amounts of lead white, and thus appears to be robust in situations when the saturation is varied, so long as these cases are in the training data. The model also handles the combination of ultramarine and azurite except for the case when the pigment concentration was low and the color very light. Between the two paintings there were four green paints made from mixtures. In each case, the 1D-CNN model labeled the greens only partially correctly, owing to not having the right mixtures in the training data. Overall, the 1D-CNN model handled mixtures it was trained for well, and found matches that were spectrally reasonable for those it was not trained for. This illustrates both a benefit and a risk. While the risk is clear (like all data-driven models, there is a chance for model predictions to be wrong), the benefit is less obvious, but is particularly valuable in the field of art analysis. Fundamentally, incorrect assignments provide a starting point for improving the training data, but they also provide an incentive for further research. In this work, a case where the model was wrong, assigning an ochre where red lead was expected in the red outer border in the Pentecost, caused us to look more closely at this area, making close comparisons to other data and reexamining the painting itself, to find that the left edge of the border was painted over what was an “error” made by the artist while painting the inner azurite blue border. Hence such errors can be informative, particularly in cases where the examination of an object with RIS is the first step of several analysis methods. This is, indeed, the case in many cultural heritage studies.

Improving the training dataset is an iterative process and one that would improve the performance here. The challenge is to look at more complex mixtures and layers that arise from the inherent variability of artists’ technique – including idiosyncratic mixtures and/or layering methods that one artist may apply – which may exclude the possibility of ever having a training set that can encompass all possible pigment mixtures. For example, the artists considered in this work employ different pigments and/or subtly different methods of painting flesh tones, depending on medium (e.g. painting on parchment vs. panel paintings), size, and/or moment in their artistic development; the extent to which this technique varies object-to-object and artist-to-artist continues to be a subject of study [44, 34]. The expected variation of flesh tone painting techniques suggests that, even within a single artistic tradition, some natural variability may make some paint compositions more difficult to identify than others, directly linked to whether an example is present in the training data. As noted in the results section, three-component mixtures similarly find use, and the lack of them in the training data provided one limit to the overall accuracy of the 1D-CNN predictions. Therefore, adding more unique mixtures will undoubtedly improve the results of the model, for example by adding examples of the flesh tones represented in the manuscripts studied here.

To extend the model beyond this artistic tradition, of course, will require additional extensions: for example, a similar model could reasonably be built to examine \(19{\mathrm{th}}\) or \(20{\mathrm{th}}\) century oil paintings, but would necessarily require a different training dataset, and be subject to the same challenges as demonstrated here for \(14{\mathrm{th}}\) century illuminated manuscript paintings.

Even without these extensions, however, the potential to rapidly classify pigments in a collection of works of art from the same painter (or painters from the same general era and style), based on a model trained on a few well-characterized paintings, creates the opportunity for classification and analysis of an entire collection within a short period of time and with less need for a trained expert user to supervise the initial labeling process of an unknown painting. As such, the 1D-CNN model provides an excellent first-pass analysis to help guide the researcher, and/or identify areas deserving of more focused study by an expert user or which will require additional detailed analysis by other analytical techniques. Examples of this kind of highlighting of areas of interest include the initially painted portion of the border and the shading of the spires in the Pentecost from the Laudario. This application of a 1D-CNN model, therefore, is expected to help conservators and conservation scientists more rapidly evaluate the materiality of objects under their care, allowing more rapid decision-making with respect to treatment and preservation options, as well as identifying areas of ongoing interest or concern.

Materials and methods

Reflectance imaging spectroscopy data collection

The RIS data used for creating the training dataset consisted of 209 spectral bands that ranged from the visible to near infrared (400 to 950 nm). The resulting image cube (2 spatial and 1 spectral dimension) was calibrated to apparent reflectance by subtracting a dark image from the collected reflectance data in digital counts, and dividing the result by the illumination irradiance. The reflectance spectrum at each pixel was used as the input features (model input data) to the 1D-CNN model.
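The calibration step described above can be sketched as follows. This is a minimal illustration rather than the acquisition software used in the study: the function name `to_apparent_reflectance` is hypothetical, and the illumination term is assumed to be available per band in dark-corrected digital counts (for example from a diffuse white reference), which the text does not specify.

```python
import numpy as np

def to_apparent_reflectance(raw_counts, dark_counts, illumination):
    """Calibrate a cube of shape (rows, cols, bands) to apparent reflectance.

    raw_counts   : measured scene signal in digital counts
    dark_counts  : dark image (same shape, or broadcastable to it)
    illumination : per-band illumination term, assumed to be expressed in
                   dark-corrected counts (hypothetical input format)
    """
    raw = np.asarray(raw_counts, dtype=float)
    dark = np.asarray(dark_counts, dtype=float)
    illum = np.asarray(illumination, dtype=float)
    safe_illum = np.where(illum > 0, illum, np.nan)   # avoid division by zero
    return (raw - dark) / safe_illum

# Toy cube: 2 x 3 pixels, 209 bands, every pixel reflecting 40 % of the light.
bands = 209
illum = np.linspace(1000.0, 3000.0, bands)
dark = np.full((2, 3, bands), 100.0)
raw = 0.4 * illum + dark
cube = to_apparent_reflectance(raw, dark, illum)

# One spectrum (feature vector of length 209) per spatial pixel.
features = cube.reshape(-1, bands)
```

Flattening the two spatial dimensions, as in the last line, yields one 209-element spectrum per pixel, which is the form in which spectra are passed to a purely spectral classifier.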

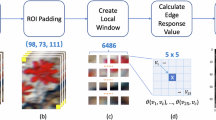

Network architecture

The 1D-CNN consists of 4 hidden layers. The architecture is displayed in Fig. 6. This was chosen after experimentation with different numbers of hidden layers, filters and kernel sizes did not produce better results. Exhaustive modelling was not done due to the very high model accuracy achieved with the chosen parameters. The input layer receives the initial data, which are the individual (labeled) spectra collected from the studied paintings. The first two hidden layers are 1D convolutional layers with 64 and 32 filters and kernel sizes of 5 × 5 and 3 × 3, respectively. These are followed by max pooling, where the hidden layers are down-sampled to reduce their dimensionality, keeping the maximum output over every two features. Two fully connected (dense) layers of sizes 100 and 25 form the last two hidden layers. Each hidden layer uses the rectified linear unit (ReLU) activation function \(f(x) = \max (0,x)\), thus retaining only the positive part of its input. The final output activation function, Softmax, converts the output values to probabilities between 0 and 1 with \(f(s)_i = \frac{e^{s_i}}{\sum _j^C e^{s_j}}\), where \(s_i\) is the score inferred by the neural net for each class in C. For this study, \(C = 25\).
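To make the data flow through these layers concrete, the following NumPy sketch runs one forward pass with random, untrained weights. It is an illustration under stated assumptions, not the trained Keras model: the kernel lengths are taken as 5 and 3 along the spectral axis, convolutions are unpadded with stride 1, the pooling size is 2, and the 25-unit dense layer is treated as the Softmax output. All function and parameter names are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def softmax(scores):
    e = np.exp(scores - np.max(scores))   # subtract max for numerical stability
    return e / e.sum()

def conv1d(x, w, b):
    """Unpadded 1D convolution (cross-correlation, as in deep learning libraries).
    x: (length, in_channels), w: (kernel, in_channels, filters), b: (filters,)."""
    k = w.shape[0]
    n_out = x.shape[0] - k + 1
    out = np.empty((n_out, w.shape[2]))
    for i in range(n_out):
        # contract the (kernel, in_channels) window against the filter bank
        out[i] = np.tensordot(x[i:i + k], w, axes=([0, 1], [0, 1])) + b
    return out

def max_pool1d(x, size=2):
    n = (x.shape[0] // size) * size       # drop a trailing odd element
    return x[:n].reshape(-1, size, x.shape[1]).max(axis=1)

def init_params(n_bands=209, n_classes=25, seed=0):
    rng = np.random.default_rng(seed)
    flat = ((n_bands - 5 + 1 - 3 + 1) // 2) * 32   # length after conv, conv, pool
    def w(*shape):
        return rng.normal(0.0, 0.05, shape)
    return {
        "conv1": (w(5, 1, 64), np.zeros(64)),
        "conv2": (w(3, 64, 32), np.zeros(32)),
        "dense1": (w(flat, 100), np.zeros(100)),
        "dense2": (w(100, n_classes), np.zeros(n_classes)),
    }

def forward(spectrum, params):
    x = np.asarray(spectrum, dtype=float)[:, None]   # (209, 1)
    x = relu(conv1d(x, *params["conv1"]))            # (205, 64)
    x = relu(conv1d(x, *params["conv2"]))            # (203, 32)
    x = max_pool1d(x, 2)                             # (101, 32)
    x = x.reshape(-1)                                # (3232,)
    x = relu(x @ params["dense1"][0] + params["dense1"][1])   # (100,)
    scores = x @ params["dense2"][0] + params["dense2"][1]    # (25,)
    return softmax(scores)

probs = forward(np.linspace(0.1, 0.9, 209), init_params())
predicted_class = int(np.argmax(probs))
```

With untrained weights the predicted class is meaningless; the point is only the sequence of shapes and that the output is a valid probability vector over the 25 classes.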

The performance of the model was measured with the categorical cross-entropy loss (log loss) function, defined as \(CE = -\sum _i^C t_i \log (s_i)\), where \(t_i\) is the ground truth (label) and \(s_i\) the score of the model for each class. For the categorical cross-entropy loss calculation (compared to binary cross-entropy), each label was coded as a one-hot vector since the neural network requires the label to be numeric. A one-hot vector is a zero vector the length of the number of classes, with the class represented as a 1 at the specific label number.
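As a small worked example of the loss defined above, the sketch below encodes a label as a one-hot vector and evaluates the categorical cross-entropy for two hypothetical predictions over 25 classes. The helper names and the example probabilities are illustrative assumptions.

```python
import numpy as np

def one_hot(label, n_classes=25):
    """Zero vector of length n_classes with a 1 at index `label`."""
    t = np.zeros(n_classes)
    t[label] = 1.0
    return t

def categorical_cross_entropy(t, s, eps=1e-12):
    """CE = -sum_i t_i * log(s_i) for a one-hot target t and probabilities s."""
    s = np.clip(np.asarray(s, dtype=float), eps, 1.0)   # guard against log(0)
    return float(-np.sum(np.asarray(t) * np.log(s)))

target = one_hot(3)

uniform = np.full(25, 1.0 / 25)          # uninformed prediction
confident = np.full(25, 0.004)
confident[3] = 1.0 - 24 * 0.004          # 0.904 on the correct class

loss_uniform = categorical_cross_entropy(target, uniform)      # equals ln(25)
loss_confident = categorical_cross_entropy(target, confident)  # equals -ln(0.904)
```

Because the target is one-hot, only the probability assigned to the true class contributes, so the loss reduces to the negative log of that single probability.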

The model was trained with a batch size of 50 and evaluated on a validation set of \(10\%\) of the training data. The training started with a learning rate of 0.01, which was decreased if the validation loss did not decrease after 4 epochs (an epoch being one cycle through the full training dataset). The number of epochs was set to 30. The model used the stochastic gradient descent optimizer to minimize the loss function. The neural network was coded in Python using TensorFlow’s Keras library [45].
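The learning-rate rule described above (reduce the rate when the validation loss has not improved for 4 epochs) can be sketched in plain Python. The reduction factor is not stated in the text, so the value of 0.1 used here is an assumption, as are the function name `plateau_schedule` and the example losses.

```python
def plateau_schedule(val_losses, initial_lr=0.01, patience=4, factor=0.1):
    """Return the learning rate used in each epoch, given the validation
    loss observed at the end of each epoch.

    `factor` is an assumed reduction factor; the source does not give it.
    """
    lr = initial_lr
    best = float("inf")
    stale = 0                 # epochs since the validation loss last improved
    used = []
    for loss in val_losses:
        used.append(lr)       # rate in effect during this epoch
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                lr *= factor  # plateau detected: lower the rate
                stale = 0
    return used

# Hypothetical validation losses: improvement stalls after the second epoch.
losses = [1.0, 0.9, 0.9, 0.9, 0.9, 0.9, 0.8]
rates = plateau_schedule(losses)
```

In this example the loss stalls for four consecutive epochs after the second one, so the first six epochs run at 0.01 and the seventh at the reduced rate.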

Performance evaluation calculation

The 1D-CNN model accuracy was measured using the individual per-class results from 10-fold cross validation. Thus the overall accuracy of the model was calculated by averaging the mean-per-class results for each of the 10 cross-validation results. The mean-per-class accuracy measure is used when there are unbalanced sets (classes with different volumes of data).
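The difference between overall accuracy and mean-per-class accuracy on unbalanced classes can be seen in a short sketch. The function names and the toy labels are hypothetical; the fold-averaging helper simply averages the per-fold scores, as described above.

```python
import numpy as np

def mean_per_class_accuracy(y_true, y_pred):
    """Average of the per-class recalls, so each class counts equally
    regardless of how many samples it contains."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    per_class = [np.mean(y_pred[y_true == c] == c) for c in np.unique(y_true)]
    return float(np.mean(per_class))

def cross_validated_score(fold_results):
    """Average the mean-per-class accuracy over folds.
    fold_results: iterable of (y_true, y_pred) pairs, one per fold."""
    return float(np.mean([mean_per_class_accuracy(t, p) for t, p in fold_results]))

# Toy unbalanced case: four samples of class 0, one of class 1,
# and a classifier that always predicts class 0.
y_true = [0, 0, 0, 0, 1]
y_pred = [0, 0, 0, 0, 0]
overall = float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))
balanced = mean_per_class_accuracy(y_true, y_pred)
score = cross_validated_score([(y_true, y_pred), (y_true, y_true)])
```

Here the overall accuracy is 0.8 while the mean-per-class accuracy is 0.5, which shows why the latter is the more conservative measure when class sizes differ.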

Three additional classification methods were tested to compare the 1D-CNN model with alternative models commonly used for such datasets, namely 1. a Multilayer Perceptron (MLP) [46], 2. Support Vector Machine with radial basis kernel (SVM) [47], and 3. Spectral Angle Mapper (SAM) to assign the class with the smallest angle between the spectral library and each spectrum in the image. The 1D-CNN outperformed the others by 1.3% (MLP), 2.1% (SAM), and 6.4% (SVM) mean per-class accuracy respectively.
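For reference, the Spectral Angle Mapper assignment rule mentioned above can be sketched as follows: each spectrum is treated as a vector, and the class whose library spectrum subtends the smallest angle with it is selected. The function names and the two synthetic library spectra are illustrative assumptions, not the library used in this study.

```python
import numpy as np

def spectral_angles(spectrum, library):
    """Angles (radians) between one spectrum of shape (bands,) and each
    library spectrum in an array of shape (classes, bands)."""
    spectrum = np.asarray(spectrum, dtype=float)
    library = np.asarray(library, dtype=float)
    cos = library @ spectrum / (
        np.linalg.norm(library, axis=1) * np.linalg.norm(spectrum)
    )
    return np.arccos(np.clip(cos, -1.0, 1.0))

def sam_classify(spectrum, library):
    """Index of the library spectrum with the smallest spectral angle."""
    return int(np.argmin(spectral_angles(spectrum, library)))

# Two synthetic library spectra (assumptions, not measured pigments):
# an S-shaped "red-like" curve and a "blue-like" curve peaking near 470 nm.
wl = np.linspace(400.0, 950.0, 209)
red_like = 0.05 + 0.6 / (1.0 + np.exp(-(wl - 564.0) / 8.0))
blue_like = 0.05 + 0.4 * np.exp(-((wl - 470.0) / 40.0) ** 2)
library = np.stack([red_like, blue_like])

# A darker version of the first spectrum: same shape, half the brightness.
query = 0.5 * red_like
label = sam_classify(query, library)
```

Because the angle depends only on spectral shape, the half-brightness query still matches the first library entry, which is the property that makes SAM relatively insensitive to overall illumination differences.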

Point-based fiber optic reflectance spectroscopy

FORS spectra were collected in a non-contact configuration using an ASD Field Spec3 (Malvern Panalytical) which is sensitive from 350–2500 nm. The collection fiber is oriented at approximately 90 degrees to the painting surface, resulting in a collection spot size approximately 3 mm in diameter, and the illumination source is held approximately 10 cm from the surface at a 45 degree angle. The total acquisition time was less than 6 seconds per spot. The light level was approximately 5000 lux.

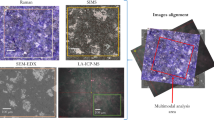

Scanning XRF spectroscopy

Scanning macro-XRF spectroscopy is a non-contact chemical imaging technique which captures information about the elemental composition of a two-dimensional area. In many cases, the pigments, metals, and other materials present in a work of art can be inferred from the elemental composition. Since the technique is X-ray-based, the elemental distributions often capture both surface and sub-surface information simultaneously. In macro-XRF-derived element distribution maps, brighter areas represent higher signal from an element. In this study, scanning XRF spectroscopy was done at the Getty Conservation Institute (GCI) using a Bruker M6 Jetstream (Rh tube, operated at 50 kV/\(400\,\upmu \hbox {A}\), \(450\,\upmu \hbox {m}\) spot size, \(440\,\upmu \hbox {m}\) sampling and a dwell time of 18 ms/pixel). A corrected excitation spectrum of the instrument was measured by Timo Wolff, and data processing utilized the PyMCA and DataMuncher software suites [4, 48, 49]. The total area scanned on the Pentecost (not shown) was \(316 \times 404\) mm; the details in Fig. 4 show spatial subsets of this scan, with histograms stretched to emphasize weak features.

Availability of data and materials

The datasets used and/or analyzed during the current study may be made available from the authors on reasonable request.

Abbreviations

- nm: Nanometer
- XRF: X-ray fluorescence
- RIS: Reflectance imaging spectroscopy
- CNN: Convolutional neural network
- SHW: Spectral hourglass wizard
- ENVI: Environment for visualizing images
- 1D-CNN: One-dimensional convolutional neural network
- Cu: Copper
- ReLU: Rectified linear unit
- MLP: Multilayer perceptron
- SVM: Support vector machine
- SAM: Spectral angle mapper

References

Alfeld M, Janssens K, Dik J, de Nolf W, van der Snickt G. Optimization of mobile scanning macro-XRF systems for the in situ investigation of historical paintings. J Anal Atom Spectrom. 2011;26:899–909.

Delaney JK, Zeibel JG, Thoury M, Littleton R, Palmer M, Morales KM, de la Rie ER, Hoenigswald A. Visible and infrared imaging spectroscopy of Picasso’s Harlequin Musician: Mapping and identification of artist materials in situ. Appl Spectros. 2010;64:584–94.

Dooley K, Conover D, Glinsman L, Delaney J. Complementary standoff chemical imaging to map and identify artist materials in an early Italian Renaissance panel painting. Angewandte Chemie International Edition. 2014;126

Alfeld M, Janssens K. Strategies for processing mega-pixel X-ray fluorescence hyperspectral data: a case study on a version of Caravaggio’s painting Supper at Emmaus. J Anal Atom Spectrom. 2015;30:777–89.

Feret J-B, François C, Asner GP, Gitelson AA, Martin RE, Bidel LP, Ustin SL, le Maire G, Jacquemoud S. PROSPECT-4 and 5: Advances in the leaf optical properties model separating photosynthetic pigments. Remote Sens Environ. 2008;112:3030–43.

Schneider S, Melkumyan A, Murphy RJ, Nettleton E. Gaussian Processes with OAD covariance function for hyperspectral data classification. In: 22nd IEEE International Conference on Tools with Artificial Intelligence (2010), vol 1, pp 393–400.

Verrelst J, Alonso L, Camps-Valls G, Delegido J, Moreno J. Retrieval of vegetation biophysical parameters Using Gaussian process techniques. IEEE Transact Geosci Remote Sens. 2012;50:1832–43.

Mende A, Heiden U, Bachmann M, Hoja D, Buchroithner M. Development of a new spectral library classifier for airborne hyperspectral images on heterogeneous environments. In: Proceedings of the EARSeL 7th SIG-Imaging Spectroscopy Workshop 2011.

Rao NR, Garg PK, Ghosh SK. Development of an agricultural crops spectral library and classification of crops at cultivar level using hyperspectral data. Precis Agric. 2007;8:173–85.

Vishnu S, Nidamanuri RR, Bremananth R. Spectral material mapping using hyperspectral imagery: a review of spectral matching and library search methods. Geocarto Int. 2013;28:171–90.

Clark RN. Spectroscopy of Rocks and Minerals, and Principles of Spectroscopy. In: Andrew RAR, Rencz N, editors. The Oxford Handbook of Innovation. New York: John Wiley and Sons; 1999 chap. 1, 3–58.

Heylen R, Parente M, Gader PD. A review of nonlinear hyperspectral unmixing methods. J Select Topics Appl Earth Observ Remote Sens. 2014;7:1844–68.

Taufique Abu Md Niamul, Messinger DW. Algorithms, technologies, and applications for multispectral and hyperspectral imagery XXV, M. Velez-Reyes. In: Messinger DW (eds) International Society for Optics and Photonics (SPIE), vol 10986, 2019. p. 297–307.

Rohani N, Pouyet E, Walton M, Cossairt O, Katsaggelos AK. Nonlinear unmixing of hyperspectral datasets for the study of painted works of art. Angewandte Chemie. 2018;130:11076–80.

Han Y, Gao Y, Zhang Y, Wang J, Yang S. Hyperspectral sea ice image classification based on the spectral-spatial-joint feature with deep learning. Remote Sens. 2019;11:2170.

Ma L, Liu Y, Zhang X, Ye Y, Yin G, Johnson B. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J Photogram Remote Sens. 2019;152:166–77.

Datasets for Classification. http://lesun.weebly.com/hyperspectral-data-set.html, Accessed: 20 June 2020.

Licciardi GA, Del Frate F. Pixel unmixing in hyperspectral data by means of neural networks. IEEE Transact Geosci Remote Sens. 2011;49:4163–72.

Zhang X, Sun Y, Zhang J, Wu P, Jiao L. Hyperspectral unmixing via deep convolutional neural networks. IEEE Geosci Remote Sens Lett. 2018;15:1755–9.

Wang M, Zhao M, Chen J, Rahardja S. Nonlinear unmixing of hyperspectral data via deep autoencoder networks. IEEE Geosci Remote Sens Lett. 2019;16:1467–71.

Qi L, Li J, Wang Y, Lei M, Gao X. Deep spectral convolution network for hyperspectral image unmixing with spectral library. Signal Processing 2020. p. 107672

L3 Harris Geospatial Solutions, Spectral Hourglass Wizard. https://www.harrisgeospatial.com/docs/SpectralHourglassWizard.html, Accessed: 6 June 2020.

Arvelyna Y, Shuichi M, Atsushi M, Nguno A, Mhopjeni K, Muyongo A, Sibeso M, Muvangua E. IEEE Int Geosci Remote Sens Symposium. 2011;. https://doi.org/10.1109/IGARSS.2011.6049458.

Singh KD. Automated spectral mapping and subpixel classification in the part of Thar Desert using EO-1 satellite Hyperion data. IEEE Geosci Remote Sens Lett. 2018;15:1437–40.

Kleynhans T, Messinger D, Delaney J. Towards automatic classification of diffuse reflectance image cubes from paintings collected with hyperspectral cameras. Microchem J. 2020;157:104934.

Sabetsarvestani Z, Sober Barak, Higgitt Catherine, Daubechies Ingrid, Rodrigues M. Artificial intelligence for art investigation: Meeting the challenge of separating X-ray images of the Ghent Altarpiece. Science Advances. 2019;66:7416.

Kang HR. Kubelka-Munk theory. In: Computational Color Technology. 2006; chap. 16.

Zhao Y, Berns R, Taplin L, Coddington J. An investigation of multispectral imaging for the mapping of pigments in paintings. Proceedings SPIE. 2008;6810

Zhao Y, Berns R, Okumura Y, Taplin L, Carlson C. Improvement of spectral imaging by pigment mapping. Final Program and Proceedings - IS and T/SID Color Imaging Conference 2005.

Fiber optics reflectance spectra (FORS) of pictorial materials in the 270-1700 nm range. http://fors.ifac.cnr.it/, Accessed: 3 June 2020.

Kiranyaz S, Avci O, Abdeljaber O, Ince T, Gabbouj M, Inman Daniel J. 1D convolutional neural networks and applications: A survey 2019. arXiv preprint arXiv:1905.03554

Mounier A, Daniel F. Hyperspectral imaging for the study of two thirteenth-century Italian miniatures from the Marcadé collection, Treasury of the Saint-Andre Cathedral in Bordeaux, France. Stud Conserv. 2015;60:S200–9.

Delaney JK, Dooley KA, Facini M, Gabrieli F. MANUSCRIPTS in the MAKING: Art and Science (Brepols Publishers, Fitzwilliam Museum in association with the Departments of Chemistry and History of Art University of Cambridge 2018.

Patterson Schmidt C, Phenix A, Trentelman K. Scientific investigation of painting practices and materials in the work of Pacino di Bonaguida. In: Sciacca C, editor. Florence at the dawn of the Renaissance: Painting and illumination 1300–1350. Los Angeles: Getty Publications; 2012. p. 361–71.

Aceto M, Agostino A, Fenoglio G, Idone A, Gulmini M, Marcello P, Ricciardi P, Delaney J. Characterisation of colourants on illuminated manuscripts by portable fibre optic UV-visible-NIR reflectance spectrophotometry. Anal Methods. 2014;. https://doi.org/10.1039/C3AY41904E.

Burgio L, Clark RJH, Hark RR. Raman microscopy and X-ray fluorescence analysis of pigments on medieval and Renaissance Italian manuscript cuttings. Proceedings of the National Academy of Sciences. 2010;107:5726–31.

Pallipurath A, Ricciardi P, Rose-Beers K. ’It’s not easy being green’: A spectroscopic study of green pigments used in illuminated manuscripts. Anal Methods. 2013;5(16):3819–24.

Melo M, Nabais P, Guimarães da Silva M, Araújo R, Castro R, Oliveira M, Whitworth I. Organic dyes in illuminated manuscripts: A unique cultural and historic record. Philosoph Transact Royal SoC A Mathemat Phys Eng Sci. 2016;374:20160050.

Aceto M, Arrais A, Marsano F, Agostino A, Fenoglio G, Idone A, Gulmini M. A diagnostic study on folium and orchil dyes with non-invasive and micro-destructive methods. Spectrochim Acta A Mol Biomol Spectrosc. 2015;142:159–68.

Kanter L, Drake Boehm B, Brandon Strehlke C, Freuler G, Mayer Thurman C, Palladino P. Painting and Illumination in Early Renaissance Florence 1300–1450 New York: Metropolitan Museum of Art 1994.

Keene B. New discoveries from the Laudario of Sant’Agnese. Getty Res J. 2016;8:199–208.

Keene B. Pacino di Bonaguida: A critical and historical reassessment of artist, oeuvre, and choir book illumination in Trecento Florence. Immediations 4(4) (2019).

Fushiki T. Estimation of prediction error by using K-fold cross-validation. Stat Comput. 2011;21:137–46.

Szafran Y, Turner N. Techniques of Pacino Di Bonaguida, Illuminator and Panel Painter. In: Sciacca C, editor. Florence at the Dawn of the Renaissance: Painting and Illumination 1300–1350. Los Angeles: Getty Publications; 2012. p. 335–55.

Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, Corrado GS, Davis A, Dean J, Devin M, Ghemawat S, Goodfellow I, Harp A, Irving G, Isard M, Jia Y, Jozefowicz R, Kaiser L, Kudlur M, Levenberg J, Mané D, Monga R, Moore S, Murray D, Olah C, Schuster M, Shlens J, Steiner B, Sutskever I, Talwar K, Tucker P, Vanhoucke V, Vasudevan V, Viégas F, Vinyals O, Warden P, Wattenberg M, Wicke M, Yu Y, Zheng X. TensorFlow: Large-scale machine learning on heterogeneous systems. 2015. Software available from tensorflow.org.

Shivarudhrappa R, Sriraam N. Optimal configuration of multilayer perceptron neural network classifier for recognition of intracranial epileptic seizures. Expert Sys App. 2017;89:205–21.

Amari S, Wu S. Improving support vector machine classifiers by modifying kernel functions. Neural Networks. 2001;12:783–9.

Solé V, Papillon E, Cotte M, Walter P, Susini J. A multiplatform code for the analysis of energy-dispersive X-ray fluorescence spectra. Spectrochim Acta B Atom Spectros. 2007;62:63–8.

Wolff T, Malzer W, Mantouvalou I, Hahn O, Kanngießer B. A new fundamental parameter based calibration procedure for micro X-ray fluorescence spectrometers. Spectrochim Acta B Atom Spectros. 2011;66:170–8.

Acknowledgements

We thank Paola Ricciardi, Lisha Glinsman, Kathryn Morales, and Michelle Facini for XRF data collection and analysis of the NGA paintings. We would also like to thank Nancy Turner and Bryan Keene (Getty Museum) for helpful discussions.

Funding

This work was supported in part by the Rochester Institute of Technology, College of Science, Dean’s Research Initiation Grant (TK), the National Gallery of Art (JKD, KAD), and the Getty Conservation Institute (CMSP).

Author information

Contributions

TK and JKD conceived the research. TK developed, programmed, trained, and tested the neural network and automatic spectral angle mapping algorithms. JKD, CMSP and KAD collected the RIS and XRF data sets and performed the analyses needed for the verification “truth data” sets. DWM oversaw all of the analysis methods. All authors wrote the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1: Figure S1.

The four paintings from the Laudario used to create the training dataset for the 1D-CNN model. (a) The Nativity with the Annunciation to the Shepherds, Master of the Dominican Effigies, c. 1340, miniature on vellum, National Gallery of Art, Washington, D.C., Rosenwald Collection, 1949.5.87. (b) The Ascension of Christ, Pacino di Bonaguida, about 1340, tempera and gold leaf on parchment, Getty Museum, Los Angeles, Ms. 80a (2005.26), verso. (c) The Martyrdom of Saint Lawrence, Pacino di Bonaguida, about 1340, tempera and gold leaf on parchment, Getty Museum, Los Angeles, Ms. 80b (2006.13), verso. (d) Christ and the Virgin Enthroned with Forty Saints, Master of the Dominican Effigies, c. 1340, miniature on vellum, National Gallery of Art, Washington, D.C., Rosenwald Collection, 1959.16.2. Digital images of (b) and (c) courtesy of the Getty's Open Content Program.

Table S1. Summary of per class accuracy for the 1D-CNN model. The 25 pigment/mixture classes and their total class sizes are given along with the per class accuracy using 10-fold cross validation.

Figure S2. The average reflectance spectra for each of the pigment labeled classes in the training dataset used for the 1D-CNN model.

Figure S3. The pigment labeled map created by using a lower threshold (0.85) in the 1D-CNN.

Table S2. Summary of analyses, the Pentecost. Note that the RIS features listed are those identified by an expert user following manual data exploration. When available, fiber optic reflectance spectroscopy (FORS) and Raman analysis may provide additional information about the total chemical composition of each area. However, not all pigments identified are discernible in the RIS data cube on which the 1D-CNN is applied. Therefore, a simplified “pigment class” column notes the materials that should be identified in the paint by this technique.

Table S3. Summary of analyses, Saint Peter Enthroned. Note that the RIS features listed are those identified by an expert user following manual data exploration. When available, fiber optic reflectance spectroscopy (FORS) analysis may provide additional information about the total chemical composition of each area. However, not all pigments identified are discernible in the RIS data cube on which the 1D-CNN is applied. Therefore, a simplified “pigment class” column notes the materials that should be identified in the paint by this technique.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Kleynhans, T., Schmidt Patterson, C.M., Dooley, K.A. et al. An alternative approach to mapping pigments in paintings with hyperspectral reflectance image cubes using artificial intelligence. Herit Sci 8, 84 (2020). https://doi.org/10.1186/s40494-020-00427-7

DOI: https://doi.org/10.1186/s40494-020-00427-7