Abstract

Metaverse platforms have become increasingly prevalent for collaboration in virtual environments. Compared with virtual reality, augmented reality, and mixed reality on their own, metaverse platforms extend virtual worlds with richer social meaning. The research object in this study is the chime bells of Marquis Yi of Zeng, one of China’s most treasured cultural relics. We aimed to create a metaverse platform for the chime bells of Marquis Yi of Zeng to provide visitors with a highly immersive and interactive experience. First, we collected materials and data on the chime bells and other exhibits, together with historical information. Then, the data were processed and integrated for 3D model reconstruction. Next, we designed a virtual roaming system through which visitors can interact with the exhibits to obtain multimedia information and even strike the chime bells to make them ring. Finally, we built the system to connect multiple visitors in different geographic locations and encourage them to collaborate and communicate within the virtual space. The platform helps users visualize cultural heritage, simulates real-life tours with intuitive interaction, and stimulates visitors’ interest in traditional culture. This research also reveals the potential of metaverse-related techniques in the cultural heritage sector.


Introduction

Chime bell music was an integral part of the ritual and music culture of the early Western Zhou Dynasty, and this culture deeply influenced succeeding generations. The chime bells of Marquis Yi of Zeng have the most complete known series of musical sounds of the Zhou Dynasty, and as a ritual instrument, they also embody a wealth of ideas on ritual and music culture. They are a brilliant epitome of Chinese civilization in the fifth century B.C. and a priceless artifact in the treasure house of human history and culture. Because the chime bells of Marquis Yi of Zeng are unique and irreplaceable, tourists can view them only from a distance, and visitors cannot touch or manipulate the chime bells or other exhibits in any way [1]. This limits how much visitors can learn about this cultural legacy and hinders both the spread of traditional culture and exchanges among diverse civilizations. However, modern technology offers new ways to promote traditional culture.

This study focuses on creating an engaging and instructive museum environment in which visitors are immersed in an authentic hall of chime bells, interact with the exhibits, and even strike the chime bells to make music. In addition, visitors at geographically distributed sites can collaborate and communicate in real time with a genuine sense of presence. Such an environment can motivate visitors’ interest in cultural heritage and enhance the museum experience.

A shorter conference version of this paper was presented in [2]. In the current study, we optimize the modeling and visualization process with PBR (physically based rendering), LOD (level of detail), and HDRP (high-definition render pipeline) techniques, which were not covered in the conference paper. In addition, we extend the digital museum into a metaverse platform with a multiplayer mode and evaluate system performance and user satisfaction in additional experimental sessions.

Related research on Web3D, desktop VR/immersive VR/AR/MR/metaverse in museums

To improve the preservation of the chime bells of Marquis Yi of Zeng and the dissemination of traditional culture, it is necessary to combine different modern technologies. The concept of virtual museums was established by Tsichritzis and Gibbs to overcome the disadvantages of a physical exhibition and to provide a vivid experience for remote visitors [1, 3, 4]. With the advent of web technologies, 3D technology (virtual reality (VR), augmented reality (AR), or mixed reality (MR)), and other technologies in recent years, the popularity of virtual museums has increased [5, 6].

Most applications implemented with Web 3D techniques or desktop VR achieve only the first level of immersion: they use a regular computer screen, keyboard, and mouse to view and interact with the virtual museum [7]. For example, Pavlidis et al. [8] presented a web-based virtual museum that hosted and displayed 3D models of musical instruments. Wang [1] used Maya, Unity, and JanusVR to develop a framework for the digital museum and memorial hall of Kangmeiyuanchao Zhanzheng (KMYC). Li et al. [9] used 3ds Max and Unity, a powerful virtual reality platform, to implement a roaming system for the art museum of Qingdao Agricultural University. However, the first level of immersion generally cannot provide users with an experience as vivid as the second level.

The second level of immersion is a sense of total immersion based on visualization and display, tracking and modeling, and sensor technologies [10]. The most basic configuration that makes this level feasible is a head-mounted display (HMD) and a controller [7]: users view the virtual world as if they were inside it and interact with it through the controller [7]. For example, See et al. [11] presented a VR cultural heritage experience of the tomb of Sultan Hussein Shah in Malaysia, in which the 3D model was reconstructed from the actual heritage site using photogrammetry [11]. In addition, HMDs with sensors were introduced to make room-scale VR experiences possible [12], and users could interact with objects in the virtual world (e.g., pick them up) through the controller. Fernandez-Palacios et al. [13] presented three case studies, the tombs of Bartoccini, Inscriptions, and Bettini, in which an HMD and a Kinect allowed users to interact with their surroundings. Although these applications supported a total sense of immersion, their limited interactivity meant that they did not perform as well as AR-based virtual museums.

In addition to VR exhibitions, AR environments let visitors enhance their experience by viewing and engaging with museum collections [10]. For example, in [14], researchers implemented a framework to represent the Parion Theater in Biga, Turkey, using photogrammetry and AR methods: when users viewed the exhibition with a smart device, the app detected a marker and displayed the corresponding content. Qian et al. [15] used mobile augmented reality (MAR) to design museum education content in which visitors could examine exhibits in detail by rotating, zooming, and moving them, which could deepen visitors’ understanding of the cultural ideas behind the exhibition. Although these applications let visitors interact with museum collections, most of them lacked effective museum guidance and a high level of immersion.

MR represents a fusion of VR, AR, and the real environment, thus creating a blend of the real and virtual environments [16,17,18]. It combines the merits of VR and AR [19]. For example, MR technology was employed in a museum environment in the MuseumEye project [20]. This study amalgamated the physical and virtual museum environment to create an engaging and immersive museum with the help of the MR device and enhanced visitors’ experience through interactive gaming, learning, and museum guidance [21]. Despite the technical breakthroughs of the research, there were still limitations, such as restricted social interaction and shared experiences among multiple users.

Finally, the metaverse is the most recent approach [22]. Because the scope of the metaverse is broad and still growing, many definitions and related concepts exist [23]. Xanthopoulou et al. [24] defined the metaverse as a 3D extension of the traditional digital space that hosts massively multiplayer online role-playing games (MMORPGs). Huggett [25] described the metaverse as a futuristic world in which social virtual environments combine immersive VR with physical people, objects, and networks. Siyaev et al. [26] described the metaverse as an MR digital space in the physical world in which people can gather and interact. Motivated by these views, we implemented a virtual platform and environment that combines the following features: engaging visitors with an immersive experience, providing multimedia museum guidance, allowing interaction with the virtual world and objects, and enabling communication among multiple players in different geographic locations. Table 1 presents a comparative analysis of related research that applies various modern techniques to the museum experience. The table also includes our prototype and shows that, compared with the other projects, our platform offers the combination of features that visitors desire.

We compare various projects based on the following key characteristics in Table 1:

(1) Technique: the technology used, including Web 3D, desktop VR, immersive VR, AR, MR, and metaverse.

(2) Mobility: whether users can move freely while enjoying the museum experience. In the cases using Web 3D or desktop VR [1, 8, 9], users view and interact with the virtual museum through a monitor and mouse and therefore cannot move around, so we mark them as “No”. In the projects using immersive VR, users in most cases wear an HMD connected to a computer by a cable of limited length, and depending on the tracking method, users of the HTC Vive [11] and Oculus Rift [13] can move only within a confined space. We therefore mark these as “Limited”. In contrast, users of the MR device HoloLens [20] and of our platform (Oculus Quest 2) can move more freely, so we mark them as “Yes”. Users of the projects in [14] and [15] can move around with a mobile or handheld device, so we mark them as “Yes” as well.

(3) Sense of immersion: according to [27], immersion in VR is understood in various ways. In [7, 10, 28], there are two levels of immersion. The first, namely desktop VR, uses a two-dimensional monitor as the window to the virtual environment and a keyboard and mouse for interaction. The second uses HMDs, which block the user’s view of the real world to create an immersive experience, together with controllers. In [29], the degree of immersion is related to three types of VR systems: nonimmersive systems, which use desktops; immersive systems, which employ sensory output devices such as HMDs; and semi-immersive systems, which lie between the two. Accordingly, we mark the projects in [1, 8, 9], which employ Web 3D or desktop VR, as “Limited”, and the projects in [11, 13], which use HMDs to provide a fully simulated experience, as “Yes”. As noted in [27], immersion is associated with VR; because AR does not block the user’s view, it does not provide this kind of immersion, so we mark the projects in [14, 15] as “No”. Similarly, the project in [20] uses an optical see-through HMD to overlay the virtual world, which we regard as “Limited” compared with a non-see-through HMD. Our system provides fully immersive participation with a non-see-through HMD, so we mark it as “Yes”.

(4) Museum guidance: whether the system provides users with verbal or nonverbal instructions and knowledge to assist them in the museum [30]. According to [20], museum guidance is an organized scenario that can attract, please, inform, and satisfy visitors by presenting the necessary information along a sensible path. Museum guidance should involve at least four aspects: first, leading visitors along a predefined route; second, providing visitors with information about the site; third, ensuring active and helpful interaction; and fourth, educating visitors by feeding them information rather than only disseminating knowledge [20]. Because the projects in [1, 9, 14, 15, 20] and our work provide visitors with well-designed museum guidance, we mark them as “Yes”, whereas the other projects are marked as “Limited”.

(5) Interaction with virtual world and objects: in the projects in [1, 8, 9], visitors can only click specific objects with the mouse, so we mark them as “Limited”. In the projects in [11, 13, 20], users can “touch” or directly manipulate objects in the virtual world, and the view changes accordingly when users turn their heads or move their bodies, so we mark these as “Yes”. In the projects in [14, 15], users can interact directly with the virtual models, for example by rotating a 3D model and zooming in and out, so we also mark them as “Yes”.

(6) Communication between geographically distributed users: whether the system provides users with the function of verbal communication when they are in different locations.

Given the high value of the chime bells of Marquis Yi of Zeng and the prohibition on exhibiting them abroad, one goal of our research was to raise recognition of the chime bells, disseminate cultural heritage, and offer solutions for similar cultural heritage sites around the world. Furthermore, little work has been done to create virtual environments for cultural heritage such as the chime bells of Marquis Yi of Zeng that engage visitors with immersive experiences, provide multimedia guidance, let visitors interact with and perform music on the chime bells, and allow visitors to communicate with one another even when they are geographically separated. Our research addresses these gaps with a platform built on metaverse techniques. Finally, our research establishes a comprehensive and efficient workflow for creating realistic virtual heritage sites.

Materials and methods

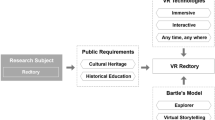

Description of the framework

Laser scanning and photogrammetry, together with 3ds Max, Maya, ZBrush, Substance Painter, and the Unity engine, were used to generate a realistic and attractive virtual environment for the metaverse platform. Because the essential hardware of the metaverse is an HMD, which enables an immersive experience by blocking the user’s view of the real world [23], users wear an HMD during the experience and control it with handheld controllers. In multiplayer mode, users can also talk to each other by voice, which is supported by the distributed virtual reality (DVR) technique. The framework is shown in Fig. 1.

Data acquisition, processing, and modeling

Digital data acquisition of cultural heritage is divided into two categories: 2D and 3D. 2D data acquisition refers to the digitization and computer archiving of text, images, and other media. 3D data acquisition refers to obtaining 3D point-cloud models of exhibits by scanning [31], as well as audio and video recordings of cultural relics.

2D texture: In our platform, 2D data include images of the chime bells of Marquis Yi of Zeng and other exhibits, as well as images of the scene and space, so that visitors can view and interact with the exhibits, walk through the halls, and so on. In a later stage, Photoshop was used to process the original pictures into textures [32], which were then applied to the models to make them more realistic.

3D point cloud: 3D laser scanning captures the shapes of exhibits and represents them digitally in 3D on a computer [33]. It works on the laser triangulation principle: the 3D scanner sweeps a laser beam over the target, the beam is reflected by the object’s surface, and the scanner records the reflection, from which the processing unit computes the 3D point cloud (x, y, z) of the target. According to [34], an alternative to laser scanning is photogrammetry, in which photos are taken, the camera positions are calculated, and a point cloud is generated. The possible merits of photogrammetry are that it is (1) economical and practical, avoiding costly scanning devices; (2) friendly to users without much experience; and (3) professional, generating accurate point-cloud models from photos.
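The triangulation step can be illustrated with the simplest possible geometry. The Python sketch below assumes a pinhole camera with focal length `f` (in pixels) and a laser sheet lying in the plane X = b, parallel to the camera’s optical axis at baseline `b`; by similar triangles, a laser point imaged at horizontal offset `u` from the principal point lies at depth z = f·b/u. The function names and this parallel geometry are illustrative assumptions, not the configuration or calibration of the scanner used in this study.

```python
def triangulate_depth(focal_px, baseline_m, offset_px):
    """Depth z of a laser point from similar triangles: offset / focal = baseline / depth."""
    if offset_px <= 0:
        raise ValueError("the laser point must appear at a positive offset")
    return focal_px * baseline_m / offset_px


def laser_point_to_xyz(focal_px, baseline_m, u_px, v_px):
    """Back-project an image point (relative to the principal point) to (x, y, z).

    Assumes a hypothetical laser sheet in the plane X = baseline, parallel to the
    optical axis, so x always comes out equal to the baseline.
    """
    z = triangulate_depth(focal_px, baseline_m, u_px)
    # Pinhole projection u = f * x / z and v = f * y / z, solved for x and y
    return (u_px * z / focal_px, v_px * z / focal_px, z)


# f = 800 px, b = 0.1 m, spot imaged 40 px right of and 20 px below the centre
print(laser_point_to_xyz(800.0, 0.1, 40.0, 20.0))
```

With these numbers the point lies 2 m from the camera at x = 0.1 m and y = 0.05 m. Real scanners usually tilt the laser relative to the camera and correct for lens distortion, which changes the formula but not the similar-triangles idea.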

Because the raw point cloud contained many noise points and was unordered, we processed the point-cloud data (registration, denoising, and simplification) before 3D modeling. We then extracted the contours of the point cloud to reconstruct the geometric models in 3ds Max and Maya. The overall procedure is shown in Fig. 2.
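To illustrate the denoising and simplification steps, the sketch below implements two common generic techniques in NumPy: statistical outlier removal, which drops points whose mean distance to their k nearest neighbours is unusually large, and voxel-grid downsampling, which replaces all points in a voxel with their centroid. The parameter values `k`, `std_ratio`, and `voxel_size` are hypothetical, registration (for example, ICP alignment of overlapping scans) is omitted, and the study does not state which algorithms its software applied.

```python
import numpy as np


def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Drop points whose mean k-nearest-neighbour distance is unusually large."""
    # Brute-force pairwise distances: O(n^2) memory, suitable for small demos only
    diffs = points[:, None, :] - points[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    # Column 0 of each sorted row is the point itself (distance 0), so skip it
    knn = np.sort(dists, axis=1)[:, 1:k + 1]
    mean_knn = knn.mean(axis=1)
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return points[mean_knn <= threshold]


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel with their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # the inverse's shape differs across NumPy versions
    counts = np.bincount(inverse)
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    return sums / counts[:, None]
```

A production pipeline would use a spatial index such as a k-d tree for the neighbour search instead of the quadratic distance matrix shown here.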

Baking and texturing with the PBR workflow

The model that we reconstructed in 3ds Max and Maya is a low-polygon model with thousands of faces. It depicts the approximate shape of the objects, and its accuracy is relatively low because of the limited number of faces. However, it lays the foundation for the high-polygon model. We used the sculpting application ZBrush to sculpt the mesh efficiently; tools such as ZBrush are popular because they can create highly detailed meshes that were not previously possible [34]. Through this approach, we obtained a high-polygon model with millions of faces and nearly complete surface detail. However, such a large mesh places a heavy load on the software: the frame rate drops, and the experience becomes unusable [7].

How can we reduce the number of polygons in a model while keeping the surface details of the high-polygon model? Our solution was to process the high-polygon model with the PBR (physically based rendering) workflow. PBR is a rendering technique that follows the laws of physics and simulates the interaction of light and materials as in the real world [35]. The procedure is shown in Fig. 3. The PBR workflow uses textures to modify the normals of objects, creating the illusion of raised and recessed areas that do not exist in the low-polygon mesh [7]. This greatly reduces the number of polygons in a model while still displaying the details of a high-polygon mesh.
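The normal-map idea can be sketched in a few lines. The Python example below assumes the common tangent-space convention in which a baked unit normal n is stored in 8-bit RGB as (n + 1)/2, decodes it at shading time, rotates it into world space with the tangent, bitangent, and normal (TBN) basis of a flat low-polygon face, and evaluates only a diffuse Lambert term rather than a full PBR BRDF. All function names are hypothetical; Substance Painter and Unity perform these steps internally.

```python
import numpy as np


def encode_normal(n):
    """Pack a unit tangent-space normal into 8-bit RGB, as a baker would."""
    n = np.asarray(n, dtype=float)
    n = n / np.linalg.norm(n)
    return np.round((n * 0.5 + 0.5) * 255).astype(np.uint8)


def decode_normal(rgb):
    """Unpack 8-bit RGB back into a unit tangent-space normal."""
    n = np.asarray(rgb, dtype=float) / 255.0 * 2.0 - 1.0
    return n / np.linalg.norm(n)


def shade(rgb_texel, tangent, bitangent, normal, light_dir):
    """Diffuse (Lambert) term using the normal-mapped normal, not the face normal."""
    tbn = np.column_stack([tangent, bitangent, normal])  # tangent space -> world
    n_world = tbn @ decode_normal(rgb_texel)
    n_world /= np.linalg.norm(n_world)
    light = np.asarray(light_dir, dtype=float)
    light /= np.linalg.norm(light)
    return max(0.0, float(n_world @ light))


# A flat face pointing along +z, lit from 45 degrees
T, B, N = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
flat = encode_normal([0.0, 0.0, 1.0])      # unperturbed texel
bump = encode_normal([0.5, 0.0, 0.866])    # texel baked from a tilted detail
print(shade(flat, T, B, N, (1, 0, 1)), shade(bump, T, B, N, (1, 0, 1)))
```

Although both texels sit on the same flat geometry, the tilted texel receives noticeably more light, which is the illusion of relief described above.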

The PBR workflow is as follows:

1. The base model (low-polygon model) was prepared in 3ds Max and Maya.

2. The low-polygon model was sculpted in ZBrush, making it a high-polygon model.

3. UV-unwrapping techniques were applied to the models.

4. The low-polygon and high-polygon models were imported into Substance Painter (SP) for baking and texturing: the surface details of the high-polygon model were baked onto the low-polygon mesh, so that the details of the high-polygon mesh can be represented without increasing geometric complexity while the mesh itself remains simple and low-polygon [36].

5. Textures were made in SP.

6. Finally, the models and textures were imported into the Unity engine for further adjustment.

Optimization with the LOD technique

Because model complexity, measured by the number of polygons, grows faster than the capability of our hardware, we needed to optimize these models further to reduce the workload and improve real-time rendering performance. This created a trade-off between highly detailed, realistic models and smooth performance. We used the LOD (level of detail) technique to address this issue without noticeably reducing the fidelity of the models. Successive levels of a model are built with progressively lower polygon resolution, and each less detailed level renders faster than the one before it [37]. In our case, LOD levels were built for different parts of the virtual environment (walls, ceilings, and wall decorations) [36]. For example, LOD0 was the original mesh, and each subsequent LOD was a reduced version of the previous one with fewer polygons. During rendering, less detailed models were used for small or distant parts of the environment, reducing the rendering cost of those parts through simplified geometry [37].
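LOD switching can be sketched as a lookup on how much of the screen an object covers, similar in spirit to the screen-relative transition heights of Unity’s LOD Group. In the Python sketch below, the threshold values and function names are hypothetical; the study does not report its transition settings.

```python
import math


def screen_height_fraction(object_height, distance, vertical_fov_deg):
    """Approximate fraction of the viewport height covered by an object."""
    # Height of the view frustum at the object's distance from the camera
    view_height = 2.0 * distance * math.tan(math.radians(vertical_fov_deg) / 2.0)
    return object_height / view_height


def select_lod(fraction, thresholds=(0.6, 0.3, 0.1)):
    """Return the LOD index to render: 0 is the original mesh.

    thresholds[i] is the smallest screen fraction at which LODi is still used;
    anything smaller falls through to the coarsest level, len(thresholds).
    """
    for level, limit in enumerate(thresholds):
        if fraction >= limit:
            return level
    return len(thresholds)


for d in (1.0, 2.0, 5.0, 100.0):
    print(d, select_lod(screen_height_fraction(2.0, d, 90.0)))
```

For a 2 m object seen with a 90° vertical field of view, this sketch selects LOD0 at 1 m, LOD1 at 2 m, LOD2 at 5 m, and the coarsest level, LOD3, at 100 m.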

Design of system interaction

Natural interaction is a significant precondition for improving the sense of immersion in the metaverse [23]. In this work, the various methods of interaction are shown in Table 2.

We configured the system and installed the clients on the computers in our laboratory's VR (MR) rooms so that users could experience the metaverse platform.

Roaming in the virtual scene

For roaming in the virtual scene, two modes were provided: an autonomous mode and a manual mode. In the autonomous mode, users followed the camera’s perspective along a preset path. This option was useful for first-time users and people unfamiliar with the controllers; its disadvantage was that users could not stop the tour before it finished. In the manual mode, users chose their direction and route with the teleportation function of the controller. In addition, when users turned their heads, the view changed accordingly, as if they were in the real museum.
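The autonomous mode can be sketched as constant-speed motion along a polyline of waypoints. In the Python example below, the waypoint list, the speed, and the function name are hypothetical; the study does not describe how its preset path was represented, and camera orientation along the path is ignored.

```python
import math


def position_on_path(waypoints, speed, t):
    """Camera position after moving for t seconds at constant speed along waypoints."""
    remaining = speed * t  # distance travelled so far
    for start, end in zip(waypoints, waypoints[1:]):
        segment = math.dist(start, end)
        if remaining <= segment:
            # Linear interpolation inside the current segment
            f = remaining / segment if segment > 0 else 0.0
            return tuple(a + f * (b - a) for a, b in zip(start, end))
        remaining -= segment
    return tuple(waypoints[-1])  # tour finished: stay at the last waypoint


path = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (10.0, 0.0, 5.0)]
print(position_on_path(path, 2.0, 2.0), position_on_path(path, 2.0, 6.0))
```

With a speed of 2 units/s, the camera is at (4, 0, 0) after 2 s and at (10, 0, 2) after 6 s. Because the position depends only on elapsed time, this design also reflects the limitation noted above: the tour cannot be interrupted unless extra state such as a pause flag is added.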

Interaction with virtual objects for multimedia information

Each exhibit came with text information, a 3D model, and an audio explanation to help users learn more about its history, as shown in Fig. 4. The 3D model and audio explanation could be accessed through the menu, and the audio explanation described the origin, history, and features of the exhibit. Users could also “grab” and “handle” the 3D models of exhibits: if an exhibit was tagged as “can-be-grabbed”, the user could grab it with the controller for a detailed view, as shown in Fig. 5. In particular, users were encouraged to grab a mallet to strike and ring the chime bells. For users unfamiliar with the rhythm, the next chime bell to strike was highlighted as a cue, as shown in Fig. 6, so they could play a song on the chime bells without knowing the order in advance.
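The highlighting cue amounts to tracking a position in the song. The Python sketch below is a hypothetical illustration: the class name and bell identifiers are invented, and it assumes that striking a wrong bell still rings it (handled elsewhere by the audio system) but does not advance the cue.

```python
class ChimeGuide:
    """Tracks which bell should be struck next in a song (hypothetical helper)."""

    def __init__(self, song):
        self.song = list(song)  # bell identifiers in playing order
        self.index = 0          # position of the next note to play

    @property
    def highlighted(self):
        """Bell to highlight as the cue, or None once the song is complete."""
        return self.song[self.index] if self.index < len(self.song) else None

    @property
    def finished(self):
        return self.index >= len(self.song)

    def strike(self, bell_id):
        """Register a mallet hit; advance only if the highlighted bell was struck."""
        correct = bell_id == self.highlighted
        if correct:
            self.index += 1
        return correct


guide = ChimeGuide(["bell_3", "bell_5", "bell_1"])
guide.strike("bell_5")        # wrong bell: the cue stays on bell_3
guide.strike("bell_3")        # correct: the cue moves on
print(guide.highlighted)      # bell_5
```

Keeping the cue logic separate from sound playback means the same song sequence could drive both the highlight and, for example, a score display.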

Additional videos about the chime bells of Marquis Yi of Zeng were also provided, which users could watch in the immersive environment using the controller.

Multiplayer mode

The multiplayer mode is an important part of the platform. We used DVR technology to realize collaboration and communication among multiple players in the metaverse environment. DVR combines network technology with VR technology and is also known as a collaborative virtual environment (CVE) or multiuser virtual environment (MVE) [38].

The greatest challenge in the architecture design is spatiotemporal consistency. When multiple computation nodes collaborate in the same virtual world, conflicts between them can produce scene states that do not correspond to reality, which undermines the authenticity and realism of the simulation. For example, information may fall out of sync because client hardware performance varies, and collision-detection problems arise from the movement of user-controlled entities in the virtual environment [39].

We proposed a hybrid collaborative architecture to solve these problems, as shown in Fig. 7. The client handles model visualization, user interaction, collision detection, verbal communication with other users, and data transmission with the server, while the server handles user management, data management, and data storage. The advantages of this architecture are the small amount of data transmitted and the quick response. When there are multiple players in the virtual scene, the server synchronizes collision, location, and communication messages based on the data delivered by the clients and then sends the updated messages to every client [39]. In our case, each user is assigned an avatar in the virtual environment that is controlled by the user and indicates the user’s position and orientation. For example, as the user walks in the physical environment, the avatar walks in the virtual space, because the user’s movements in the physical world are synchronized automatically [40].

For multiple players located in different physical spaces, their avatars share the same virtual environment, and the players can communicate verbally. Each client records the user’s speech with the HMD’s microphone, converts and compresses the recorded clip, and sends it to the server, which distributes it to every client. After receiving the forwarded data, each client decompresses it, converts it to a playable format, and plays it through the HMD. The server in our implementation is Photon Server, which supports real-time interaction of multiple players within the same virtual space [41]. It receives data from the clients and synchronizes each client’s data with those of the other clients. After receiving information from the server, each client synchronizes it and, after computation, applies the data to the virtual scene and avatars. The synchronization of avatar data is shown in Fig. 8.
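The message flow described above can be sketched with an in-memory stand-in for the server. The Python example below is a hypothetical illustration and does not use Photon Server’s API: the class and function names are invented, `zlib` stands in for a real voice codec, and it assumes that the server forwards each message to every client except the sender so that users do not hear their own voice echoed back.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class RelayServer:
    """Hypothetical in-memory stand-in for the server role (not Photon's API)."""
    inboxes: dict = field(default_factory=dict)  # client id -> queued messages
    avatars: dict = field(default_factory=dict)  # client id -> latest avatar state

    def join(self, client_id):
        self.inboxes[client_id] = []

    def update_avatar(self, client_id, position, rotation):
        # Store the sender's latest pose, then fan it out to the other clients
        self.avatars[client_id] = {"position": position, "rotation": rotation}
        self._broadcast(client_id, {"type": "avatar", "from": client_id,
                                    **self.avatars[client_id]})

    def relay_voice(self, client_id, compressed_clip):
        # The server forwards the voice bytes without decoding them
        self._broadcast(client_id, {"type": "voice", "from": client_id,
                                    "data": compressed_clip})

    def _broadcast(self, sender, message):
        for client_id, inbox in self.inboxes.items():
            if client_id != sender:
                inbox.append(message)


def encode_clip(samples):
    """Client side: pack signed 16-bit PCM samples into bytes and compress them."""
    raw = b"".join(s.to_bytes(2, "little", signed=True) for s in samples)
    return zlib.compress(raw)


def decode_clip(blob):
    """Client side: decompress and unpack the bytes into playable 16-bit samples."""
    raw = zlib.decompress(blob)
    return [int.from_bytes(raw[i:i + 2], "little", signed=True)
            for i in range(0, len(raw), 2)]
```

Only small pose updates and compressed clips cross the network, which mirrors the small data volume that the text gives as the main advantage of the hybrid architecture.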

Results and discussion

The system runs on a workstation connected to an Oculus Quest 2. Sample screenshots are shown in Fig. 9: Fig. 9(a) shows the main hall, Fig. 9(b) the exhibition hall of digital music, Fig. 9(c) the exhibition hall of history, and Fig. 9(d) the interactive experience hall of chime bells. At the bottom of Fig. 9, the footprints that guide visitors are shown on the left, and the teleportation points are shown on the right.

To evaluate system performance and user satisfaction, 652 visitors were invited to take part in the survey session, and 615 valid surveys were returned. The survey questions, listed in Table 3, were developed from previous studies. In [42], the authors drew on the QoE model categories outlined by Raake et al. [43] and built questionnaires covering enjoyment, immersion, interaction, and discomfort. We retained enjoyment, immersion, and interaction but dropped the construct of discomfort (referred to as “device discomfort”), because discomfort is caused mainly by the HMD hardware rather than by our system, and the purpose of the survey was to learn about users’ attitudes toward our system. Moreover, the authors of [42] used the Oculus Rift, a relatively heavy VR HMD, whereas we chose the lighter and newer Oculus Quest 2, which offers users a better experience. We also retained the constructs of immersion, interaction, and enjoyment because, according to [23], the metaverse builds on immersive interaction and expands with various social meanings (for example, fashion, events, games, education, and office work); immersion and interaction are therefore essential attributes of the metaverse. Additionally, Mystakidis noted in [44] that the metaverse draws on VR, AR, and MR technologies. As [16] noted, immersion and interaction are essential characteristics of the VR experience, while AR emphasizes interaction between users and virtual objects, according to Azuma et al. [45]. We therefore wanted to know how users perceived the immersion and interaction provided by our platform. Finally, because our metaverse platform was built for cultural heritage exhibitions and museums, it also has an entertainment function, which is why we also considered the construct of enjoyment.

We selected the three enjoyment questions for the following additional reasons. Jaynes et al. [46] defined the metaverse as an immersive environment in the form of shared digital media that can remove the constraints of time and space by deceiving users’ visual sense. According to [16, 19, 25], MR and the metaverse aim to build an immersive virtual world that is perceived mainly through the eyes, so vision accounts for the vast majority of the sensory experience in MR and the metaverse. In [47], the authors noted that high-resolution images are needed to provide users with a sense of visual immersion. We therefore needed to determine whether users found the graphics pleasing, which is why we selected Enjoyment 1. In addition, for the latter part of the questionnaire, we designed the open-ended question “How do you perceive the experience provided by our system regarding the graphics, audio, and other senses that comprise the metaverse?”

According to [1] and [3], virtual museums are a way to alleviate the constraints of traditional museums. As mentioned earlier, virtual museums offer considerable convenience and are a viable alternative for displaying, interpreting, and promoting collections. Beyond these advantages, we needed to know whether our metaverse platform was enjoyable, especially compared with a traditional visit, because a museum’s entertainment goal is essential to its survival. This is why we selected Enjoyment 2.

Beyond these two aspects, we also needed to investigate users’ enjoyment of the overall experience. We therefore added Enjoyment 3, based on [32], to evaluate users’ enjoyment of the overall experience on the metaverse platform.

We selected the three immersion questions for the following reasons. According to Radianti et al. [27], views on the concept of immersion differ. Some researchers, such as Slater and Wilbur [48], argue that immersion is a technological attribute that can be assessed objectively; from this perspective, immersion is the extent to which computer displays can create a broad and vivid illusion of reality [48]. This is why we selected Immersion 2, which concerns the accuracy of the display and its resolution.

However, other researchers, such as Witmer and Singer [49], suggest that immersion is a subjective, individual perception, i.e., a psychological phenomenon. From this perspective, immersion is a psychological state in which users experience sensory isolation from reality. This is why we selected Immersion 1, which describes the users’ subjective feelings.

As Hammady argued [20], presence theory is part of immersion theory. Moreover, presence is important in MR and metaverse systems: according to Patrick et al. [50], presence augments the user’s cognitive perception to create a sense of being in a different physical location and time period, which differs from the concept of immersion. Consequently, we selected Immersion 3, which describes the users’ sense of presence.

We selected the three interaction questions as follows. As Owens et al. explained [51], users in the metaverse can interact with the environment and with each other. We therefore needed to determine how users interacted with the environment, which is why we selected Interaction 2. Because avatars represent all users on the metaverse platform, controlling one’s avatar is a prerequisite for further interaction, which is why we selected Interaction 3.

In [47], the authors explained that an HMD and physical auxiliary devices are needed for the system to create an immersive metaverse experience [23]. We therefore needed to evaluate whether users found interacting with devices such as the HMD and controllers easy, which is why we selected Interaction 1, which describes general interaction with our system.

As Fig. 10 shows, enjoyment was measured with Enjoyment 1, Enjoyment 2, and Enjoyment 3. Most visitors agreed that the graphics in the system were pleasing (91%), that the experience on the metaverse platform was more enjoyable than that in a traditional museum (85%), and that the virtual experience was attractive to them (93%). Immersion was measured with Immersion 1, Immersion 2, and Immersion 3: most visitors felt immersed in the experience (90%), found the virtual space in the system realistic (87%), and felt a sense of presence (89%). Interaction was measured with Interaction 1, Interaction 2, and Interaction 3: most visitors found the system easy to use (90%), the way of interacting with the virtual environment natural (83%), and the avatar easy to control (88%).
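The percentages above are per-item agreement rates. This section does not state the response scale or which responses were counted as agreement, so the following Python sketch assumes a five-point Likert scale on which ratings of 4 (agree) and 5 (strongly agree) count as agreement. The function names and the toy responses are hypothetical and are not the study's data.

```python
"""Sketch: per-item agreement rates from Likert-scale responses.

Assumptions (not stated in the study): a 1-5 scale on which
4 = agree and 5 = strongly agree count as agreement.
The responses below are hypothetical toy data, not survey results.
"""

AGREE_THRESHOLD = 4  # hypothetical cut-off on a 1-5 scale


def agreement_rate(ratings: list[int], threshold: int = AGREE_THRESHOLD) -> float:
    """Return the percentage of ratings at or above `threshold`."""
    if not ratings:
        raise ValueError("no ratings supplied")
    agreed = sum(1 for r in ratings if r >= threshold)
    return 100.0 * agreed / len(ratings)


def summarize(responses: dict[str, list[int]]) -> dict[str, int]:
    """Map each questionnaire item to its agreement rate, rounded to a whole percent."""
    return {item: round(agreement_rate(r)) for item, r in responses.items()}


if __name__ == "__main__":
    toy = {
        "Immersion 1": [5, 4, 4, 3, 5, 4, 2, 5, 4, 4],
        "Interaction 2": [4, 3, 5, 4, 4, 2, 4, 5, 3, 4],
    }
    for item, pct in summarize(toy).items():
        print(f"{item}: {pct}% agree")
```

With these toy responses the script reports 80% for the first item and 70% for the second; the study's own figures would come from its actual questionnaire data and scale.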

To obtain more in-depth feedback, we added three open-ended questions and invited 46 participants to experience our system and answer them.

- Q1. What do you think of the experience provided by our system in terms of graphics, audio, and other senses that comprise the metaverse?
- Q2. What are the strengths and weaknesses of the experience provided by our system compared to the traditional museum in reality?
- Q3. Did you leave the virtual museum with a deep impression of the chime bells of Marquis Yi of Zeng that you enjoyed through the metaverse platform? If yes, please describe the impression and explain why you were impressed.

Regarding Q1, the visitors were generally satisfied with the audiovisual experience provided by the metaverse platform. Some visitors said that the high-resolution images and sound made them feel as if they were actually “there”, and a few found the frame rate and display resolution higher than they had expected. Several participants said that it was exciting to interact with the chime bells of Marquis Yi of Zeng and hear their music. However, other participants reported background noise when talking with other users on the platform and hoped that verbal communication would be improved.

Regarding Q2, the visitors noted the following strengths: (1) Compared with visiting a traditional museum, touring via the metaverse platform was more flexible in time and space, since they did not need to visit the physical museum at all. (2) They could “touch” or even “pick up” the exhibits rather than viewing them from a distance through glass cases, and they could interact with the chime bells of Marquis Yi of Zeng to play music. (3) The virtual platform has enough space to display more collections. Several weaknesses were identified as well: (1) Visitors who were new to VR, MR, or the metaverse were unfamiliar with operating the controllers. (2) Some visitors felt that the metaverse lacked the atmosphere of a real museum, which offers more activities and events to attend.

Regarding Q3, most visitors (85% of participants) said that the visit to the metaverse platform had left a deep impression. A few visitors noted that the detailed textures of the exhibits made them look “real”. A number of participants were impressed by the interactions with various exhibits, especially the chime bells of Marquis Yi of Zeng; they became curious about the metaverse platform and hoped for more ways to interact with the exhibits and the virtual world. Additionally, some visitors were particularly impressed by the multimedia tour guide, which they found effective.

The survey results suggest the following. First, from the visitors’ perspective, our metaverse platform has high potential to enhance the museum experience and could be a positive addition to the cultural heritage sector. The system allows users to “touch” or “manipulate” the chime bells and other exhibits while browsing, which is impossible in real museums. Multimedia tour guidance gave users the freedom to explore unfamiliar exhibits without a human guide; if platforms of this kind become popular, they may lead to the replacement of human tour guides in real museums [20]. In addition, our system lets visitors experience cultural heritage without the constraints of time and space, so they do not need to travel to a physical museum at a specific time. Second, from the museums’ standpoint, our platform can attract more (virtual) visitors while reducing the number of on-site visitors, relieving pressure on physical museums, especially when visitor numbers exceed their capacity [9]. Moreover, because the exhibits on our platform are displayed and stored digitally, the virtual collection requires no handling of physical objects, no physical storage space, and no dedicated guards, which saves significant space and resources. Third, from the standpoint of the general public, our platform is promising if extended to serve educational as well as museological goals. Because participants showed considerable curiosity about the system, it could be adapted to include more educational content that raises public awareness of history and cultural heritage and motivates people to learn more about them.
Fourth, from the perspective of researchers and supporting organizations, our platform demonstrates how digital technology, such as metaverse technology, can help promote the sustainability of cultural heritage. Protecting and preserving cultural heritage, however, requires interdisciplinary research spanning the humanities and engineering [52]. Our work is also meaningful in that it may encourage researchers to consider using metaverse technology to preserve more cultural heritage.

Conclusions and future study

In this work, we introduced the cultural content and value of the chime bells of Marquis Yi of Zeng as background and proposed a metaverse platform for presenting this traditional culture.

This research makes the following three main contributions.

First, we digitized and visualized the chime bells of Marquis Yi of Zeng, other exhibits, and the exhibition scene using laser scanning and digital photogrammetry. We built 3D models and optimized them with the physically based rendering (PBR) workflow to improve both visual quality and efficiency, and we applied level of detail (LOD) to several elements to maintain consistent visual detail while reducing rendering costs. Second, we developed a roaming system for the scenes that lets users interact with the exhibits to obtain multimedia information, together with other interaction features that connect visitors with the exhibits, assist with orientation, and enhance interactivity. To improve the engine's rendering quality, we employed the high-definition render pipeline (HDRP). Third, we provided a multiplayer mode that encourages multiple visitors to communicate and share experiences within the same virtual space, making them feel as if they were together even when geographically distributed.
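The LOD step in the first contribution can be illustrated with a simple distance-based selector. The paper does not describe how its LOD levels are chosen at run time, so the Python sketch below is an assumption: discrete levels switched by camera distance, with a small hysteresis margin so that an object does not flicker between levels when the camera hovers near a boundary. The class name, thresholds, and margin are hypothetical; many engines switch on projected screen-space size rather than raw distance, but the selection logic is analogous.

```python
"""Sketch: distance-based level-of-detail (LOD) selection with hysteresis.

Hypothetical illustration; names, thresholds, and units are assumptions,
not values taken from the platform described in the paper.
"""
from dataclasses import dataclass, field


@dataclass
class LodSelector:
    # Upper distance bound (meters) for LOD0, LOD1, ...; beyond the last
    # bound the coarsest level is used. Hypothetical values.
    thresholds: list[float] = field(default_factory=lambda: [5.0, 15.0, 40.0])
    # Extra distance required before switching to a coarser level.
    hysteresis: float = 1.0
    current: int = 0  # 0 = most detailed level

    def __post_init__(self) -> None:
        if self.thresholds != sorted(self.thresholds):
            raise ValueError("thresholds must be in ascending order")

    def _raw_level(self, distance: float) -> int:
        """Level implied by distance alone, ignoring hysteresis."""
        for level, bound in enumerate(self.thresholds):
            if distance <= bound:
                return level
        return len(self.thresholds)

    def update(self, distance: float) -> int:
        """Return the LOD level for the current frame."""
        level_now = self._raw_level(distance)
        level_lagged = self._raw_level(distance - self.hysteresis)
        if level_now < self.current:
            # Camera moved closer: refine immediately to keep detail.
            self.current = level_now
        elif level_lagged > self.current:
            # Camera moved away: coarsen only past bound + hysteresis.
            self.current = level_lagged
        return self.current


if __name__ == "__main__":
    selector = LodSelector()
    levels = [selector.update(d) for d in [4.0, 5.5, 6.5, 5.5, 4.5, 50.0]]
    print(levels)  # [0, 0, 1, 1, 0, 3]
```

Refinement happens as soon as the camera crosses a boundary inward, whereas coarsening waits until the camera is a further `hysteresis` meters out; this asymmetry favors visual detail at the cost of a few extra frames at the finer level.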

In the future, we aim to enrich the features and content of this social ecosystem and reinforce its social meaning, for example, by adding more events to attend and new ways to interact. We will add themed activities, such as porcelain-making and lantern-making, to the platform. These activities will help people learn more about the exhibits and will require far more collaboration among users. In lantern-making, for example, the first step is to build the lantern's skeleton, the second is to paste pieces of colorful paper onto the skeleton, and the last is to insert the wick, after which the lantern begins to glow. Visitors can work on different steps at the same time to collaborate efficiently. In addition, we plan to extend the platform to other areas, such as gaming and education. Finally, we intend to test the system on our campus to ensure that users in various locations can enjoy the metaverse experience, and to improve verbal communication in a future version.
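The planned lantern-making activity can be read as a three-stage pipeline. The paper does not specify how concurrent work will be coordinated, so the Python sketch below assumes that each visitor owns one step, that every step takes one time unit, and that several lanterns are made in a row; while one visitor builds a new skeleton, another pastes paper onto the previous lantern. Under these assumptions, n lanterns take n + 2 time units instead of 3n when a single visitor does every step. The function names and timing model are hypothetical.

```python
"""Sketch: collaborative lantern-making modeled as a three-stage pipeline.

Hypothetical model: one visitor per step, each step takes one time unit,
and a lantern moves to the next step only after the previous one is done.
"""

STEPS = ("build skeleton", "paste paper", "insert wick")


def pipeline_schedule(n_lanterns: int) -> list[list[tuple[str, int]]]:
    """For each time unit, list the (step, lantern index) pairs in progress."""
    if n_lanterns <= 0:
        return []
    schedule = []
    for t in range(n_lanterns + len(STEPS) - 1):
        active = []
        for s, step in enumerate(STEPS):
            lantern = t - s  # the lantern that reaches step s at time t
            if 0 <= lantern < n_lanterns:
                active.append((step, lantern))
        schedule.append(active)
    return schedule


def sequential_time(n_lanterns: int) -> int:
    """Time units needed if a single visitor performs every step alone."""
    return max(n_lanterns, 0) * len(STEPS)


if __name__ == "__main__":
    plan = pipeline_schedule(4)
    for t, work in enumerate(plan):
        print(t, work)
    print("pipelined:", len(plan), "sequential:", sequential_time(4))
```

With three visitors and four lanterns, the pipeline finishes in 6 time units rather than 12; the gain depends entirely on the assumed equal step durations.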

Availability of data and materials

The data used in this article are available upon request to the authors.

Abbreviations

- 3D: Three-dimensional
- VR: Virtual reality
- AR: Augmented reality
- MR: Mixed reality
- HMD: Head-mounted display
- PBR: Physically based rendering
- LOD: Level of detail
- HDRP: High-definition render pipeline

References

1. Wang D. Exploring a narrative-based framework for historical exhibits combining JanusVR with photometric stereo. Neural Comput Appl. 2018;29(5):1425–32.

2. Wu L, Su W, Ye S, Yu R. Digital museum for traditional culture showcase and interactive experience based on virtual reality. In: IEEE International Conference on Advances in Electrical Engineering and Computer Applications (AEECA); 2021. p. 218–23.

3. Tsichritzis D, Gibbs S. Virtual museums and virtual realities. In: International Conference on Hypermedia and Interactivity in Museums; 1991. p. 17–25.

4. Kiourt C, Koutsoudis A, Pavlidis G. DynaMus: a fully dynamic 3D virtual museum framework. J Cult Herit. 2016;22:984–91.

5. Ioannides M, Magnenat-Thalmann N, Papagiannakis G. Mixed reality and gamification for cultural heritage. Berlin: Springer; 2017.

6. Barbieri L, Bruno F, Muzzupappa M. Virtual museum system evaluation through user studies. J Cult Herit. 2017;26:101–8.

7. Carvajal DAL, Morita MM, Bilmes GM. Virtual museums. Captured reality and 3D modeling. J Cult Herit. 2020;45:234–9.

8. Pavlidis G, Tsiafakis D, Provopoulos G, Chatzopoulos S, Arnaoutoglou F, Chamzas C. MOMI: a dynamic and internet-based 3D virtual museum of musical instruments. In: Third International Conference of Museology; 2006.

9. Li L, Yu N. Key technology of virtual roaming system in the museum of ancient high-imitative calligraphy and paintings. IEEE Access. 2020;8:151072–86.

10. Styliani S, Fotis L, Kostas K, Petros P. Virtual museums, a survey and some issues for consideration. J Cult Herit. 2009;10:520–8.

11. See ZS, Santano D, Sansom M, Fong CH, Thwaites H. Tomb of a Sultan: a VR digital heritage approach. In: Third Digital Heritage International Congress (DigitalHERITAGE) held jointly with the 24th International Conference on Virtual System & Multimedia (VSMM 2018); 2018.

12. Hajirasouli A, Banihashemi S, Kumarasuriyar A, Talebi S, Tabadkani A. Virtual reality-based digitisation for endangered heritage sites: theoretical framework and application. J Cult Herit. 2021;49:140–51.

13. Fernández-Palacios BJ, Morabito D, Remondino F. Access to complex reality-based 3D models using virtual reality solutions. J Cult Herit. 2017;23:40–8.

14. Özer DG, Nagakura T, Vlavianos N. Augmented reality (AR) of historic environments: representation of Parion Theater, Biga, Turkey. J Fac Archit. 2016;13(2):185–93.

15. Qian J, Cheng J, Zeng Y. Design and implementation of museum educational content based on mobile augmented reality. Comput Syst Sci Eng. 2021;36(1):157–73.

16. Bekele MK, Pierdicca R, Frontoni E, Malinverni ES, Gain J. A survey of augmented, virtual, and mixed reality for cultural heritage. ACM J Comput Cult Herit. 2018;11(2):1–36.

17. Eswaran M, Gulivindala AK, Inkulu AK, Bahubalendruni MVAR. Augmented reality-based guidance in product assembly and maintenance/repair perspective: a state of the art review on challenges and opportunities. Expert Syst Appl. 2023;213:118983.

18. Eswaran M, Bahubalendruni MVAR. Challenges and opportunities on AR/VR technologies for manufacturing systems in the context of industry 4.0: a state of the art review. J Manuf Syst. 2022;65:260–78.

19. Kounlaxay K, Kim SY. Design of learning media in mixed reality for Lao education. Comput Mater Contin. 2020;64(1):161–80.

20. Hammady R, Ma M, Strathern C, Mohamad M. Design and development of a spatial mixed reality touring guide to the Egyptian Museum. Multimed Tools Appl. 2020;79(5–6):3465–94.

21. Hammady R, Ma M, AL-Kalha Z, Strathern C. A framework for constructing and evaluating the role of MR as a holographic virtual guide in museums. Virtual Real. 2021;25:895–918.

22. Baszucki D. The metaverse is coming. https://www.wired.co.uk/article/metaverse. Accessed 23 Feb 2022.

23. Park SM, Kim YG. A metaverse: taxonomy, components, applications, and open challenges. IEEE Access. 2022;10:4209–51.

24. Xanthopoulou D, Papagiannidis S. Play online, work better? Examining the spillover of active learning and transformational leadership. Technol Forecast Soc Change. 2012;79(7):1328–39.

25. Huggett J. Virtually real or really virtual: towards a heritage metaverse. Stud Digit Herit. 2020;4(1):1–5.

26. Siyaev A, Jo GS. Towards aircraft maintenance metaverse using speech interactions with virtual objects in mixed reality. Sensors. 2021;21(6):2066.

27. Radianti J, Majchrzak TA, Fromm J, Wohlgenannt I. A systematic review of immersive virtual reality applications for higher education: design elements, lessons learned, and research agenda. Comput Educ. 2020;147:103778.

28. Kaplan-Rakowski R, Gruber A. Low-immersion versus high-immersion virtual reality: definitions, classification, and examples with a foreign language focus. In: Proceedings of the Innovation in Language Learning International Conference; 2019.

29. Cipresso P, Giglioli IAC, Rava MA, Riva G. The past, present, and future of virtual and augmented reality research: a network and cluster analysis of the literature. Front Psychol. 2018;9:2086.

30. Fine EC, Speer JH. Tour guide performances as sight sacralization. Ann Tour Res. 1985;12(1):73–95.

31. Li F, Zhao H. 3D real scene data collection of cultural relics and historical sites based on digital image processing. Comput Intell Neurosci. Article 9471720.

32. Hua L, Chen C, Fang H, Wang X. 3D documentation on Chinese Hakka tulou and internet-based virtual experience for cultural tourism: a case study of Yongding County, Fujian. J Cult Herit. 2018;29:173–9.

33. Trebuňa P, Mizerák M, Rosocha L. 3D scanning: technology and reconstruction. Int Sci J About Simul. 2018;4(3):1–6.

34. Frank F, Unver E, Benincasa-Sharman C. Digital sculpting for historical representation: Neville tomb case study. Digital Creat. 2017;28(2):123–40.

35. Pharr M, Jakob W, Humphreys G. Physically based rendering: from theory to implementation. 3rd ed. Burlington: Morgan Kaufmann; 2016.

36. Ferdani D, Fanini B, Piccioli MC, Carboni F, Vigliarolo P. 3D reconstruction and validation of historical background for immersive VR applications and games: the case study of the Forum of Augustus in Rome. J Cult Herit. 2020;43:129–43.

37. Luebke D, Reddy M, Cohen JD, Varshney A, Watson B, Huebner R. Level of detail for 3D graphics. Burlington: Morgan Kaufmann; 2003.

38. You Z, Huang J, Xue J, Chen J, Liu J, Yu Q, Hu H. A multiplayer virtual intelligent system based on distributed virtual reality. Int J Pattern Recognit Artif Intell. 2021;35(14):2159050.

39. Kan K. Application of virtual reality in continuous casting training system. M.S. thesis, Department of Control Engineering, North China University of Science and Technology, Tangshan, China; 2019.

40. Sra M, Garrido-Jurado S, Maes P. Oasis: procedurally generated social virtual spaces from 3D scanned real spaces. IEEE Trans Visual Comput Graph. 2018;24(12):3174–87.

41. Liang H, Chang J, Deng S, Chen C, Tong R, Zhang J. Exploitation of multiplayer interaction and development of virtual puppetry storytelling using gesture control and stereoscopic devices. Comput Anim Virtual Worlds. 2016;28(5):1–19.

42. Keighrey C, Flynn R, Murray S, Murray N. A physiology-based QoE comparison of interactive augmented reality, virtual reality and tablet-based applications. IEEE Trans Multimed. 2020;23:333–41.

43. Raake A, Egger S. Quality and quality of experience. In: Quality of experience: advanced concepts, applications and methods. Berlin: Springer; 2014.

44. Mystakidis S. Metaverse. Encyclopedia. 2022;2(1):486–97.

45. Azuma R, Baillot Y, Behringer R, Feiner S, Julier S, MacIntyre B. Recent advances in augmented reality. IEEE Comput Graph Appl. 2001;21(6):34–47.

46. Jaynes C, Seales WB, Calvert K, Fei Z, Griffioen J. The metaverse: a networked collection of inexpensive, self-configuring, immersive environments. In: Proceedings of the Workshop on Virtual Environments (EGVE); 2003. p. 115–24.

47. Choi HS, Kim SH. A content service deployment plan for metaverse museum exhibitions: centering on the combination of beacons and HMDs. Int J Inf Manage. 2017;37(1):1519–27.

48. Slater M, Wilbur S. A framework for immersive virtual environments (FIVE): speculations on the role of presence in virtual environments. Presence Teleoperators Virtual Environ. 1997;6(6):603–16.

49. Witmer BG, Singer MJ. Measuring presence in virtual environments: a presence questionnaire. Presence Teleoperators Virtual Environ. 1998;7(3):225–40.

50. Patrick E, Cosgrove D, Slavkovic A, Rode JA, Verratti T. Using a large projection screen as an alternative to head-mounted displays for virtual environments. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; 2000. p. 478–85.

51. Owens D, Mitchell A, Khazanchi D, Zigurs I. An empirical investigation of virtual world projects and metaverse technology capabilities. ACM SIGMIS Database Adv Inform Sys. 2011;42(1):74–101.

52. Kim S, Im D-u, Lee J, Choi H. Utility of digital technologies for the sustainability of intangible cultural heritage (ICH) in Korea. Sustainability. 2019;11(21):6117.

Acknowledgements

This research was supported by Major Research Projects of Philosophy and Social Science in Institutions of Higher Education in Hubei (19ZD008).

Funding

The authors declare that they received no financial support for the research and/or authorship of this paper.

Author information

Contributions

LW wrote the article, performed the state-of-the-art research, and developed the models; WS contributed to the software development; SY built the 3D models; RY designed the entire project, supervised the project, and revised the paper. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Wu, L., Yu, R., Su, W. et al. Design and implementation of a metaverse platform for traditional culture: the chime bells of Marquis Yi of Zeng. Herit Sci 10, 193 (2022). https://doi.org/10.1186/s40494-022-00828-w

DOI: https://doi.org/10.1186/s40494-022-00828-w