- Research

- Open access

2D and 3D representation of objects in architectural and heritage studies: in search of gaze pattern similarities

Heritage Science volume 10, Article number: 86 (2022)

Abstract

The idea of combining an eye tracker with VR goggles has opened up new research perspectives in the study of cultural heritage, but it has also made it necessary to re-examine the validity of more basic eye-tracking research done using flat stimuli. Our intention was to investigate the extent to which flattening the stimuli in the 2D experiment affected the results obtained. An experiment was therefore conducted using an eye tracker connected to virtual reality goggles and 3D stimuli, which were a spherical extension of the 2D stimuli used in the 2018 study carried out with a stationary eye tracker and a computer screen. The subject of the research was the so-called tunnel church effect, which stems from the belief that medieval builders deliberately lengthened the naves of their cathedrals to enhance the role of the altar. The study compares eye-tracking data obtained from viewing three 3D and three 2D models of the same interior with altered proportions, in terms of the number of observers, the number of fixations and their average duration, and the time spent looking at individual zones. Although the participants were allowed to look around freely in VR, most of them still made about 70–75% of their fixations in the area that had been presented in the flat stimuli in the previous study. We deemed it particularly important to compare the perception of the areas that had been presented in 2D and had evoked either very much or very little interest: the presbytery, the vaults, and the floors. The results indicate that, although VR allows for a more realistic and credible research situation, architects, art historians, archaeologists and conservators can, under certain conditions, continue to use screen-based eye trackers in their research. The paper points out the consequences of simplifying the research scenario, e.g. a significant change in fixation duration. The analysis of the results shows that the data obtained by means of VR are more regular and homogeneous.

Graphical Abstract

Introduction

Scientific fascination with art and the desire to understand aesthetic experience have led to the discovery of many facts about visual processing [1, 2]. The use of biometric tools to diagnose how people perceive art has given rise to neuroaesthetics [3, 4]. One such tool is the eye tracker (ET), which registers an individual's gaze path as a sequence of fixations (pauses lasting from 66 to 416 ms [5]) and saccades (rapid shifts of gaze from one point of regard to another [6, 7]). Assigning these visual behaviors to predefined Areas of Interest (AOIs) makes it possible to analyze numerous parameters. For example, for each area one can calculate the number of people who looked at it (visitors), the time they spent doing so (total visit duration), or the number of fixations recorded within it. It is also possible to determine precisely when a given area was first examined (time to first fixation) and how long a single fixation on it lasted on average (average fixation duration).
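To make these definitions concrete, the minimal Python sketch below computes them for a single AOI from a list of fixations that have already been assigned to AOIs. It is an illustration under stated assumptions, not the export logic of any eye-tracking package: the `Fixation` record and the `aoi_metrics` function are hypothetical, and the time spent in an AOI is approximated as the sum of fixation durations, whereas a "visit" in eye-tracking software usually also includes the saccades made within the AOI.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Fixation:
    # Hypothetical record: one fixation already mapped to an AOI.
    participant: str
    aoi: str
    start_ms: float     # onset relative to stimulus onset
    duration_ms: float


def aoi_metrics(fixations: list[Fixation], aoi: str) -> dict:
    """Per-AOI metrics described in the text (sketch; see assumptions above)."""
    hits = [f for f in fixations if f.aoi == aoi]
    visitors = {f.participant for f in hits}

    # Time to first fixation: earliest onset inside the AOI for each visitor.
    first_onsets = [min(f.start_ms for f in hits if f.participant == p)
                    for p in visitors]

    avg_fix: Optional[float] = mean(f.duration_ms for f in hits) if hits else None
    mean_ttff: Optional[float] = mean(first_onsets) if first_onsets else None
    return {
        "visitors": len(visitors),
        "fixation_count": len(hits),
        # Sum of fixation durations, used here as a proxy for visit duration.
        "total_fixation_ms": sum(f.duration_ms for f in hits),
        "avg_fixation_ms": avg_fix,
        "mean_time_to_first_fixation_ms": mean_ttff,
    }
```

For example, two participants with three fixations of 200, 300 and 100 ms on a "Presbytery" AOI, first reaching it at 100 ms and 250 ms, yield 2 visitors, 3 fixations, an average fixation duration of 200 ms and a mean time to first fixation of 175 ms.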

The application of eye trackers has allowed researchers to take a closer look at issues described by numerous sociologists, urban planners, art historians, conservators, and architects of the past. We distinguish four environments for conducting ET research. Stationary devices enable the study of images displayed on a screen, e.g. photos presented to tourists [8]. Mobile ET can support research in the natural environment [9, 10], and by combining mobile ET with other methods, advanced analyses of architectural and urban space can be conducted [11]. Recent technological advances have allowed ET to be combined with augmented reality (AR) [12]. The fourth type, the combination of an eye tracker and VR goggles, has already opened up several new research perspectives; it is possible, for example, to analyze the perception of reconstructed historical spaces [13, 14].

This new research environment has made it necessary to revisit unresolved questions, especially those concerning the validity of prior research on three-dimensional objects presented as flat images. A visualization displayed on a screen as a flat image is a considerable simplification of the same object seen in reality or presented as omnidirectional content [15]. While eye-tracking research on paintings [16] or pictures displayed on a screen [17, 18] provokes numerous controversies [19], similar studies may be even more questionable when 3D objects such as sculptures, landscapes or buildings are presented as 2D stimuli [20]. When scientists choose how to present a given object (e.g. the size of the screen [21], the perspective from which it is seen [22], its luminance contrast levels [23], or image quality [24]), they cannot be sure how these decisions affect the results of their research. Similarly, research dealing with hypothetical issues, e.g. those related to nonexistent interiors or alternative versions of an existing structure [25], simply cannot be done in real-life surroundings. Because of technological limitations, scientists had to use visualizations displayed on a screen. Now that portable eye trackers make it possible to display augmented reality stimuli and eye trackers can be integrated into VR head-mounted displays (HMDs) [26], should scientists dealing with the perception of material heritage give up simplifying the cognitive scenario [27]? Should they stop using stationary eye trackers? To what extent do photomontages and photorealistic photographs capture the specific atmosphere of a given monument [28]?

Gothic cathedral hierarchy

Research on Gothic architecture makes it possible to state that the builders of cathedrals aimed at a harmonious combination of form, function and message [29,30,31]. It is believed that alterations in the proportions of these buildings and in the appearance of their details were directly related to the mystical significance of such spaces [32]. The same can be said about the altar. The presbytery, which closes the axis of a church, is the most important part of such a structure [33]. The shape of the cathedral's interior should help direct one's gaze toward that great closure, making it easier for the congregation to concentrate on the religious ritual taking place there [29, 34]. Various scholars believe that the evolution in thinking about the proportions of sacral buildings led medieval builders to deliberately lengthen the naves of their cathedrals to enhance the role of the altar. This theoretical assertion was confirmed by the author in her 2018 research on the so-called longitudinal church effect [35]. That experiment, which employed eye trackers and flat stimuli, showed that longer naves helped participants concentrate on the altar and its immediate surroundings; the viewers also spent less time looking to the sides, and their eye movements were less dynamic. However, the conclusions of that research indicated a need for further methodological experiments [35] in eye tracking related to the perception of architecture.

Eye tracking and heritage

Research centered on stimuli displayed in VR makes use of the same mechanisms as portable eye trackers. The difference is that fixations are not marked on a film recorded by a camera aimed at whatever the participant is looking at, but are registered in relation to the objects the person sees in the VR goggles (https://vr.tobii.com/). Eye trackers combined with VR HMDs remain a novelty, but this combination has already been applied in research into historical areas [36] and in landscape and cityscape protection [37]. The variety of ways in which other types of eye trackers have been used in cultural heritage studies should not be neglected. In the study of the perception of art, stationary eye trackers [38], mobile eye trackers [39] and those connected with VR [13] have already been used. This technology has been used to analyze the perception of paintings [40], the behavior of museum visitors [41, 42], and the perception of historical monuments [43, 44], interiors [45], and natural [46] and urbanized environments [47] by different users [48]. The growing interest in so-called neuroarchitecture is easy to notice [49].

Eye-tracking heritage research belongs to this dynamically developing field [50]. There is a strong scientific motivation to take a closer look at the pros and cons of this kind of research, since the implementation of new technologies is often characterized by a lack of a critical methodological approach [51]. The quality of data depends on multiple factors, one of which is the system in which the research is conducted [52]. Researchers who have compared the characteristics of data obtained with different types of ET have indicated the advantages and drawbacks of various environments used for gathering behavioral data [53,54,55,56]. For instance, one study compared how people perceive their surroundings while strolling through a city with how they perceive a recording of such a walk [53], and several interesting differences and similarities were observed in the speed of visual reactions. Prof. Hayhoe's team noticed a similar change when looking at objects changed from passive to active, which resulted in significantly shorter fixation times [57]. One might also ask whether a comparison of passive and active perception will match the tendencies described by Haskins, Mentch, Botch et al., according to which observations that allow head movement "increased attention to semantically meaningful scene regions, suggesting more exploratory, information-seeking gaze behavior" [56]. If that is the case, viewers who are allowed to move their heads should spend most of their time looking at the presbytery and the vaults [35]. It is also worth asking whether visual behavior will change in the same way as was observed in a museum when scan paths in VR and in reality were compared. Would there be a tendency to switch focus rapidly if the average fixation duration decreased in VR [54]?

In 2018, the available options were research using flat visualizations of cathedral interiors examined with a stationary eye tracker, or experiments in a real-life setting with a portable eye tracker. Today, eye-tracking devices and the software that accompanies them permit experiments in virtual reality environments. Revisiting the same topic with a more advanced tool may therefore be not only interesting but scientifically necessary.

Materials and methods

Research aim

The most basic aim of the research was to compare the data presented in the aforementioned paper [35], in which participants looked passively at flat images, with the results of a new experiment employing VR technology, which allowed participants to move their heads and look around. The author intended to determine whether her previous decision to use a stationary eye tracker and simplify the cognitive process was scientifically valid. Did research involving flat displays, even photorealistic ones such as photographs, photomontages, and architectural visualizations, yield false results? This comparison is meant to bring us closer to understanding the future role of stationary eye trackers in heritage care.

Basic assumption

The methodology of the VR experiment was kept as similar as possible to that of the previous experiment involving a stationary eye tracker. The most important aspects concerned the selection of participants, the presentation of stimuli, the number of auxiliary images displayed, and the duration of the analyzed visual reactions.

Research tools and software

The set used in the experiment consisted of HTC Vive goggles and the Tobii Pro Integration eye tracker [58]. The virtual research space was oriented using SteamVR Base Station 2.0. Eye tracking was recorded binocularly at a sampling frequency of 120 Hz, with an accuracy of 0.5° and a trackable field of view of 110°. Additional spherical images were produced with a Samsung Gear 360 camera. Tobii Pro Lab 360VR software was used in the experiment. The model of the cathedral and its versions, as well as the final stereoscopic panoramas, were developed in Blender, version 2.68. Most of the materials came from free online sources; the remaining textures were created in Adobe Substance Painter.
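The recording parameters above translate into simple quantities that are useful when planning an analysis. The Python sketch below is offered as a back-of-the-envelope aid, not as part of the original workflow: it computes how many gaze samples per eye a 120 Hz tracker yields in the 8 s analysis window, and roughly how many pixels a 0.5° accuracy corresponds to in a panorama. It assumes the panorama is stored as an equirectangular image; the 7200 px width used in the example is hypothetical, since the resolution of the stimuli is not stated here.

```python
import math


def samples_in_window(rate_hz: float, window_s: float) -> int:
    # Number of gaze samples (per eye) recorded in a time window.
    return round(rate_hz * window_s)


def horizontal_error_px(accuracy_deg: float, pano_width_px: int,
                        latitude_deg: float = 0.0) -> float:
    # Small-angle approximation for an equirectangular panorama (assumption):
    # 360 degrees of longitude span the full image width, and a fixed angular
    # error covers more longitude (hence more pixels) away from the horizon,
    # by a factor of 1 / cos(latitude).
    px_per_deg = pano_width_px / 360.0
    return px_per_deg * accuracy_deg / math.cos(math.radians(latitude_deg))


print(samples_in_window(120, 8))             # 960 samples per eye
print(horizontal_error_px(0.5, 7200))        # ~10 px at the horizon
print(horizontal_error_px(0.5, 7200, 60.0))  # ~20 px at 60 degrees elevation
```

The latitude term matters for AOIs such as the vaults, which lie far from the horizon of the panorama, where the same angular error spreads over more pixels.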

Participants

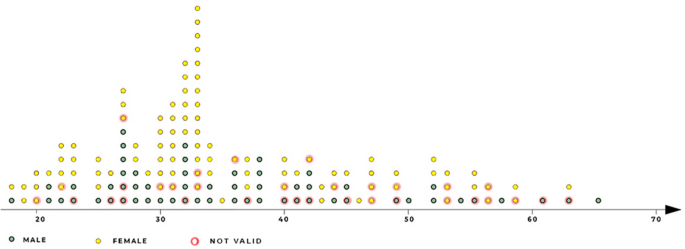

In accordance with the conclusions drawn from previous studies, the number of participants was increased. A total of 150 people were invited to take part; after careful data verification, only 117 of the recordings were considered usable. All volunteers were adults under 65 (Fig. 1) with at least post-primary education, born and raised in Poland, and living within the Wrocław agglomeration. Being European, living in a large city, and having at least basic education make it very likely that the observers were familiar with the typical appearance of a Gothic interior.

As in the previous research, participants were accepted only if they had no education related to the field of research (i.e. art historians, architects, city planners, conservators, and custodians were all excluded), because professional experience influences the perception of stimuli relevant to one's field of practice [59, 60]. Another excluded group, identified on the basis of the information provided in the application form, were those who had already taken part in the previous research, since they might remember the displayed stimuli and there would be no way of verifying to what extent that affected the results. The preliminary survey was also intended to exclude people with major diagnosed vision impairments: problems with color vision, strabismus, astigmatism, cataract, or impaired eye mobility. Participants were allowed to wear contact lenses. However, no optometric tests were conducted to verify the participants' declarations. From a scientific perspective, it also seemed crucial that the participants have a similar familiarity with the VR environment employed. None of the participants owned such a device. In all, 102 volunteers had no prior experience with VR goggles; the other 15 had some experience with VR, but of a very limited sort: only 5–10 min, and no more than twice in their lifetime. It is therefore reasonable to claim that the participants constituted a fairly homogeneous group unfamiliar with the scientific equipment used.

Personalization of settings and making the participants familiar with a new situation

One significant difference from the original research was that the device is placed on the participant's head and that the participant is allowed to move not only their eyes but also their neck and torso. Considerable time was spent disinfecting the goggles, making sure the device was worn properly, and arranging the cables linking the goggles to the computer so that they obstructed the participants' head movements as little as possible. (Some participants, especially petite women, found the device slightly uncomfortable because of its weight.) Three auxiliary spherical images, unrelated to the topic of the research, were used to adjust the spacing of the eye tracker lenses and to demonstrate the capabilities of the headset. This presentation was also intended to lessen any stress or awkwardness stemming from an unfamiliar situation, and it gave the researchers a chance to make sure the participants saw everything clearly and felt comfortable. When both the participants and the researchers agreed that everything had been appropriately configured, the participants were asked not to make any alterations or adjustments to either the headset or the chair once the experiment commenced. The protocol specified that a recording would be abandoned if preparing a participant after they had put on the goggles took longer than 10 min, so that the effects of fatigue on the recording remained insignificant. After the preparation, one researcher observed the participant's behavior while another supervised calibration and the display of the presentation, including the stimuli. Notes on the participants' reactions and any difficulties encountered were made both during and after the experiment. A recording was also considered invalid if the participant touched the goggles or if any problem with stimulus display was perceived (Fig. 1).

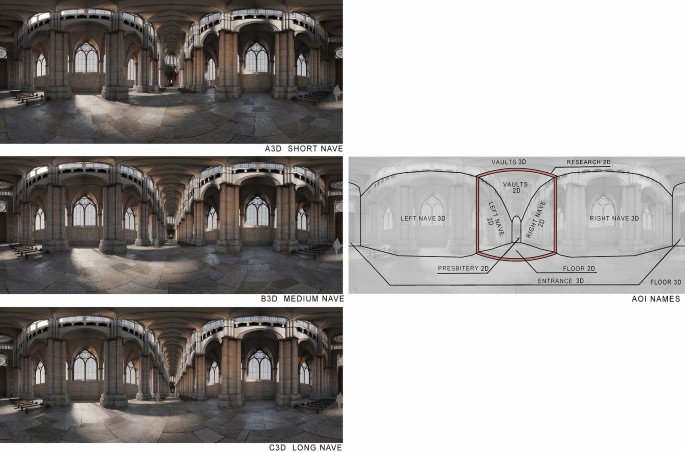

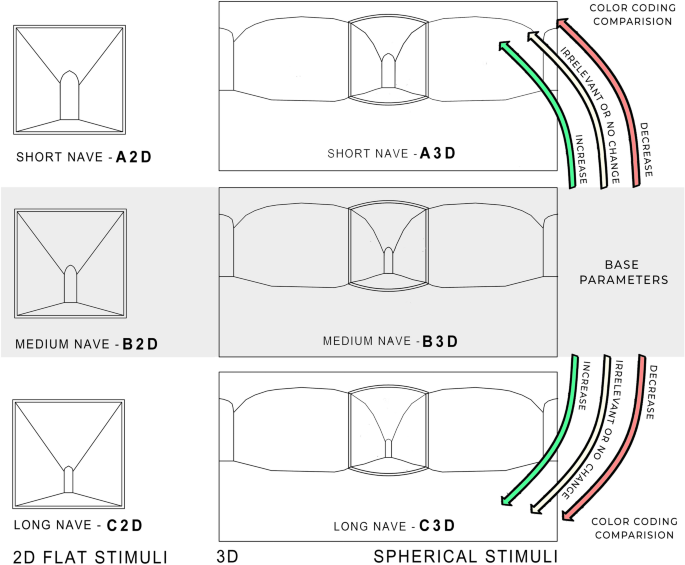

Used spherical stimuli

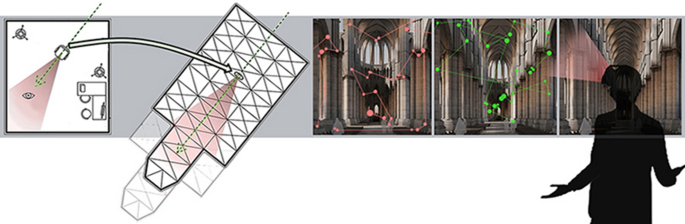

The inability to move decreases the level of immersion in the presented environment [61]. However, to make a comparison with the previous studies possible, the participants were not allowed to walk. For this reason, only stationary spherical images were used in the research (Fig. 2). The proportions, the location and size of details, the materials, colors, and contrasts, and the intensity and angle of the light cast inside the interior were all identical to those used in the previous research. As before, three stimuli with different nave lengths were generated, with proportions based on real buildings of this kind. The images were named as follows: A3D, cathedral with a short nave; B3D, cathedral with a medium-length nave; and C3D, cathedral with a long nave (Fig. 2).

Spherical visualizations and division into AOI. A3D—flattened spherical image prepared for the cathedral with a short nave, A3D spherical image for the cathedral with a short nave, which presents a part of the nave and the presbytery, B3D spherical image for the cathedral with a medium-length nave, C3D spherical image for the cathedral with a long nave, AOI INDICATORS—the manner of allocating and naming Areas of Interest. AOI names including the phrase 3D describe all elements not seen in the previous research. AOI names ending with 2D describe the elements shown in the previous research (Marta Rusnak on the basis of a visualization done by Wojciech Fikus).

Spherical images and flat images

The most striking difference between the two experiments is the extent of the image to which the participants of the VR test were exposed. The use of a spherical image made it possible to turn one's head and look in all directions, whereas those who took part in the original test saw only a predetermined fragment of the interior. For the purpose of this study, this AOI was named "Research 2D"; the range of the architectural detail visible in the first test is marked with a red frame (Fig. 2). It might seem that the participants exposed to a flat image saw less than one-tenth of what the participants of the VR test were shown. This illusion stems from the nature of a flattened spherical image, which additionally enlarges the areas located close to the vantage point by strongly bending all nearby horizontal lines and surfaces. The same distortion is responsible for the rounded top and bottom of the originally square image. One aspect in which homogeneous conditions proved difficult to achieve was matching the balance of color, contrast, and brightness between the computer screen and the displays in the VR goggles. The settings, once deemed a satisfactory approximation of those applied in the original test, were not altered during the experiment.
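The "one-tenth" illusion can be quantified. Assuming the flattened image is an equirectangular projection (the projection is not named in the text) and approximating a region as a latitude-longitude rectangle, the Python sketch below compares the fraction of the image area a region occupies with the fraction of the full sphere of view it actually subtends. The region sizes in the example are illustrative, not the dimensions of the red frame.

```python
import math


def image_fraction(lon_span_deg: float, lat_min_deg: float, lat_max_deg: float) -> float:
    # Share of an equirectangular image (360 x 180 degrees) covered by the region.
    return (lon_span_deg / 360.0) * ((lat_max_deg - lat_min_deg) / 180.0)


def sphere_fraction(lon_span_deg: float, lat_min_deg: float, lat_max_deg: float) -> float:
    # Share of the full sphere (4*pi steradians) subtended by the same region:
    # solid angle = d_lon * (sin(lat_max) - sin(lat_min)).
    d_lon = math.radians(lon_span_deg)
    band = math.sin(math.radians(lat_max_deg)) - math.sin(math.radians(lat_min_deg))
    return d_lon * band / (4.0 * math.pi)


# A region straddling the horizon looks smaller in the flat image than it is...
print(image_fraction(90, -30, 30), sphere_fraction(90, -30, 30))  # ~0.083 vs 0.125
# ...while a cap directly above the viewer (e.g. the vault overhead) looks larger.
print(image_fraction(360, 60, 90), sphere_fraction(360, 60, 90))  # ~0.167 vs ~0.067
```

Under these assumptions, regions near the zenith and nadir (the vault above and the floor below the viewer) are inflated in the flattened image, while the central band containing the red frame is compressed relative to its true share of the field of view.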

Procedure

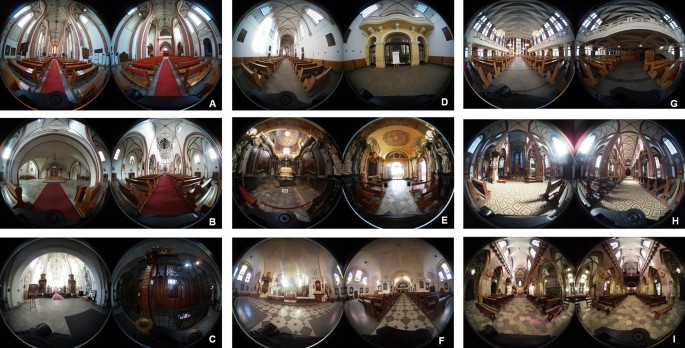

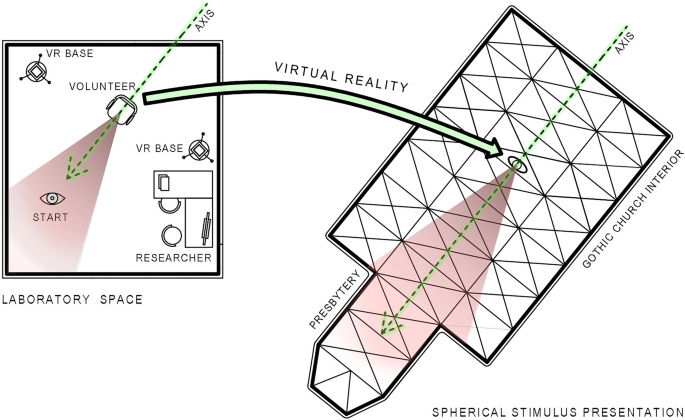

The experiment, like the original research, included nine auxiliary images (Fig. 3). The presented material consisted of spherical photos taken inside churches in Wrocław, Poland. Each image, including the photos and the visualizations, was prepared so that the presbytery in each case would be located in the same spot, on the axis, in front of the viewer (Fig. 4).

Auxiliary images. a Church of the Virgin Mary on Piasek Island in Wrocław. b Church of Corpus Christi in Wrocław. c Church of St. Dorothy in Wrocław. d Dominican Church in Wrocław. e Czesław's Chapel in the Dominican Church in Wrocław. f Church of St. Maurice in Wrocław. g Church of St. Ignatius of Loyola in Wrocław. h Church of St. Michael the Archangel in Wrocław. i Cathedral in Wrocław. (Photo: MR)

The auxiliary images played an important role. First, they ensured that the studied interiors were not displayed while participants were still uncertain how to perform the task. Second, all the photos showed church interiors, so, like believers or tourists entering a church, the participants were not surprised by the interior's function.

As in the previous research, it was important to prevent participants from using their short-term memory to compare churches with different nave lengths, so each participant's set of images included only one of the images under discussion (A3D, short; B3D, medium-length; or C3D, long). Three sets of stimuli were therefore prepared. Each set included instructional boards informing the participants about the rules of the experiment and allowing individual calibration of the eye tracker, one of the three analyzed images, and nine auxiliary images in the form of the spherical photos described above. As in the original experiment, the participants were given the same false task: to identify those displayed buildings which, in their opinion, were located in Wrocław. This was meant to induce a homogeneous cognitive intention among the participants [62] when they looked at a new, entirely unfamiliar interior. Since VR technology was expected to be a fairly new experience for most participants, it was decided that the prepared visualizations would not be shown as one of the first three stimuli in a set; the auxiliary images provided the randomization, with visualization A3D, B3D or C3D appearing as one of the last three stimuli. The other images were displayed in random order.
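The ordering constraints described above can be expressed compactly. The Python sketch below is a hypothetical reconstruction, not the script used in the study: it shuffles the nine auxiliary images and inserts the single analyzed visualization at a random position among the last three slots, which also keeps it out of the first three. Instruction boards, calibration, and the separating images are omitted, and the image names are placeholders.

```python
import random


def build_sequence(target: str, auxiliary: list[str], rng: random.Random,
                   last_k: int = 3) -> list[str]:
    """Hypothetical ordering rule for one participant's stimulus set."""
    order = list(auxiliary)
    rng.shuffle(order)                   # auxiliary images in random order
    n = len(order) + 1                   # total number of stimuli after inserting the target
    pos = rng.randrange(n - last_k, n)   # one of the last `last_k` positions (0-based)
    order.insert(pos, target)
    return order


aux = [f"aux_{i}" for i in range(1, 10)]  # placeholders for the nine photos
print(build_sequence("B3D", aux, random.Random(42)))
```

Passing an explicit `random.Random` instance keeps each participant's order reproducible from a recorded seed, which is a design choice of this sketch rather than something reported in the study.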

In the previous test, the trigger point function of the BeGaze (SMI) software was used, which automatically displayed the next image once the participant looked at a specific predefined zone on the additional board. Tobii Pro Lab [58] did not offer such a feature. All visual stimuli were separated from one another by an additional spherical image; when the participant looked at a red dot on it, the person supervising the entire process had to decide when to display the next image.
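For readers unfamiliar with gaze-contingent triggers, the Python sketch below shows one common way such a function can work: the next stimulus is released once the gaze has stayed inside a predefined zone for a minimum dwell time. This is a hypothetical illustration, not the BeGaze implementation, whose exact rule is not described here; the zone coordinates and the dwell threshold are assumptions.

```python
from typing import Iterable, Optional


def trigger_time(samples: Iterable[tuple[float, float, float]],
                 zone: tuple[float, float, float, float],
                 dwell_ms: float) -> Optional[float]:
    """Return the timestamp at which gaze has dwelt in `zone` for `dwell_ms`.

    samples: (t_ms, x, y) gaze samples in chronological order.
    zone: (x_min, y_min, x_max, y_max) in the same coordinates as x, y.
    """
    x0, y0, x1, y1 = zone
    entered: Optional[float] = None    # time the current stay in the zone began
    for t, x, y in samples:
        if x0 <= x <= x1 and y0 <= y <= y1:
            if entered is None:
                entered = t
            if t - entered >= dwell_ms:
                return t               # dwell criterion met: show the next image
        else:
            entered = None             # gaze left the zone: restart the dwell timer
    return None                        # never triggered: an operator must advance manually
```

With samples every 10 ms that enter the zone at 10 ms, leave at 30 ms and re-enter at 40 ms, a 20 ms dwell threshold fires at 60 ms, because the timer restarts whenever the gaze leaves the zone.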

The recording span calculated for the original study, 8 s, was kept unchanged. This unusual duration results from the experiment conducted in 2016 [14]; keeping it makes the results comparable. However, it proved insufficient for the participants: 5 of the 7 volunteers invited to preliminary tests (who were not included in the analyzed group) expressed the need to spend more time looking at the images and suggested that the brevity of the display made the experience uncomfortable. The duration of a single display was therefore increased to 16 s, but only the first 8 s were analyzed and used in the comparison.
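Restricting a 16 s display to its first 8 s requires a rule for fixations that straddle the cut-off. The Python sketch below trims such fixations at the window boundary and discards those that start later; this trimming rule is an assumption for illustration, and the study's own export settings may have handled boundary fixations differently.

```python
WINDOW_MS = 8_000.0  # analyzed span, out of the 16 s display


def clip_to_window(fixations: list[tuple[float, float]],
                   window_ms: float = WINDOW_MS) -> list[tuple[float, float]]:
    """Keep (start_ms, duration_ms) fixations that begin inside the window,
    trimming any fixation that runs past the window end."""
    clipped = []
    for start, duration in fixations:
        if start >= window_ms:
            continue  # begins after the analyzed span
        clipped.append((start, min(duration, window_ms - start)))
    return clipped


print(clip_to_window([(100, 200), (7_900, 300), (8_100, 150)]))
# [(100, 200), (7900, 100.0)]
```

An alternative rule would drop straddling fixations entirely; either way, the choice slightly changes fixation counts and average durations near the end of the window.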

Preparation of the room

Looking at a spherical image in VR did not allow movement within the displayed stimulus; therefore, the participants were asked to sit, just as in the study involving a stationary eye tracker. A change in the position of the participant's body would require a change in the height of the camera and, consequently, in the perspective, which might have adversely affected the experiment. The room was quiet, and the light could be dimmed. The positions of the participant's seat and the VR base unit were marked on the floor so that accidental shifts of the equipment could be checked and, if necessary, corrected (Fig. 4). This was important because any shift in the position of the equipment would change the orientation of the stimuli in space, making the direction in which the participant initially looked inconsistent with the axes of the displayed interiors.

Results

This section of the article describes the new results and then compares them to the previous observations of flat images.

Numerical data were generated using Tobii Pro Lab, which automatically produced collective numerical reports from the recordings. Five main eye-tracking variables were analyzed: fixation count, number of visitors, average fixation duration, total fixation duration, and time to first fixation. The exported XLS files were processed in Microsoft Excel and Statistica 13.3. Graphs and diagrams were prepared in Photoshop CC 2015.

In the end, biometric data were gathered correctly from 40 people shown stimulus A3D, 40 shown stimulus B3D, and 37 shown stimulus C3D. This means that, for various reasons, 22% of the recordings (33 of 150) were deemed unusable for the purposes of the analysis (Fig. 1).
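The reported share follows directly from the group sizes. The short Python check below reproduces it from the figures given in the text (150 invited participants; 40, 40 and 37 usable recordings); it is arithmetic only and adds no new data.

```python
usable_by_stimulus = {"A3D": 40, "B3D": 40, "C3D": 37}
invited = 150

usable = sum(usable_by_stimulus.values())   # 117 usable recordings
excluded = invited - usable                 # 33 rejected recordings
excluded_share = excluded / invited         # 0.22, i.e. 22%

print(usable, excluded, f"{excluded_share:.0%}")  # 117 33 22%
```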

Because of the change in equipment and in the experimental environment, more Areas of Interest were analyzed than in the previous study [35]. The way people looked at the spherical images was analyzed by dividing each of them into ten AOIs, whose locations and names are shown in Fig. 2. The frame in the center contains the AOIs whose names end with "2D", because they correspond to the AOIs used for the flat images in the first study; together, these AOIs make up one area named Old Research. The five remaining AOIs, which cover the parts of the image not visible to those exposed to the flat stimuli, have names ending with "3D".

A fixation report was produced for the entire spherical image as well as for the five old and five new research AOIs (Table 1). The number of fixations made on the entire stimuli A3D, B3D and C3D does not differ significantly. Many more fixations were made on the Old Research AOI. The number of fixations within the five new AOIs decreased as the interior lengthened, whereas the values for the fields visible in the previous test increased.

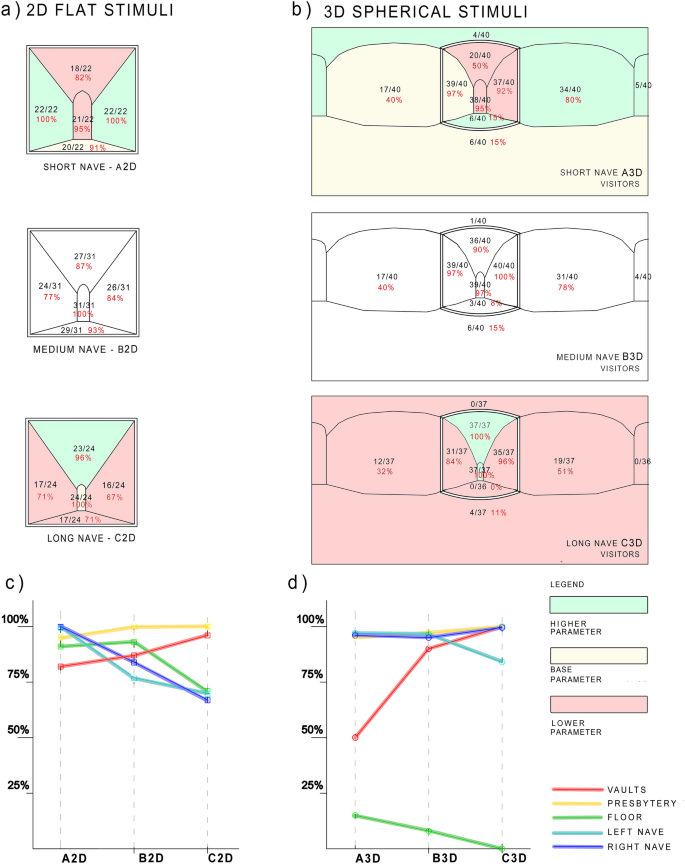

To determine interest in particular parts of a stimulus, we can look at how many participants viewed those parts. Table 2 lists all 10 analyzed AOIs and their values. When reading the data, it is important to bear in mind that different numbers of participants were shown each example, so the numbers are also given as percentages. The first part of the table shows that the values in the five columns of new AOIs follow a decreasing trend.
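Because the groups differ in size (40, 40 and 37 participants), visitor counts must be normalized before trends can be read across A3D, B3D and C3D. The Python sketch below converts counts to percentages of each group and labels the direction of change; the function names are hypothetical, and the counts in the usage example are invented placeholders, not values from Table 2.

```python
GROUP_SIZES = {"A3D": 40, "B3D": 40, "C3D": 37}
ORDER = ("A3D", "B3D", "C3D")  # short, medium-length, long nave


def visitor_percentages(counts: dict[str, int]) -> dict[str, float]:
    # Share of each group that looked at the AOI at least once.
    return {s: 100.0 * counts[s] / GROUP_SIZES[s] for s in ORDER}


def trend(values: dict[str, float]) -> str:
    # Direction of change as the nave lengthens (A3D -> B3D -> C3D).
    seq = [values[s] for s in ORDER]
    if all(a > b for a, b in zip(seq, seq[1:])):
        return "decreasing"
    if all(a < b for a, b in zip(seq, seq[1:])):
        return "increasing"
    return "mixed"


# Placeholder counts for illustration only.
pct = visitor_percentages({"A3D": 20, "B3D": 16, "C3D": 10})
print(pct, trend(pct))
```

Comparing raw counts instead would bias the C3D column downward simply because three fewer participants were shown that stimulus.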

As the interior proportions are extended, three old AOIs gain more and more attention. The largest and most significant difference can be seen in the number of observers of the Vaults 2D AOI. For the Floor 2D and Left Nave 2D AOIs the number is decreasing, while the number of people looking at the Presbytery AOI increases slightly.

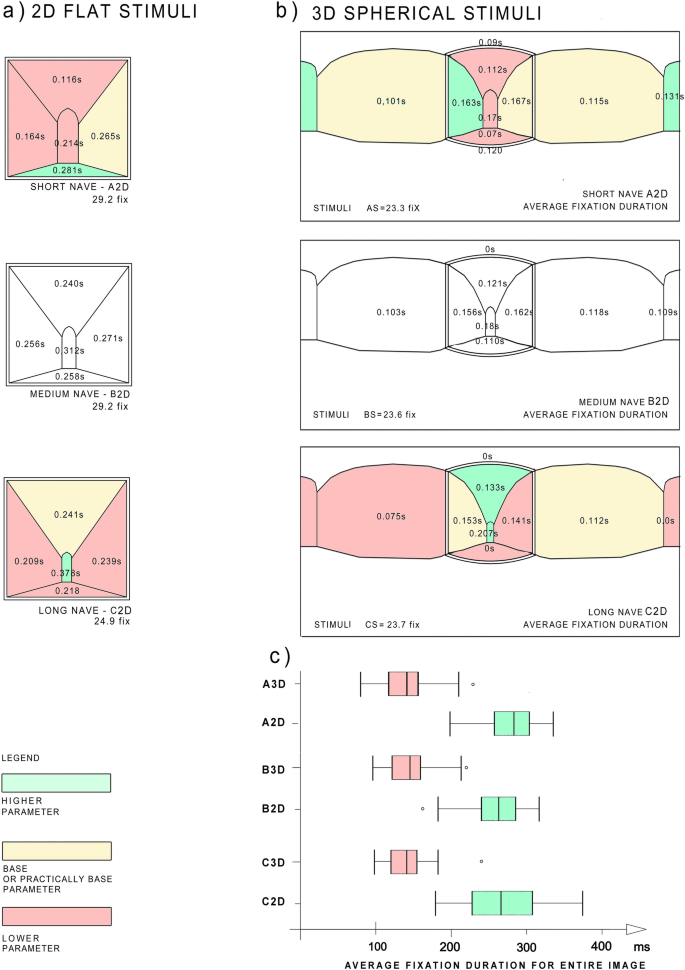

Another analyzed parameter is average fixation duration. Despite the differences noticed, one-way ANOVA showed that all observed deviations in fixation duration are statistically insignificant (p > 0.05; because of the large number of groups compared, the exact p values are given in Table 2, lines 10 and 15). Nevertheless, it is notable that in all three stimuli the Presbytery 2D AOI attracted the longest fixations, and that for C3D the average fixation duration exceeded 207 ms. This suggests how visually important this AOI becomes in the interior with the longest nave.

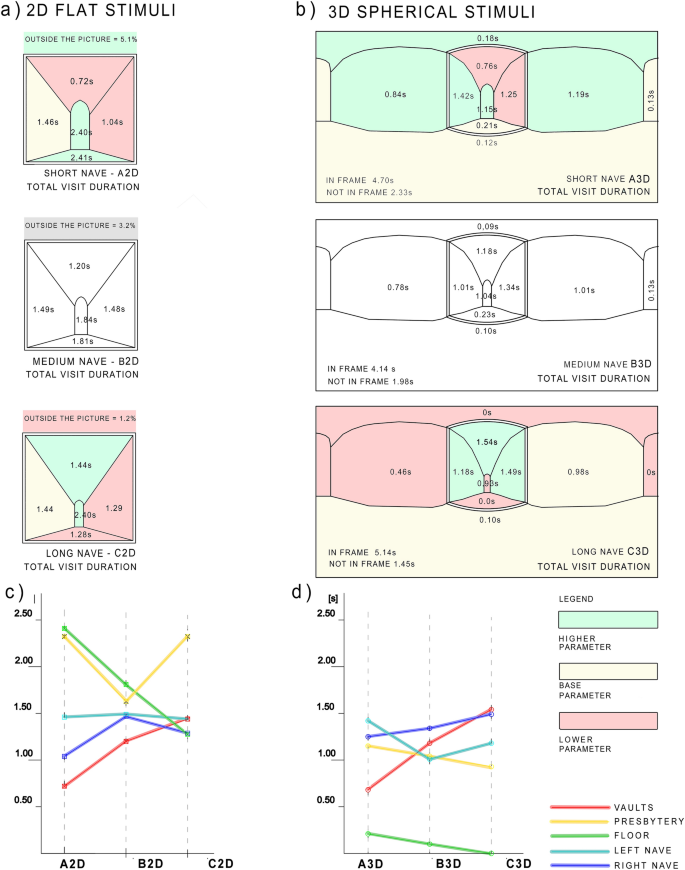

The last general feature analyzed is total visit duration. By far the largest difference in its value was recorded for the Vaults 2D AOI: those exposed to stimulus C3D spent twice as much time looking at the vaults as those exposed to stimulus A3D. For the longest interior, attention time also increased for the Right Nave 2D AOI, while the Floor 3D AOI shows almost no change in visit duration. Data analysis shows that all observed deviations in average fixation duration and total visit duration are statistically insignificant (ANOVA F(2,117), p > 0.05; exact values are given in Table 2, lines 10 and 15). This insignificance stems from the fact that the stimuli differ only slightly: the only thing that actually changes is the fragment of the main nave most distant from the observer. The data interpretation that follows is based on a general analysis of different aspects of visual behavior and on establishing increasing or decreasing tendencies in the AOIs across cases A3D, B3D and C3D. These observations are then juxtaposed with the relationships between stimuli observed in the 2D experiment. However, before making such a comparison, one needs to be certain that the cropped image chosen for the 2D experiment really covers the area at which those exposed to the spherical interior looked the longest.
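For readers who want to reproduce this kind of test, the Python sketch below computes the one-way ANOVA F statistic from raw per-participant values (for example, each participant's average fixation duration in one AOI). It is a textbook implementation, not the Statistica procedure used in the study. The degrees of freedom are computed from the data as (k - 1, N - k), where k is the number of groups and N the number of observations; with groups of 40, 40 and 37 participants this gives (2, 114). The p-value requires the F distribution; if SciPy is available, `scipy.stats.f_oneway` returns both the statistic and the p-value.

```python
from statistics import mean


def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [mean(g) for g in groups]

    # Variability of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variability of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)

    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


print(one_way_anova([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # (27.0, 2, 6)
```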

One of the primary reasons for researching the same topic again was to check the validity of the methodology used previously, including the author's choice of how much of the interior was shown in the original stimuli. Had the participants of the VR experiment looked at this area only briefly and found other parts of the building more attractive, this might have suggested that errors were made in the assumptions of either the previous research or the current one, and a credible comparison of the results of the two experiments would have been impossible. A general analysis was therefore carried out for the Research 2D AOI. The value that perhaps best reflects cognitive engagement is the total visit duration calculated for all the AOIs visible in the previous research. The average value of this parameter was 4.70 s (almost 59% of the recording span) for stimulus A3D, 4.14 s (52%) for B3D, and 5.14 s (slightly over 64%) for C3D. Interestingly, on average nearly three quarters of the participants' fixations (70.1% to 75.9%) fell within the area presented in the previous research (Table 3).
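The percentages quoted above are simple ratios. The Python sketch below reproduces the share of the 8 s window from the reported mean total visit durations and also shows, with a hypothetical helper, how the share of fixations falling inside the Research 2D AOI can be computed from per-AOI fixation counts; the AOI labels and counts passed to the helper are placeholders.

```python
WINDOW_S = 8.0
mean_visit_s = {"A3D": 4.70, "B3D": 4.14, "C3D": 5.14}  # values reported in the text

for stimulus, seconds in mean_visit_s.items():
    print(stimulus, f"{100 * seconds / WINDOW_S:.2f}% of the window")
# A3D 58.75%, B3D 51.75%, C3D 64.25%


def share_inside(fixation_counts: dict[str, int], region_aois: set[str]) -> float:
    """Hypothetical helper: fraction of all fixations that fall in `region_aois`."""
    total = sum(fixation_counts.values())
    inside = sum(n for aoi, n in fixation_counts.items() if aoi in region_aois)
    return inside / total if total else 0.0
```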

That means that fixations within the Research 2D AOI were focused and that the participants made few fixations when looking to the sides or to the back. If those points of focus were more evenly distributed, one would be entitled to assume that no part of the interior drew attention in particular. However, these results suggest that the Research 2D AOI really did include the most important architectural elements in such a religious building as far as the impression made on an observer is concerned. This allows a further, more detailed analysis and comparison of the data obtained using a VR headset and those acquired by means of a stationary eye tracker.

Subsequent comparisons are made for single AOIs. To facilitate comparison of the data gathered in both experiments, the names of the stimuli are expanded with length distinctions: short cathedral A3D, medium-length cathedral B3D, long cathedral C3D. A graphic representation depicting all the AOIs for the three new spherical stimuli and the three flat stimuli from the old research was prepared to allow a clear comparison of a large amount of numerical data. The upper part shows the schemata for the interior with a short nave (A2D and A3D), followed by the interiors with a medium-length nave (B2D and B3D), while the longest (C2D and C3D) are displayed at the bottom. Each such graph was filled in with the values for the aspect analyzed: number of observers (Fig. 6), average fixation duration (Fig. 7), and total visit duration (Fig. 8). Values in the middle section were compared with the schemata shown above and below (Fig. 5). The size of the difference determined the color-coding of each AOI, as specified in the attached legend: when the values were equal, the AOI was cream-colored; when the analysis showed a lower value, it was colored pink; and when an increase was noticed, it was colored green. Additional graphs beneath the schemata support the comparison.
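The color-coding rule can be written as a small function. In the Python sketch below, the three color names follow the text; the tolerance that decides when two values count as "equal" is an assumption, since the legend's thresholds are not reproduced here, and the finer gradation by size of difference is omitted.

```python
def color_for(value: float, reference: float, tolerance: float = 0.0) -> str:
    """Color an AOI by comparing its value with the reference schema.

    cream: no difference (within `tolerance`), green: increase, pink: decrease.
    """
    diff = value - reference
    if abs(diff) <= tolerance:
        return "cream"
    return "green" if diff > 0 else "pink"


print(color_for(12, 9), color_for(7, 9), color_for(9.02, 9, tolerance=0.05))
# green pink cream
```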

Average fixation duration: comparison between interiors with short, medium-length and long naves. a 2D flat stimuli, b 3D spherical stimuli. c Box plot comparing fixation duration for all three new and three old stimuli. Each box spans from Q1 (first quartile) to Q3 (third quartile), with a vertical line denoting the median. Whiskers indicate the minimum and maximum. Outliers are shown as points lying more than 1.5 × the interquartile range beyond the box.
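The box-plot convention in the caption can be illustrated with Python's standard library. A minimal sketch, assuming the "inclusive" quartile method and whiskers drawn to the most extreme non-outlier values; the plotting software used for the figure may compute quartiles slightly differently:

```python
from statistics import quantiles
from typing import Dict, List


def box_plot_stats(durations_ms: List[float]) -> Dict[str, object]:
    """Box-plot summary with the 1.5 x IQR outlier rule."""
    # 'inclusive' treats the data as the whole population; other quartile
    # methods give slightly different boxes.
    q1, med, q3 = quantiles(durations_ms, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inliers = [d for d in durations_ms if low_fence <= d <= high_fence]
    outliers = sorted(d for d in durations_ms if d < low_fence or d > high_fence)
    return {
        "q1": q1,
        "median": med,
        "q3": q3,
        "whiskers": (min(inliers), max(inliers)),  # extremes among non-outliers
        "outliers": outliers,
    }
```

With the toy data `[1, 2, ..., 9, 100]` the box runs from 3.25 to 7.75, the whiskers reach 1 and 9, and only 100 is flagged as an outlier.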

The volunteers were able to look up, down, to the back or to the sides. Changes in such activity can be analyzed through the number of people looking at particular AOIs. Figure 5 shows the combination of the three schemata, including the number of observers for each AOI. In the upper part, most of the newly added AOIs for stimulus A3D are colored green, which suggests that the participants looked around more. The 3D AOIs in the bottom part of the figure, which shows the data for stimulus C3D, are dominated by red. Comparing the groups exposed to stimuli A3D, B3D and C3D reveals a gradual decrease in the number of participants looking in directions other than forwards. At this level of generalization the new study confirms the previous findings. The vaults and the presbytery, for example, were observed most willingly by people looking at interior C2D. The number of those looking to the sides decreased as the length of the nave increased, and many more people neglected the Left Nave 3D AOI. The longest nave evidently discouraged the observers from looking to the sides of the spherical image.
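The number of observers of an AOI is the number of distinct participants with at least one fixation in it; dividing by the group size gives percentages such as those reported for the vaults, or 0 of 37 for the back of C3D. A minimal sketch, assuming a hypothetical list of (participant, AOI) pairs with one pair per fixation:

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def observers_per_aoi(fixations: Iterable[Tuple[str, str]]) -> Dict[str, int]:
    """Count distinct participants with at least one fixation in each AOI.

    `fixations` holds one (participant_id, aoi_name) pair per fixation.
    """
    seen: Dict[str, Set[str]] = defaultdict(set)
    for participant, aoi in fixations:
        seen[aoi].add(participant)
    return {aoi: len(people) for aoi, people in seen.items()}


def observer_share(count: int, group_size: int) -> float:
    """Percentage of a stimulus group that looked at an AOI at least once."""
    return 100.0 * count / group_size
```

Repeated fixations by the same person count once, so a participant who returns to the vaults several times still adds a single observer.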

People observing 2D images on a screen also looked sideways more when the church was shorter and less when its nave was lengthened. It should be pointed out that relatively few people looked to the back of the spherical image. Here the same tendency could be observed as with looking to the sides: the longer the nave, the smaller the need to divert one’s gaze from the central part of the image. This may explain why none of the 37 people exposed to C3D looked at the back of the image during the 8 s that were recorded and analyzed. As in the previous research, the longer the nave became, the fewer people chose to look upwards at the Vaults 3D AOI. Likewise, the visitor count shows that more people looked at the Vaults 2D AOI as the nave got shorter.

Despite the differences between the results obtained in the 2D and 3D experiments, the main tendencies did not contradict each other, as the graphs in Fig. 5 show. The most prominent deviation occurs in the Floor 3D AOI. It appears that being unable to look at other parts of the interior made the participants of the 2D study pay much more attention to the Floor AOI. As the length of the nave grew, the attention paid to the Floor AOI decreased in both experiments. The deviations related to the other AOIs turned out to be minor. This is particularly important for the most important part of the interior, the presbytery. The author was concerned that the different way of switching illustrations (automatic in 2D versus manual in 3D) might reduce the number of participants looking at this AOI. However, no such effect was observed: the results for the Presbytery 2D AOI in both experiments are extremely similar.

Table 2 indicates that the deviations in fixation duration are not statistically significant. Nevertheless, a comparison of all fixations on all stimuli seems worthwhile (Fig. 7). It reveals many similarities between the 2D and 3D experiments.

In the 3D study the average duration of a single fixation within the Presbytery 2D AOI increases with the length of the nave. The average fixation duration for the Old Vaults AOI also increases slightly as the nave gets longer. The same tendency was noted in the research on flat images, although the differences between the stimuli in the current study were smaller. In both cases the visual magnetism of the vaults and the presbytery increases as they recede from the observer.

A significant change should be noted in the average duration of a fixation for the entire image (A3D = 130 ms vs A2D = 290 ms, B3D = 136 ms vs B2D = 263 ms, C3D = 139 ms vs C2D = 271 ms). The average fixation recorded for images displayed on the screen was roughly twice as long as for those shown in the VR environment. Gulhan et al. [54] noticed a similar, though less striking, shift in VR from cognitive fixations (150–900 ms) towards express fixations (90–150 ms) [63].
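The "roughly twice as long" claim can be checked directly from the reported means, and the duration ranges cited from [63] can be written as a small classifier. A minimal sketch; applying fixation-level ranges to mean values is only a rough illustration, and assigning exactly 150 ms to the cognitive class is an assumption because the cited ranges share that boundary:

```python
from typing import Dict, Tuple

# Mean fixation durations for the entire image reported in the text, in ms: (3D, 2D).
MEAN_FIXATION_MS: Dict[str, Tuple[float, float]] = {
    "A": (130, 290),
    "B": (136, 263),
    "C": (139, 271),
}


def ratio_2d_to_3d(d3_ms: float, d2_ms: float) -> float:
    """How many times longer the average 2D fixation is than the 3D one."""
    return d2_ms / d3_ms


def fixation_class(duration_ms: float) -> str:
    """Label a duration using the express (90-150 ms) and cognitive (150-900 ms) ranges."""
    if 90 <= duration_ms < 150:
        return "express"
    if 150 <= duration_ms <= 900:
        return "cognitive"
    return "other"


if __name__ == "__main__":
    for name, (d3, d2) in MEAN_FIXATION_MS.items():
        print(name, round(ratio_2d_to_3d(d3, d2), 2), fixation_class(d3), fixation_class(d2))
```

The ratios come out at about 2.23, 1.93 and 1.95, and every 3D mean falls in the express range while every 2D mean falls in the cognitive range.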

The comparison of the time the participants spent looking at individual AOIs in the 2D and 3D studies turned out to be very important. It allows one to clearly assess the impact of the way the interior is presented on how it is perceived (Fig. 8).

In the 2018 experiment the time the participants spent looking outside the screen decreased as the interior’s length increased. This was interpreted as a sign of boredom: the longer interior was slightly more complex and therefore kept the participants engaged for longer. In the 3D experiment, those exposed to the stimuli with the medium-length and the longest nave spent progressively less time looking outside the Research 2D AOI. These results, very similar in both experiments, indicate that lengthening the cathedral’s interior helps keep the participants’ interest in the Presbytery 2D AOI and the areas closest to it.
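Total visit duration aggregates visits rather than single fixations. A minimal sketch, assuming a visit is a run of consecutive fixations in one AOI lasting from the start of its first fixation to the end of its last one; this is a common definition, and the eye-tracking software used in the study may define visits differently. The tuple layout is hypothetical:

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


def total_visit_duration(fixations: List[Tuple[float, float, str]]) -> Dict[str, float]:
    """Sum visit durations per AOI from a chronological fixation list.

    Each fixation is (start_ms, duration_ms, aoi). A visit is assumed to be a
    run of consecutive fixations in the same AOI, lasting from the start of
    its first fixation to the end of its last one.
    """
    totals: Dict[str, float] = defaultdict(float)
    run_aoi: Optional[str] = None
    run_start = run_end = 0.0
    for start, duration, aoi in fixations:
        if aoi != run_aoi:
            if run_aoi is not None:  # close the previous visit
                totals[run_aoi] += run_end - run_start
            run_aoi, run_start = aoi, start
        run_end = start + duration
    if run_aoi is not None:  # close the final visit
        totals[run_aoi] += run_end - run_start
    return dict(totals)
```

Because a visit spans the short saccades between its fixations, a visit's total can exceed the sum of its fixation durations.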

For most AOIs, the direction of change (increase or decrease) was the same in both experiments. However, one tendency differed and seems to contradict the hypothesis being verified. In the VR tests, the more distant (and therefore smaller) the presbytery was in the image, the less time on average the participants spent looking at it. This tendency was not observed in the previous study.

Diagrams and graphs in Fig. 8 facilitate the comparison of the total visit duration results for the 2D and 3D tests. The diagram on the right shows more regularity. The graphs shown below reveal two deviations in the graph on the left, of which the one related to the presbytery is the most concerning. In the data from the VR recording, four out of five dependencies are regular. The scale of the irregularity for the Left Nave 2D AOI in the VR experiment (bright blue line) is similar to that for the Right Nave 2D AOI (dark blue line) in the 2D experiment. Large differences in the shape of the green and yellow lines in the charts (Fig. 8c, d), which reflect how the total visit duration changed for the Floor 2D AOI and the Presbytery 2D AOI, indicate that these two areas require analysis. Unlike those for the other AOIs visible in both experiments, these deviations proved statistically significant (ANOVA; floor: F(5,193) = 38.29, p = 0.034; presbytery: F(5,193) = 78.21, p = 0.041). The attention paid to the floor is distinctly lower for all spherical stimuli, but it decreases as the interior lengthens in both the 2D and 3D studies. By contrast, the tendencies in the visual attention paid to the presbytery differ visibly between the flat-image and the spherical-image research. Strikingly similar results were obtained for the Vaults 2D AOI (red lines).
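The F statistics above come from a one-way ANOVA across the six stimuli (three 2D, three 3D), which gives 6 − 1 = 5 between-group degrees of freedom. A minimal pure-Python sketch of how F and its degrees of freedom are computed; the p-value would then be read from the F distribution, for instance with SciPy's `scipy.stats.f.sf(F, df1, df2)`, which this sketch does not call:

```python
from statistics import mean
from typing import List, Tuple


def one_way_anova_f(groups: List[List[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA across groups."""
    values = [v for g in groups for v in g]
    grand_mean = mean(values)
    k, n = len(groups), len(values)
    group_means = [mean(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of values around their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

For the toy groups `[1, 2, 3]` and `[4, 5, 6]` this yields F = 13.5 with 1 and 4 degrees of freedom; with six stimulus groups, the first degree of freedom is 5.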

Discussion

Compared with the other eye-tracking tests mentioned in the introduction, obtaining useful data from 117 people is more than satisfactory. From a traditional point of view, however, the sample is small, so the research potentially has low power, which reduces the likelihood of reproducibility. The test is also limited by evaluating only three variants of one particular case study, which is moreover a computer-generated image rather than a real-life interior.

A similar experiment based on an actual interior and involving three different recording methods (stationary, mobile and VR eye tracking) would be very interesting and scientifically beneficial. Should such a three-part experiment be designed, its methodology could be prepared with more precision and deliberation.

The different ways of switching between successive stimuli in the 2D and 3D tests may also have affected the comparison of the visual attention paid to the presbytery. Because field triggers that would switch the images automatically could not be set up in the 3D test, one potential consequence is a lower number of fixations within the Presbytery 2D AOI. However, as the comparison of the graphs in Fig. 6 shows, this difference in the switching method could not have had a major impact.

Although the same graphics program was used for both the 2D and 3D visualizations, differences in the quality of the images displayed in the two environments may have influenced the results [64]. However, this must be regarded as inseparable from the chosen diagnostic tool. The construction of VR goggles disturbs the assessment of distance regardless of the graphic quality of the displayed stimuli [65]. Unfamiliarity with the new technology may also cause minor difficulties, as many people find judging distances in VR difficult [66, 67]. The fact that most deviations are not random but logically related to the previous test suggests that this aspect did not significantly influence the results.

Another element that might have influenced the results of the VR research was physical discomfort, mostly neck fatigue, caused by the weight of the headset and also observed in other VR eye-tracking studies [68, 69]. However, the participants in all three stimulus conditions were equally exposed to this inconvenience, which makes it unlikely to have affected the results and therefore the validity of the research.

The values discussed in the paper suggest that, even though a flat image is a major simplification of a real-life cognitive situation, the dominant reactions of the participants were sufficiently similar. Although some differences can be observed, the same conclusion can justifiably be drawn: the observers’ attention was directed much more effectively at the presbytery when the church’s interior was longer. Only three of the numerous features analyzed did not support the hypothesis of the longitudinal church effect. Even though the convergence is not perfect, it can be argued that the findings confirm the ingenuity of medieval thinkers and architects.

The amount of time the volunteers spent looking at the vaults did not change much between the two experiments. The comparative analysis showed shorter fixations in 3D. Fixation duration is usually regarded as an important indicator of visual engagement [70]. Apart from A2D and A3D, there were no significant differences in the number of observers who chose to look at the vaults: 82% of participants looked at the vaults in A2D, compared with 50% in A3D (Fig. 6). This does not support the finding of Amanda Haskins’s team that an area attractive in a 2D experiment will prove even more attractive in 3D [56]. The results of the present research show that AOIs that were visually attractive in the 2D test remained largely equally attractive in the 3D test, whereas AOIs that were less appealing in 2D became even less appealing in 3D, since their share of attention was divided with AOIs that did not exist in the 2D experiment. The much larger deviations noted in 2D than in 3D suggest that it is preferable to study and discuss the perception of architecture on the basis of 3D images. Should 2D images need to be used, several variations of a flat image should be compared rather than a single one (just as three interiors of different length were used in this research), which allows a more detailed and precise comparison and, in the end, more credible conclusions.

Conclusions

Despite the drawbacks mentioned above and numerous doubts about research conducted with VR sets, the results obtained with spherical stimuli showed greater regularity than those collected during the 2D research. As VR becomes more popular and develops further, some disadvantages of this technology are likely to diminish or disappear. Although 3D stimuli seem to be the better choice, the study does not assert that flat stimuli and stationary eye trackers should be abandoned in architectural research. The results of the comparative analysis also indicate that it is better to derive conclusions not from the data obtained for a single image but from a set of slightly modified versions of that image. If that is impossible for some reason, the lack of other values for comparison makes it difficult to evaluate the data and draw correct conclusions. For this reason, stationary eye tracking is best used for cultural heritage research on issues that are more general rather than specific.

The composition presented in the study has a simple, symmetrical layout with rhythmically repeating elements. This simplicity is likely why the flat representation of the interior still allowed a reasonable interpretation of the results. It can be assumed that the more complex the composition analyzed with an eye tracker, the greater the impact of deviations resulting from its flat representation.

Moreover, since the deviations in gaze paths between flat and spherical representations of the same faithfully modeled interior are easily noticeable, it seems reasonable to expect that they would be much greater if sketches, drawings or paintings were used instead of photo-realistic stimuli. Using such images would likely further reduce the credibility of the obtained data, so they should not be employed in research on the visual perception of architecture or other tangible heritage. Studies that have used such stimuli should be reviewed.

Data availability

The datasets generated from 68 participants and analyzed during the current study are available in the RepOD repository: Marta Rusnak, Problem of 2D representation of 3D objects in architectural and heritage studies. Re-analysis of phenomenon of longitudinal church, RepOD V1. The remaining eye-tracking data are not publicly available because not all participants consented to their release; under the applicable legal and ethical principles, this right belonged to the volunteers. All graphic materials are included in the supplement. Study consent forms are preserved in the WUST archives.

Abbreviations

- ET: Eye tracker
- VR: Virtual reality
- AR: Augmented reality
- 2D: Two-dimensional
- 3D: Three-dimensional
- AOI: Area of interest

References

Dickie G. Is psychology relevant to aesthetics? Philos Rev. 1962;71:285–302. https://doi.org/10.2307/2183429.

Makin A. The gap between aesthetic science and aesthetic experience. J Conscious Stud. 2017;24(1–2):184–213.

Zeki S, Bao Y, Pöppel E. Neuroaesthetics: the art, science, and brain triptych. Psych J. 2020;9:427–8. https://doi.org/10.1002/pchj.383.

Di Dio C, Gallese V. Neuroaesthetics: a review. Curr Opin Neurobiol. 2009;19(6):682–7. https://doi.org/10.1016/j.conb.2009.09.001.

Poole A, Ball L. Eye tracking in human-computer interaction and usability research: current status and future prospects. In: Encyclopedia of human computer interaction. 2006. p. 211–9. https://doi.org/10.1016/B978-044451020-4/50031-1

Duchowski AT. Eye tracking methodology: theory and practice. London: Springer-Verlag; 2007.

Holmqvist K, Nyström M, Andersson R, Dewhurst R, Jarodzka H, van de Weijer J. Eye tracking: a comprehensive guide to methods and measures. Oxford: Oxford University Press; 2011.

Michael I, Ramsoy T, Stephens M, Kotsi F. A study of unconscious emotional and cognitive responses to tourism images using a neuroscience method. J Islam Mark. 2019;10(2):543–64. https://doi.org/10.1108/JIMA-09-2017-0098.

Dalby Kristiansen E, Rasmussen G. Eye-tracking recordings as data in EMCA studies: exploring possibilities and limitations. Soc Interact Video-Based Stud Hum Soc. 2021. https://doi.org/10.7146/si.v4i4.121776.

Graham J, North LA, Huijbens EH. Using mobile eye-tracking to inform the development of nature tourism destinations in Iceland. In: Rainoldi M, Jooss M, editors. Eye Tracking in Tourism. Cham: Springer International Publishing; 2020. p. 201–24.

Han E. Integrating mobile eye-tracking and VSLAM for recording spatial gaze in works of art and architecture. Technol Arch Design. 2021;5(2):177–87. https://doi.org/10.1080/24751448.2021.1967058.

Chadalavada RT, Andreasson H, Schindler M, Palm R, Lilienthal AJ. Bi-directional navigation intent communication using spatial augmented reality and eye-tracking glasses for improved safety in human–robot interaction. Robot Comput Integr Manuf. 2020;61:101830. https://doi.org/10.1016/j.rcim.2019.101830.

Campanaro DM, Landeschi G. Re-viewing Pompeian domestic space through combined virtual reality-based eye tracking and 3D GIS. Antiquity. 2022;96:479–86.

Rusnak M, Fikus W, Szewczyk J. How do observers perceive the depth of a Gothic cathedral interior along with the change of its proportions? Eye tracking survey. Architectus. 2018;53:77–88.

Francuz P. Imagia. Towards a neurocognitive image theory. Lublin: Katolicki Uniwersytet Lubelski; 2019.

Walker F, Bucker B, Anderson N, Schreij D, Theeuwes J. Looking at paintings in the Vincent Van Gogh Museum: eye movement patterns of children and adults. PLoS ONE. 2017. https://doi.org/10.1371/journal.pone.0178912.

Mitrovic A, Hegelmaier LM, Leder H, Pelowski M. Does beauty capture the eye, even if it’s not (overtly) adaptive? A comparative eye-tracking study of spontaneous attention and visual preference with VAST abstract art. Acta Psychol (Amst). 2020;209:103133. https://doi.org/10.1016/j.actpsy.2020.103133.

Jankowski T, Francuz P, Oleś P, Chmielnicka-Kuter E, Augustynowicz P. The Effect of painting beauty on eye movements. Adv Cogn Psychol. 2020;16(3):213–27. https://doi.org/10.5709/acp-0298-4.

Ferretti G, Marchi F. Visual attention in pictorial perception. Synthese. 2021;199(1):2077–101. https://doi.org/10.1007/s11229-020-02873-z.

Coburn A, Vartanian O, Chatterjee A. Buildings, beauty, and the brain: a neuroscience of architectural experience. J Cogn Neurosci. 2017;29(9):1521–31. https://doi.org/10.1162/jocn_a_01146.

Al-Showarah S, Al-Jawad N, Sellahewa H. Effects of user age on smartphone and tablet use, measured with an eye-tracker via fixation duration, scan-path duration, and saccades proportion. In: Stephanidis C, Antona M, editors. Universal access in human-computer interaction. Universal access to information and knowledge: 8th international conference, UAHCI 2014, held as part of HCI International 2014, Heraklion, Crete, Greece, June 22–27, 2014, proceedings, part II. Cham: Springer; 2014. p. 3–14.

Todorović D. Geometric and perceptual effects of the location of the observer vantage point for linear-perspective images. Perception. 2005;34(5):521–44. https://doi.org/10.1068/p5225.

Itti L, Koch C. Computational modelling of visual attention. Nat Rev Neurosci. 2001;2(3):194–203. https://doi.org/10.1038/35058500.

Redi J, Liu H, Zunino R, Heynderickx I. Interactions of visual attention and quality perception. Proc SPIE. 2011. https://doi.org/10.1117/12.876712.

Rusnak M. Eye-tracking support for architects, conservators, and museologists. Anastylosis as pretext for research and discussion. Herit Sci. 2021;9(1):81. https://doi.org/10.1186/s40494-021-00548-7.

Clay V, König P, König SU. Eye tracking in virtual reality. J Eye Mov Res. 2019. https://doi.org/10.16910/jemr.12.1.3.

Brielmann AA, Buras NH, Salingaros NA, Taylor RP. What happens in your brain when you walk down the street? Implications of architectural proportions, biophilia, and fractal geometry for urban science. Urban Sci. 2022;6(1):3. https://doi.org/10.3390/urbansci6010003.

Böhme G. Atmospheric architectures: the aesthetics of felt spaces. Engels-Schwarzpaul T, editor. London, Oxford, New York: Bloomsbury; 2017.

Panofsky E. Architecture gothique et pensée scolastique, précédé de L’abbé Suger de Saint-Denis. Alençon: Les Éditions de Minuit; 1992.

Henry-Claude M, Stefanon L, Zaballos Y. Principes et éléments de l’architecture religieuse médiévale. Gavaudun: Fragile; 1997.

Scott RA. The Gothic enterprise: a guide to understanding the medieval cathedral. Berkeley: University of California Press; 2003.

Erlande-Brandenburg A, Mérel-Brandenburg AB. Histoire de l’architecture française. Du Moyen Âge à la Renaissance: IVe siècle–début XVIe siècle. Paris: Caisse nationale des monuments historiques et des sites/Mengès; 1995.

Norman E. The house of god. Goring by the sea, Sussex: Thames & Hudson; 1978.

Duby G, Levieux E. The age of the cathedrals: art and society, 980–1420. Chicago: University of Chicago Press; 1983.

Rusnak M, Chmielewski P, Szewczyk J. Changes in the perception of a presbytery with a different nave length: funnel church in eye tracking research. Architectus. 2019;2:73–83.

Zhang L, Jeng T, Zhang RX. Integration of virtual reality, 3-D eye-tracking, and protocol analysis for re-designing street space. In: Alhadidi S, Crolla K, Huang W, Janssen P, Fukuda T, editors. CAADRIA 2018 - 23rd international conference on computer-aided architectural design research in Asia learning, prototyping and adapting. 2018.

Zhang RX, Zhang LM. Panoramic visual perception and identification of architectural cityscape elements in a virtual-reality environment. Futur Gener Comput Syst. 2021;118:107–17. https://doi.org/10.1016/j.future.2020.12.022.

Crucq A. Viewing patterns and perspectival paintings: an eye-tracking study on the effect of the vanishing point. J Eye Mov Res. 2021. https://doi.org/10.16910/jemr.13.2.15.

Raffi F. Full Access to Cultural Spaces (FACS): mapping and evaluating museum access services using mobile eye-tracking technology. Ars Aeterna. 2017;9:18–38. https://doi.org/10.1515/aa-2017-0007.

Mokatren M, Kuflik T, Shimshoni I. Exploring the potential of a mobile eye tracker as an intuitive indoor pointing device: a case study in cultural heritage. Futur Gener Comput Syst. 2018;81:528–41. https://doi.org/10.1016/j.future.2017.07.007.

Jung YJ, Zimmerman HT, Pérez-Edgar K. A methodological case study with mobile eye-tracking of child interaction in a science museum. TechTrends. 2018;62(5):509–17. https://doi.org/10.1007/s11528-018-0310-9.

Reitstätter L, Brinkmann H, Santini T, Specker E, Dare Z, Bakondi F, et al. The display makes a difference: a mobile eye tracking study on the perception of art before and after a museum’s rearrangement. J Eye Mov Res. 2020. https://doi.org/10.16910/jemr.13.2.6.

Rusnak M, Szewczyk J. Eye tracker as innovative conservation tool. Ideas for expanding range of research related to architectural and urban heritage. J Herit Conserv. 2018;54:25–35.

de la Fuente Suárez LA. Subjective experience and visual attention to a historic building: a real-world eye-tracking study. Front Architect Res. 2020;9(4):774–804. https://doi.org/10.1016/j.foar.2020.07.006.

Rusnak M, Ramus E. With an eye tracker at the Warsaw Rising Museum: valorization of adaptation of historical interiors. J Herit Conserv. 2019;58:78–90.

Junker D, Nollen C. Mobile eyetracking in landscape architecture. Analysing behaviours and interactions in natural environments by the use of innovative visualizations. In: Proceedings of the international conference “Between Data and Science”: architecture, neuroscience and the digital worlds. 2017.

Kabaja B, Krupa M. Possibilities of using the eye tracking method for research on the historic architectonic space in the context of its perception by users (on the example of Rabka-Zdrój). Part 1. Preliminary remarks. J Herit Conserv. 2017;52:74–85.

Kiefer P, Giannopoulos I, Kremer D, Schlieder C, Raubal M. Starting to get bored: an outdoor eye tracking study of tourists exploring a city. In: Eye Tracking Research and Applications Symposium (ETRA). 2014.

Karakas T, Yildiz D. Exploring the influence of the built environment on human experience through a neuroscience approach: a systematic review. Front Archit Res. 2020;9(1):236–47. https://doi.org/10.1016/j.foar.2019.10.005.

Mohammadpour A, Karan E, Asadi S, Rothrock L. Measuring end-user satisfaction in the design of building projects using eye-tracking technology. Austin, Texas: American Society of Civil Engineers; 2015.

Dupont L, Ooms K, Duchowski AT, Antrop M, van Eetvelde V. Investigating the visual exploration of the rural-urban gradient using eye-tracking. Spat Cogn Comput. 2017;17(1–2):65–88. https://doi.org/10.1080/13875868.2016.1226837.

Holmqvist K, Nyström M, Mulvey F. Eye tracker data quality: what it is and how to measure it. In: Proceedings of the Symposium on Eye Tracking Research and Applications (ETRA ’12). New York: Association for Computing Machinery; 2012. p. 45–52. https://doi.org/10.1145/2168556.2168563.

Foulsham T, Walker E, Kingstone A. The where, what and when of gaze allocation in the lab and the natural environment. Vision Res. 2011;51(17):1920–31. https://doi.org/10.1016/j.visres.2011.07.002.

Gulhan D, Durant S, Zanker JM. Similarity of gaze patterns across physical and virtual versions of an installation artwork. Sci Rep. 2021;11(1):18913. https://doi.org/10.1038/s41598-021-91904-x.

van Herpen E, van den Broek E, van Trijp HCM, Yu T. Can a virtual supermarket bring realism into the lab? Comparing shopping behavior using virtual and pictorial store representations to behavior in a physical store. Appetite. 2016;107:196–207. https://doi.org/10.1016/j.appet.2016.07.033.

Haskins AJ, Mentch J, Botch TL, Robertson CE. Active vision in immersive, 360° real-world environments. Sci Rep. 2020;10:14304. https://doi.org/10.1038/s41598-020-71125-4.

Hayhoe MM, Shrivastava A, Mruczek R, Pelz JB. Visual memory and motor planning in a natural task. J Vis. 2003;3(1):49–63. https://doi.org/10.1167/3.1.6.

Tobii Pro. https://www.tobiipro.com/. Accessed 10.05.2022.

Francuz P, Zaniewski I, Augustynowicz P, Kopiś N, Jankowski T, Jacobs AM, et al. Eye movement correlates of expertise in visual arts. Front Hum Neurosci. 2018;12:87. https://doi.org/10.3389/fnhum.2018.00087.

Koide N, Kubo T, Nishida S, Shibata T, Ikeda K. Art Expertise reduces influence of visual salience on fixation in viewing abstract-paintings. PLoS ONE. 2015;10(2): e0117696. https://doi.org/10.1371/journal.pone.0117696.

Pangilinan E, Lukas S, Mohan V. Creating augmented and virtual realities: theory and practice for next-generation spatial computing. Sebastopol: O’Reilly Media; 2019.

Tatler BW, Wade NJ, Kwan H, Findlay JM, Velichkovsky BM. Yarbus, eye movements, and vision. Iperception. 2010;1(1):382.

Galley N, Betz D, Biniossek C. Fixation durations: why are they so highly variable? In: Heinen T, editor. Advances in visual perception research. Hildesheim: Nova Biomedical; 2015. p. 83–106.

Stevenson N, Guo K. Image valence modulates the processing of low-resolution affective natural scenes. Perception. 2020;49(10):1057–68. https://doi.org/10.1177/0301006620957213.

Thompson WB, Willemsen P, Gooch AA, Creem-Regehr SH, Loomis JM, Beall AC. Does the quality of the computer graphics matter when judging distances in visually immersive environments? Presence Teleoperators Virtual Environ. 2004;13(5):560–71. https://doi.org/10.1162/1054746042545292.

Choudhary Z, Gottsacker M, Kim K, Schubert R, Stefanucci J, Bruder G, et al. Revisiting distance perception with scaled embodied cues in social virtual reality. In: Proceedings—2021 IEEE Conference on Virtual Reality and 3D User Interfaces, VR 2021. 2021. p. 788–97. https://doi.org/10.1109/VR50410.2021.00106

Jamiy FE, Ramaseri CAN, Marsh R. Distance accuracy of real environments in virtual reality head-mounted displays. In: 2020 IEEE international conference on electro information technology (EIT). 2020. p. 281–7. https://doi.org/10.1109/EIT48999.2020.9208300

McGill M, Kehoe A, Freeman E, Brewster SA. Expanding the bounds of seated virtual workspaces. ACM Trans Comput-Hum Interact (TOCHI). 2020;27:1–40. https://doi.org/10.1145/3380959.

Mon-Williams M, Plooy A, Burgess-Limerick R, Wann J. Gaze angle: a possible mechanism of visual stress in virtual reality headsets. Ergonomics. 1998;41(3):280–5. https://doi.org/10.1080/001401398187035.

Nuthmann A, Smith T, Engbert R, Henderson J. CRISP: a computational model of fixation durations in scene viewing. Psychol Rev. 2010;117(2):382–405. https://doi.org/10.1037/a0018924.

Acknowledgements

Cooperation: Hardware consultation: Ewa Ramus (Neuro Device, Warszawa, Poland), graphic cooperation: Wojciech Fikus (Wroclaw University of Science and Technology, Poland), data collecting: Mateusz Rabiega (Wroclaw University of Science and Technology, Poland), Magdalena Nawrocka (Wroclaw University of Science and Technology, Poland), Małgorzata Cieślik (independent, Wrocław, Poland), Daria Kruczek (independent, London, UK). The author would like to thank Ewa Ramus, Neuro Device and the technology company Tobii for the opportunity to develop an initial prototype of VR eye-tracking implementation. It is worth mentioning the support of Andrzej Żak. Thank you Marcin!

Human participation statement: The WUST Ethical Committee approved the experiments and their protocols. Informed consent was obtained from all participants.

Funding

This work was supported by the National Science Center, Poland (NCN) under Miniatura Grant [2021/05/X/ST8/00595].

Author information

Contributions

MR: methodology, experiment design, data acquisition and analysis, writing. The author read and approved the final manuscript.

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Rusnak, M. 2D and 3D representation of objects in architectural and heritage studies: in search of gaze pattern similarities. Herit Sci 10, 86 (2022). https://doi.org/10.1186/s40494-022-00728-z

DOI: https://doi.org/10.1186/s40494-022-00728-z